Tuesday, March 16th 2010

GeForce GTX 480 has 480 CUDA Cores?

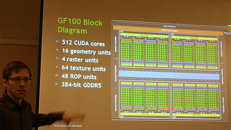

In several of its communications about Fermi as a GPGPU product (the next-generation Tesla series) and about the GF100 GPU, NVIDIA stated that GF100 has 512 physical CUDA cores (shader units) on die. In the run-up to the launch of the GeForce 400 series, however, it appears that the GeForce GTX 480, the higher-end part in the series, will have only 480 of its 512 physical CUDA cores enabled, as sources at add-in card manufacturers confirmed to Bright Side of News. Since each of the 16 streaming multiprocessors (SMs) holds 32 cores, this means that 15 of the 16 SMs will be enabled. The card has a 384-bit GDDR5 memory interface holding 1536 MB of memory.

This could be seen as a move to keep the chip's TDP down and improve yields. It's unclear whether this is a late change; if it is, benchmark scores could differ when the product is finally reviewed at launch. The publication believes the GeForce GTX 480 targets a price point of around $449-$499, while the GeForce GTX 470 is expected to be priced at $299-$349. The GeForce GTX 470 has 448 CUDA cores and a 320-bit GDDR5 memory interface holding 1280 MB of memory. In another report, DonanimHaber expects the TDP of the GeForce GTX 480 to be 298W, with the GeForce GTX 470 at 225W. NVIDIA will unveil the two on the 26th of March.
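The core and memory figures quoted above can be cross-checked with simple arithmetic. This is a minimal sketch, assuming 32 CUDA cores per SM (512 cores / 16 SMs) and that cores are disabled in whole SMs; the helper names `enabled_sms` and `mb_per_32bit_channel` are hypothetical, introduced only for this illustration.

```python
# Figures quoted in the article: GF100 has 512 CUDA cores across 16 SMs.
TOTAL_CORES = 512
TOTAL_SMS = 16
CORES_PER_SM = TOTAL_CORES // TOTAL_SMS  # 32, derived from the two figures above


def enabled_sms(cuda_cores: int) -> int:
    """SMs implied by a core count (assumes whole SMs are disabled)."""
    if cuda_cores % CORES_PER_SM:
        raise ValueError("core count is not a whole number of SMs")
    return cuda_cores // CORES_PER_SM


def mb_per_32bit_channel(bus_width_bits: int, memory_mb: int) -> float:
    """Memory sitting behind each 32-bit slice of the memory bus."""
    return memory_mb / (bus_width_bits // 32)


# (CUDA cores, bus width in bits, memory in MB), as reported
cards = {
    "GeForce GTX 480": (480, 384, 1536),
    "GeForce GTX 470": (448, 320, 1280),
}

for name, (cores, bus_bits, mem_mb) in cards.items():
    print(f"{name}: {enabled_sms(cores)} of {TOTAL_SMS} SMs, "
          f"{mb_per_32bit_channel(bus_bits, mem_mb):.0f} MB per 32-bit channel")
```

Run as-is, it reports 15 of 16 SMs for the GTX 480 and 14 of 16 for the GTX 470, with 128 MB per 32-bit channel on both cards, so the reported core counts and memory sizes are consistent with each other.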

Sources:

Bright Side of News, DonanimHaber


79 Comments on GeForce GTX 480 has 480 CUDA Cores?

Just gotta wait till the GTX 480 hits $400 AUD :D then I'll be happy

Odd to me, though, that the high-end part still has one cluster disabled, and the 470 only two. Fermi, you are a troubled chip, aren't you?

I hope... with the smaller process, 28 nm?

I've said it once and I'll say it again:

BRING ON THE REVIEWS

But indeed, let's wait for the benchies.

28nm is going to bring about a return of RV770/G92 for both companies. It should be interesting.

Nvidia will get 1024 shaders or some odd number; they are fans of that: 96, 112, 216 shaders and so on, and 384- and 448-bit memory buses...

Nvidia's design is already very complex.

I don't think Nvidia has followed a real trend.

ATI:

55 nm -> 40 nm: twice the shaders, +30% die size (loads of added functionality!)

40 nm -> 28 nm: no added functionality, a 5-10% die size increase, 3200 shaders, and the same ratio for ROPs, texture units and such.

Depends on whether ATI is certain that their architecture will still scale well!

ATI's tessellation unit isn't very bad really, not relative to die size. ATI has proven to have a very good design when it comes to performance vs. die size: cheaper to make, and cheaper to sell if the competition is there.

Just throwing some thoughts out there.

I think they should pay a bit more heed to Murphy's law and make the product for September. But who knows what will happen... they probably have people much smarter than me sitting in a room somewhere thinking about this all day long.

Seriously, let's wait for what's not even out first.

The folding power it will bring :rolleyes:

Well, in a little while we'll know for sure whether overclocking sucks or not.

Hopefully it can be unlinked like the other cards, but with these new cards you never know T.T