Wednesday, September 7th 2011

MSI Calls Bluff on Gigabyte's PCIe Gen 3 Ready Claim

In August, Gigabyte made a claim that baffled MSI at the very least: that scores of its motherboards are "Ready for Native PCIe Gen. 3." Along with the likes of ASRock, MSI was one of the first to ship motherboards featuring PCI-Express 3.0 slots, and the company took pains to educate buyers on what PCI-E 3.0 is and how to spot a motherboard that features it. MSI thinks that Gigabyte made a factual blunder bordering on misinformation by claiming that as many as 40 of its motherboards are "Ready for Native PCIe Gen. 3." MSI put its engineering and PR teams to work building a technically sound presentation rebutting Gigabyte's claims. More slides and details follow.

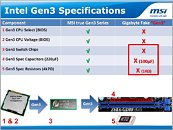

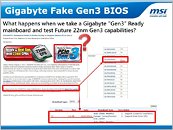

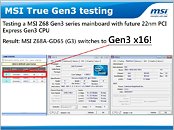

MSI begins by explaining that PCIe 3.0 support isn't as easy as laying a wire between the CPU and the slot: it needs specification-compliant lane switches and electrical components, so you can't count on certain Gigabyte boards for future-proofing. MSI did some PCI-Express electrical testing using a 22 nm Ivy Bridge processor sample. MSI claims that, apart from the G1.Sniper 2, none of Gigabyte's so-called "Ready for Native PCIe Gen. 3" motherboards are what the badge claims them to be, and that the badge is extremely misleading to buyers. Time to refill the popcorn bowl.

Source: MSI

286 Comments on MSI Calls Bluff on Gigabyte's PCIe Gen 3 Ready Claim

I understand you not liking GB for this; that is not only your right, it is totally understandable. However, calling anyone who trusts GB a sucker is going a bit far. At least have some respect and say something like "gullible."

It said HM (HyperMemory) up to 1GB GDDR5, but it clearly can only have 512MB of GDDR5, and the rest is DDR2/3. It also doesn't state the actual memory size on the box. Definitely shady and misleading. They screwed up.

As for the topic, I think this could potentially be a big blow to GB. The HyperMemory thing is shady advertising, but it is still somewhat gullible of the buyer to buy it when the specs say 512MB. This doesn't excuse GB, but it makes it a bearable mistake. Here, however, it seems they have no excuse. We will just have to wait and see.

In this case, we know that whatever it turns out to be, Giga IS responsible. This time around it's clearly official marketing, not just random misleading box art or stickers on a few products.

I don't fully get the slides, can someone summarize them for me so they are a bit more understandable?

MSI: we have PCI-E3... and Gigabyte doesn't. Liars.

@ Mussels :roll: I meant a more detailed summary :rolleyes:

As far as "Native" goes, sure: once you add an Ivy Bridge, it's native to that NB chipset!

All I am saying is that they have reported (however truthfully) that the UD4 isn't compliant, and you came on and started talking about the UD7, which, from what I read in the story TPU put up, was not even mentioned.

I agree that it doesn't really disprove anything, as you said, but why did you bring the UD7 into this in the first place? Nowhere in the article is it mentioned.

Is this the UD7 he might be talking about?

I've talked with some knowledgeable motherboard folks and they all say that the CPU will stay in PCIe 2.0 x16 mode when you install a PCI-E 3.0 card in a board without the proper switches.

vr-zone.com/articles/the-upgrade-path-to-ivy-bridge-might-be-blocked-by-changes-to-uefi/13513.html

Hmmm, Gigabyte = Dual BIOS + No UEFI :)

www.gigabyte.us/press-center/news-page.aspx?nid=1048

So yeah, there you have it: even at the time of the original press release they already knew they were lying, since the UD7 has the NF200 and you will NOT have maximum data bandwidth for future discrete graphics cards.

Yeah, there are going to be loads of ****ed off Gigabyte customers once they find out their 2011 system can't run Windows 8 :p

Even OA3, which is for large SIs, doesn't need UEFI, as the BIOS strings can still be built into older BIOSes.

Also, if I remember rightly, Gigabyte's UD7 board doesn't activate the NF200 chip until three or more PCIe x16 slots are in use. That is different from boards such as Asus's ROG boards, which send all PCIe traffic via the NF200, so in theory it should work for the first PCIe slot too. I think there are two ways to look at the news. Gigabyte have said the boards are "Native PCIe Gen. 3" ready, which suggests you can use PCIe3.

Now, I know you can use PCIe3 without the switches, but only in the first slot. MSI's pictures are misleading because they show the switches below the path of the CPU; in reality these chips exist to bridge PCIe lanes.

This means the first PCIe x16 slot has a direct connection to the CPU, so it will become a PCIe3 slot. However, the speed through the switches will be reduced to the limits of the switch.

So something like this:

Ivy Bridge CPU-------PCIe3---Switch Gen2------PCIe2

vs

Ivy Bridge CPU-------PCIe3---Switch Gen3------PCIe3
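The two chains above can be modelled with a short Python sketch. It assumes, as a simplification, that each hop trains to the highest PCIe generation both of its ends support and that the slowest hop limits the whole path. The generation numbers and the function names `hop_generation` and `path_generation` are hypothetical illustrations, not MSI's or Gigabyte's own analysis, and the signal-integrity limits of lane switches and other components that MSI's slides focus on are not captured.

```python
def hop_generation(end_a: int, end_b: int) -> int:
    """Assumed model: a single link trains to the highest generation both ends support."""
    return min(end_a, end_b)


def path_generation(devices) -> int:
    """Effective PCIe generation of a chain such as CPU -> switch -> card.

    `devices` lists the highest generation each device supports, in path order.
    Under this simplified model, the slowest hop limits the whole path.
    """
    if len(devices) < 2:
        raise ValueError("a path needs at least two devices")
    hops = [hop_generation(a, b) for a, b in zip(devices, devices[1:])]
    return min(hops)


if __name__ == "__main__":
    # Ivy Bridge CPU (Gen3) -> Gen2 switch -> Gen3 card
    print(path_generation([3, 2, 3]))  # 2
    # Ivy Bridge CPU (Gen3) -> Gen3 switch -> Gen3 card
    print(path_generation([3, 3, 3]))  # 3
    # First x16 slot wired straight to the CPU, no switch in between
    print(path_generation([3, 3]))  # 3
```

Under this model, the same Gen3 card ends up at Gen2 in any slot that sits behind a Gen2 switch, which is the situation the first chain describes.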

Now, depending on your view, you might say Gigabyte are the good guys, as they are giving existing customers PCIe3, albeit only in one slot, whereas other manufacturers are charging you to upgrade for Gen 3 support.

They could have made it clearer, I agree, but I think MSI are mudslinging here and they will come out worse for it, especially if Ivy Bridge needs them to wipe all their UEFI BIOSes, which can't be done at a service or reseller level!

WHY IS MSI WRONG? ...because the PCI-e 3.0 physical layer is the same as PCI-e 2.0. The only thing that is different is the 128b/130b encoding. The data is encoded and decoded by the PCI-e controller in the processor (Sandy Bridge) and in the graphics card. So PCI Express 3.0 has the same physical characteristics as PCI Express 2.0, which means that if the PCI-e controller knows how to encode and decode PCI-E 3.0 data, then we can transfer PCI-E 3.0 data through a PCI-E 2.0 physical link.
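For context on the encoding point: PCIe 2.0 signals at 5 GT/s per lane with 8b/10b encoding, while PCIe 3.0 raises the rate to 8 GT/s and uses 128b/130b encoding. The Python sketch below computes per-direction payload bandwidth from those two figures, ignoring packet and protocol overhead. The "encoding only" row is a hypothetical 5 GT/s link with 128b/130b encoding, included to separate the effect of the encoding change from the rate change; the function names are illustrative only.

```python
from fractions import Fraction

# (signaling rate in GT/s per lane, payload bits per transmitted bit)
LINKS = {
    "PCIe 2.0 (5 GT/s, 8b/10b)": (5, Fraction(8, 10)),
    "Encoding only (5 GT/s, 128b/130b), hypothetical": (5, Fraction(128, 130)),
    "PCIe 3.0 (8 GT/s, 128b/130b)": (8, Fraction(128, 130)),
}


def lane_mb_per_s(gt_per_s, efficiency):
    """Payload bandwidth of one lane, one direction, in MB/s.

    GT/s times the encoding efficiency gives Gbit/s of payload; dividing
    by 8 converts to GB/s and multiplying by 1000 converts to MB/s.
    Packet and protocol overhead are ignored.
    """
    return float(Fraction(gt_per_s) * efficiency * 1000 / 8)


def link_gb_per_s(gt_per_s, efficiency, lanes=16):
    """Payload bandwidth of a multi-lane link, one direction, in GB/s."""
    return lane_mb_per_s(gt_per_s, efficiency) * lanes / 1000


if __name__ == "__main__":
    for name, (rate, eff) in LINKS.items():
        print(f"{name}: {lane_mb_per_s(rate, eff):.1f} MB/s per lane, "
              f"{link_gb_per_s(rate, eff):.2f} GB/s at x16")
```

By these figures, the encoding change alone is worth roughly a 23% gain per lane (about 615 MB/s versus 500 MB/s); most of PCIe 3.0's near doubling to about 985 MB/s per lane comes from the higher signaling rate.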

You decide, but I know why.

The only question I have is why MSI singled out Gigabyte. What makes them different from, say, ASUS?

ASUS hasn't mentioned PCIe 3.0 at all, that I can tell.

Read this vr-zone.com/articles/the-upgrade-path-to-ivy-bridge-might-be-blocked-by-changes-to-uefi/13513.html

lol