Tuesday, September 27th 2011

ZOTAC Announces GeForce GT 520 in PCI and PCIe x1 Interface Variants

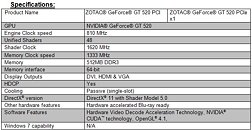

ZOTAC International, a leading innovator and channel manufacturer, today expands its value GeForce GT 520 lineup with new PCI and PCI Express x1 form factors for users with pre-built systems that have limited expansion capabilities. The new ZOTAC GeForce GT 520 PCI and PCI Express x1 graphics cards breathe new life into older systems by delivering a performance punch and new video capabilities.

"Upgrading your graphics card is the easiest way to boost your system performance and gain new capabilities. The new ZOTAC GeForce GT 520 PCI and PCI Express x1 graphics cards show that you can experience good graphics without upgrading the rest of your system," said Carsten Berger, marketing director, ZOTAC International.

The ZOTAC GeForce GT 520 PCI and PCI Express x1 graphics cards provide DVI, HDMI and VGA outputs with dual simultaneous independent display support for an instant dual-monitor upgrade. The low-profile form factor enables the ZOTAC GeForce GT 520 PCI and PCI Express x1 graphics cards to fit easily in compact pre-built systems that have height limitations in addition to expansion limitations.

It's time to play with the ZOTAC GeForce GT 520 PCI and PCI Express x1 graphics cards.

"Upgrading your graphics card is the easiest way to boost your system performance and gain new capabilities. The new ZOTAC GeForce GT 520 PCI and PCI Express x1 graphics cards shows that you can experience good graphics without upgrading the rest of your system," said Carsten Berger, marketing director, ZOTAC International.The ZOTAC GeForce GT 520 PCI and PCI Express x1 graphics cards provide DVI, HDMI and VGA outputs with dual simultaneous independent display support for an instant dual-monitor upgrade. The low-profile form factor enables the ZOTAC GeForce GT 520 PCI and PCI express x1 graphics card to easily fit in compact pre-built systems with height limitations in addition to expansion limitations.It's time to play with the ZOTAC GeForce GT 520 PCI and PCI Express x1 graphics card.

36 Comments on ZOTAC Announces GeForce GT 520 in PCI and PCIe x1 Interface Variants

I've used x1/PCI cards in servers without onboard video before, leaving the valuable x16/x8 slots available for RAID controllers, NICs, etc. A niche benefit, but a benefit nonetheless.

Also nice as a secondary card. It should offer better compatibility alongside newer-series cards than the ancient 6000/7000/8000-series x1 and PCI cards currently available.

Anyone else feeling nostalgic?

The last Nvidia PCI card was the GeForce 6200, if I'm correct. An update is certainly due.

Also, why put the passive heatsink on the x16 version, but an active, whiny one on the x1 one? Makes no sense to me, should be the other way round, if anything.

[H]@RD5TUFF is using one, TPU has a GT 520 review stating it's supported, and the only requirements that nVidia give are:

I can't see the manufacturer having control over PhysX (or CUDA) support.

More seriously...

I can't see performance being affected all that much by the bandwidth provided:

physxinfo.com/news/880/dedicated-physx-gpu-perfomance-dependence-on-pci-e-bandwidth/

If you have a truly passive system, then yes, this may be a concern. I don't, as the intake fan blows across all my peripheral cards, and I've been meaning to purchase the rear extractor for my Lian-Li case. The fan would give a bit of overclocking room for moar physics! I'd wager most of our systems have some level of airflow.

Most seriously...

I would just go ahead and buy this card, knowing that there is a chance of PhysX being disabled, but I don't endorse it for anyone else. End disclaimer.