Tuesday, May 19th 2015

Top-end AMD "Fiji" to Get Fancy SKU Name

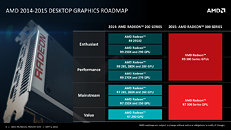

Weeks ago, we were the first to report that AMD could give its top SKU carved out of the upcoming "Fiji" silicon a fancy name à la GeForce GTX TITAN, breaking away from the R9 3xx mold. A new report by SweClockers confirms that AMD is carving out at least one flagship SKU based on the "Fiji" silicon, which will be given a fancy brand name. This product will go head-on against NVIDIA's GeForce GTX TITAN X. We know that AMD is preparing two SKUs out of the fully-loaded "Fiji" silicon - an air-cooled variant with 4 GB of memory; and a liquid-cooled one, with up to 8 GB of memory. We even got a glimpse of what this card could look like. AMD is expected to unveil its "Fiji"-based high-end graphics card at E3 (mid-June, 2015), with a product launch a week after.

Source:

SweClockers

56 Comments on Top-end AMD "Fiji" to Get Fancy SKU Name

One GPU to rule them all, One GPU to find them; One GPU to bring them all

and in the darkness bind them.

But excited for sure, been looking forward to it for quite some time!

If it's aimed at the Titan cards, it will be expensive.

You're forgetting DirectX 12 and increasing proliferation of 64-bit games. VRAM is quickly becoming a limiting factor, especially as more and more games are starting to use ridiculously high resolution textures.

Price will be a main concern.

How about the lack of software optimization from AMD? Many people switched from Hawaii to Maxwell because of weak driver support. That's the only weakness they are facing right now. Maxwell is still performing great in games, so paying extra is worth it.

I'm thinking about a vanilla R9-390 (non-X). I don't need absolute top performance, but I don't think I'll feel happy with an "old" architecture packaged in new cards under the R9-380X. In that case I may just as well stick with my trusty HD7950 3GB, because I'm not willing to pay 600 € ($ = €) for a graphics card...

The only real reason I want to buy an R9-390 is because I simply want to have something new to fiddle with, to explore and admire its tech capabilities. I won't mind the extra framerate and future-proofing. I just don't see any point in having a 4K display. For what? It doesn't make the image any more "HD" than 1080p unless you're gaming on a 40 inch monitor... especially not in games. This isn't video, this is real-time rendering.

What sells more hardware: Common sense or marketing?

Companies are in the business of selling product. How much product would they sell if they admitted that the new generation of tech is ideally suited for the previous generation ecosystem?

Now, if vendor "A" tells world+dog that their cards are 4K capable/ready, how much worse does it sound if vendor "B" touts 1080p capable?

The other issue directly related to AMD is, why the hell would they target 1080p with their marketing when their own AIB partner is proclaiming 4K?

Where would I get 4K? When I was testing a 42 inch LCD as a monitor replacement. With such a big screen, 1080p didn't feel right because of the massive pixels. But you felt almost like you were in the game, because you could only see game content and no room :D Stuffing 4K into such tiny formats is pointless. Like I said, pixel count doesn't make games better looking, especially not since we have FSAA... Games != video. Resolution doesn't affect anything within object edges. It only makes edges better looking without FSAA. Are people really still playing games without FSAA? I know I haven't since the GeForce 2 days. 2xFSAA was a must-have, and since the GeForce 6600 I've been using 4x regularly. I literally can't imagine playing a game without any FSAA these days. I'd rather not play it at all than play without FSAA...

Gotta love the free market, run by greed & stupidity.

If current pricing trends continue, this will be the last PC I build. The only component that has come down in price since I built my last one is the SSD. Everything else (equivalent) has remained the same or increased in price. Good job :clap: