Sunday, April 30th 2017

NVIDIA to Support 4K Netflix on GTX 10 Series Cards

Until now, only users with Intel's latest Kaby Lake processors could enjoy 4K Netflix, due to some strict DRM requirements. Now NVIDIA is taking it upon itself to let users with one of its GTX 10 series graphics cards (minus the 1050, at least for now) enjoy some 4K Netflix and chillin'. You do have to jump through a few hoops to get there, though none of them should pose much of a problem.

Sources:

NVIDIA Customer Help Portal, Eteknix

According to NVIDIA, the requirements for Netflix UHD playback are as follows:

- An NVIDIA driver version provided exclusively via the Microsoft Windows Insider Program (currently 381.74).

- No other GeForce driver supports this functionality at this time.

- If you are not currently registered for the WIP, follow this link for instructions to join: insider.windows.com/

- An NVIDIA Pascal-based GPU: GeForce GTX 1050 or greater with a minimum of 3 GB of memory.

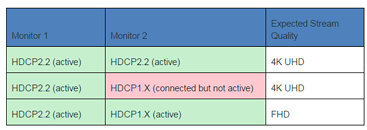

- HDCP 2.2-capable monitor(s). Please see the additional section below if you are using multiple monitors and/or multiple GPUs.

- The Microsoft Edge browser or the Netflix app from the Windows Store.

- An internet connection of approximately 25 Mbps or faster.

In a multi-monitor configuration, whether on a single GPU or on multiple GPUs that are not linked together in SLI/LDA mode, 4K UHD streaming will only happen if all active monitors are HDCP 2.2-capable. If any active monitor is not HDCP 2.2-capable, quality will be downgraded to FHD.

What do you think? Is this enough to tide you over to the green camp? Do you use Netflix on your PC?
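NVIDIA's checklist and multi-monitor rule can be condensed into a small eligibility check. What follows is a minimal Python sketch with several assumptions. The `Setup` class, its field names and the player labels are hypothetical. The 25 Mbps figure, which NVIDIA only calls approximate, is treated as a hard threshold. Only the case NVIDIA describes is modeled: a single GPU, or GPUs not linked in SLI/LDA.

```python
from dataclasses import dataclass, field

# Hypothetical model of NVIDIA's published checklist; these names are
# illustrative assumptions, not a real NVIDIA or Netflix API.
REQUIRED_DRIVER = "381.74"      # Windows Insider Program driver named by NVIDIA
MIN_GPU_MEMORY_GB = 3           # "minimum 3GB memory"
MIN_BANDWIDTH_MBPS = 25         # NVIDIA says "approximately"; treated as a hard cutoff here
SUPPORTED_PLAYERS = {"edge", "netflix_store_app"}


@dataclass
class Setup:
    driver_version: str
    pascal_gpu: bool            # GeForce GTX 10 series (Pascal)
    gpu_memory_gb: float
    player: str
    bandwidth_mbps: float
    # One entry per active monitor: True if that monitor is HDCP 2.2-capable.
    monitor_hdcp22: list[bool] = field(default_factory=list)


def unmet_requirements(s: Setup) -> list[str]:
    """Return the non-display requirements this setup fails."""
    problems = []
    if s.driver_version != REQUIRED_DRIVER:
        problems.append("driver")
    if not s.pascal_gpu or s.gpu_memory_gb < MIN_GPU_MEMORY_GB:
        problems.append("gpu")
    if s.player not in SUPPORTED_PLAYERS:
        problems.append("player")
    if s.bandwidth_mbps < MIN_BANDWIDTH_MBPS:
        problems.append("bandwidth")
    return problems


def stream_quality(s: Setup) -> str:
    """Return 'UHD' or 'FHD' for an eligible setup, per the multi-monitor rule."""
    if not s.monitor_hdcp22:
        raise ValueError("at least one active monitor is required")
    if unmet_requirements(s):
        return "not eligible for UHD"
    # Single GPU, or GPUs not linked in SLI/LDA: one monitor without
    # HDCP 2.2 is enough to downgrade the stream to FHD.
    return "UHD" if all(s.monitor_hdcp22) else "FHD"
```

Consider a 6 GB Pascal card on the Insider driver, driving one HDCP 2.2 monitor and one older monitor. In this sketch it would get FHD rather than UHD.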

59 Comments on NVIDIA to Support 4K Netflix on GTX 10 Series Cards

That said, you can still easily spot HDR, so...

I very much doubt you can see the difference between 720p, 1080p and 4K from 10 feet away... You do, however, notice the nice features that come with a newer 4K TV, such as HDR and the better color you get from a full color gamut and full-array lighting zones.

So far I've only seen HDR10 in action, which is the most basic of the HDR standards, and it's fantastic...

I originally watched Sense8 and Marco Polo in regular 4K and then again in 4K HDR, and yes, there is a dramatic difference...

In my honest opinion, 4K is utterly useless unless it comes with HDR and you have the ability to stream at 30 Mb/s...

I know it says 25 Mb/s, but it doesn't actually stream at a constant 25 Mb/s... It goes back and forth between 18 Mb/s and 30 Mb/s...

And yes, Netflix 4K UHD is a bit better than Vudu, Amazon and Google Play Movies.

Like you said, I'd appreciate better colors, but I wouldn't really want 4K to waste my bandwidth for no real reason. Then again, my LCD isn't HDR yet, so that's not much of an issue. It does have micro dimming, so blacks are really pitch black, which is nice.
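To put the bandwidth figures in this thread in perspective, a stream's bitrate converts directly into data used per hour of viewing. The sketch below assumes decimal units (1 GB = 1000 MB) and a bitrate held constant for the whole hour. As noted above, real streams fluctuate, so treat the results as rough estimates.

```python
def gb_per_hour(mbps: float) -> float:
    """Convert a stream bitrate in megabits/s to decimal gigabytes per hour."""
    megabits = mbps * 3600      # seconds in one hour
    megabytes = megabits / 8    # 8 bits per byte
    return megabytes / 1000     # decimal GB (1 GB = 1000 MB)


for rate in (18, 25, 30):
    print(f"{rate} Mb/s -> {gb_per_hour(rate):.2f} GB/hour")
# 18 Mb/s -> 8.10 GB/hour
# 25 Mb/s -> 11.25 GB/hour
# 30 Mb/s -> 13.50 GB/hour
```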

I'll probably try this out on my computer a couple of times and then go back to using the Xbox.

HDR does work at 1080p. Your TV must support HDR, which means it's going to be a 4K TV anyway, but if network conditions drop below the 4K range, the stream will be 1080p HDR or even as low as 480p HDR. Netflix will send you any number of different bitrates and resolutions. The issue is that someone with a 1080p TV, or with a 4K TV but an old Chromecast or Apple TV, won't have the hardware to decode HDR HEVC streams and make any use of the extra dynamic range or color gamut.
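The adaptive behavior described in the comment above can be illustrated with a simple throughput-based rule. The stream steps down from 4K to 1080p or 480p as throughput drops, and uses HDR only when the device can decode HDR HEVC. This is a hypothetical sketch, not Netflix's actual adaptation algorithm. The bitrate ladder values, the 0.8 safety factor and the `pick_rendition` name are all illustrative assumptions.

```python
# Hypothetical bitrate ladder in Mb/s, sorted from lowest to highest.
# These numbers are for illustration only, not Netflix's real encodes.
LADDER = [("480p", 1.5), ("720p", 3.0), ("1080p", 5.0), ("2160p", 16.0)]


def pick_rendition(throughput_mbps: float, can_decode_hdr_hevc: bool,
                   safety: float = 0.8) -> str:
    """Pick the highest rung that fits within a fraction of measured throughput."""
    budget = throughput_mbps * safety
    chosen = LADDER[0][0]  # the lowest rung is the fallback when nothing fits
    for name, bitrate in LADDER:
        if bitrate <= budget:
            chosen = name
    # HDR is only useful if the player hardware can decode HDR HEVC.
    return f"{chosen} {'HDR' if can_decode_hdr_hevc else 'SDR'}"
```

With these made-up numbers, `pick_rendition(10, True)` drops to 1080p HDR. `pick_rendition(25, False)` still picks the 2160p rung, but in SDR, matching the older streaming box case described above.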

Another fun fact: Optimus allows 4K playback via Kaby Lake while the dGPU (the NVIDIA card) accelerates another application, provided you can meet HDCP compliance to play at all.

I think this is only a very new thing for 4K TVs, just to justify their still outrageous prices.

It might not have the full contrast range, but as far as color input/HDMI support goes, it's definitely been around for a while.

I wonder how they'll handle this scam they've been running when people try to play HDR content on the $2,000 HDR monitors that go on sale this month. On the desktop, your gaming cards only output 8-bit; they only do 10-bit in DirectX games.

EDIT: And as for the poster above with the 12-bit TV, I highly doubt it. 12-bit panels today cost over $40,000 and hardly any studio has them. Plus, until recently there was no software that even let you edit video/photos at 12 bits, and no video cards besides the Quadro. CS6 only recently added support for 30-bit displays and 10-bit color editing. You can find a good article about Samsung, I believe, lying about their panel bit depth being 10 when it was really 8. They didn't know. And both Sony and Samsung refuse to tell customers the specs of their panels.
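The bit-depth figures in these comments follow from powers of two. A "30-bit" display means 10 bits for each of the red, green and blue channels. The short Python sketch below computes shades per channel and total colors for 8-, 10- and 12-bit panels. The function name is just for illustration.

```python
def shades_and_colors(bits_per_channel: int) -> tuple[int, int, int]:
    """Return (shades per channel, total RGB bits, total displayable colors)."""
    shades = 2 ** bits_per_channel
    return shades, 3 * bits_per_channel, shades ** 3


for bpc in (8, 10, 12):
    shades, total_bits, colors = shades_and_colors(bpc)
    print(f"{bpc}-bit per channel ({total_bits}-bit RGB): {shades} shades, {colors:,} colors")
# 8-bit per channel (24-bit RGB): 256 shades, 16,777,216 colors
# 10-bit per channel (30-bit RGB): 1024 shades, 1,073,741,824 colors
# 12-bit per channel (36-bit RGB): 4096 shades, 68,719,476,736 colors
```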

Dell and ASUS or Acer are only now, this month and over the summer, coming out with their HDR 4K PC monitors: 1000 nits and quantum dot (not the Dell), 100% Adobe RGB and 95-97.7% DCI-P3. That is off the charts for monitors and better than anything the pros have. They should be TRUE 10-bit panels, not that FRC crap I mentioned above. And again, you need a Quadro card for 10-bit output.

www.nvidia.com/docs/IO/40049/TB-04701-001_v02_new.pdf

The only thing that requires a Quadro is 10-bit for OpenGL (which kinda sucks for Linux, but Linux has more serious issues anyway).

And yes, 10-bit requires an end-to-end solution to work; that's in no way specific to Nvidia.