Monday, September 30th 2013

Radeon R9 290X Clock Speeds Surface, Benchmarked

Radeon R9 290X is looking increasingly good on paper. Most of its rumored specifications and SEP pricing were reported late last week, but its clock speeds eluded us. A source who goes by the name Grant Kim, with access to a Radeon R9 290X sample, disclosed its clock speeds and ran a few tests for us. To begin with, the GPU core is clocked at 1050 MHz. There is no dynamic-overclocking feature, but the chip can lower its clocks, taking load and temperatures into account. The memory is clocked at 1125 MHz (4.50 GHz GDDR5-effective). At that speed, the chip churns out 288 GB/s of memory bandwidth over its 512-bit wide memory interface. Those clock speeds were reported to us by the GPU-Z client, so we give them the benefit of the doubt, even if they go against AMD's ">300 GB/s memory bandwidth" bullet-point in its presentation.
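For readers who want to check the bandwidth figure, here is a minimal Python sketch of the arithmetic. It assumes the usual GDDR5 convention of four data transfers per memory clock (which is how 1125 MHz becomes 4.50 GHz effective) and counts 1 GB as 10^9 bytes. The function names are ours, purely for illustration.

```python
def gddr5_bandwidth_gbs(memory_clock_mhz, bus_width_bits, transfers_per_clock=4):
    """Peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    transfers_per_clock=4 is an assumption: the usual GDDR5 ratio between
    the reported memory clock and the "effective" data rate.
    """
    effective_rate_hz = memory_clock_mhz * 1e6 * transfers_per_clock
    bytes_per_transfer = bus_width_bits / 8
    return effective_rate_hz * bytes_per_transfer / 1e9


def min_clock_mhz_for(bandwidth_gbs, bus_width_bits, transfers_per_clock=4):
    """Memory clock (MHz) at which the given bandwidth is reached."""
    bytes_per_transfer = bus_width_bits / 8
    return bandwidth_gbs * 1e9 / (bytes_per_transfer * transfers_per_clock) / 1e6


reported = gddr5_bandwidth_gbs(1125, 512)   # clocks as read out by GPU-Z
threshold = min_clock_mhz_for(300, 512)     # clock needed to reach 300 GB/s

print(f"{reported:.1f} GB/s at 1125 MHz")
print(f"{threshold:.3f} MHz needed for 300 GB/s")
```

Under those assumptions, the reported 1125 MHz works out to 288 GB/s, and the memory would have to run above roughly 1172 MHz (about 4.69 GHz effective) on the same 512-bit bus to clear the 300 GB/s mark.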

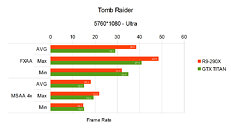

The tests run on the card cover frame-rates and frame-latency for Aliens vs. Predator, Battlefield 3, Crysis 3, GRID 2, Tomb Raider (2013), RAGE, and TESV: Skyrim, in no-antialiasing, FXAA, and MSAA modes, at a resolution of 5760 x 1080 pixels. An NVIDIA GeForce GTX TITAN, running the latest WHQL driver, was pitted against it. We must remind you that at that resolution, AMD and NVIDIA GPUs tend to behave a little differently due to the way they handle multi-display, and so it may be an apples-to-coconuts comparison. In Tomb Raider (2013), the R9 290X romps ahead of the GTX TITAN, with higher average, maximum, and minimum frame rates in most tests.

RAGE

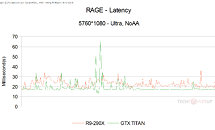

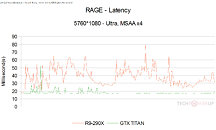

The OpenGL-based RAGE is a different beast. With AA turned off, the R9 290X puts out lower frame-rates overall, and higher frame latency (lower is better). It gets even more inconsistent with AA cranked up to 4x MSAA. Without AA, frame-latencies of both chips remain under 30 ms, with the GTX TITAN's looking lower and more consistent. At 4x MSAA, the R9 290X is all over the place with frame latency.

TESV: Skyrim

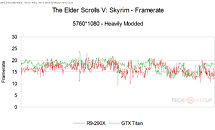

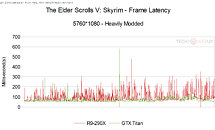

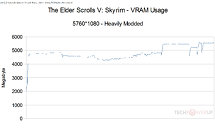

The tester somehow got the game to work at 5760 x 1080. With no AA, both chips put out similar frame-rates, with the GTX TITAN having a higher mean, and the R9 290X spiking more often. In the frame-latency graph, the R9 290X has a bigger skyline than the GTX TITAN, which is not something to be proud of. As an added bonus, the VRAM usage of the game was plotted throughout the test run.

GRID 2

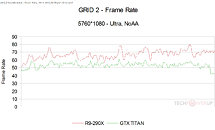

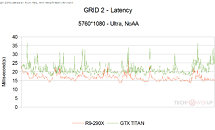

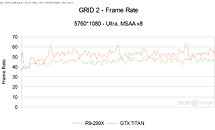

GRID 2 is a surprise package for the R9 290X. The chip puts out significantly and consistently higher frame-rates than the GTX TITAN at no-AA, and offers lower frame-latencies. Even with MSAA cranked all the way up to 8x, the R9 290X holds out pretty well on the frame-rate front, but not on frame-latency.

Crysis 3

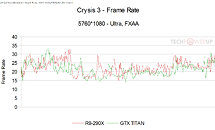

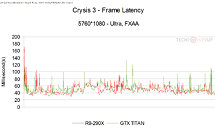

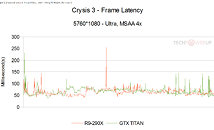

This CryEngine 3-based game offers MSAA and FXAA anti-aliasing methods, and so it wasn't tested without either enabled. With 4x MSAA, both chips offer similar frame-rates and frame-latencies. With FXAA enabled, the R9 290X offers higher frame-rates on average, and lower latencies.

Battlefield 3

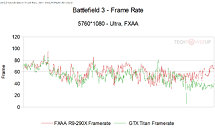

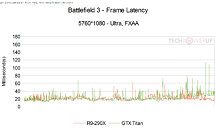

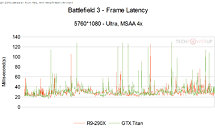

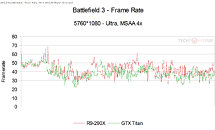

That leaves us with Battlefield 3, which, like Crysis 3, supports MSAA and FXAA. At 4x MSAA, the R9 290X offers higher frame-rates on average, and lower frame-latencies. With FXAA, it gets better for AMD's chip on both fronts.

Overall, at 1050 MHz (core) and 4.50 GHz (memory), it's advantage-AMD, looking at these graphs. Then again, we must remind you that this is 5760 x 1080 we're talking about. Many thanks to Grant Kim.

100 Comments on Radeon R9 290X Clock Speeds Surface, Benchmarked

Looks like AMD's card partners are going to enjoy their time tweaking this chip.

I also did not mean dynamic underclocking as a negative. That is just how the article described it, without the term I used. I was wondering if such a term would be correct.

Considering this is almost hand in hand with Titan at 800 MHz, 1 GHz would provide somewhere around 20% more performance, depending on achievable memory speeds.

27% increase in SP count, but W1zz's benchmarks of Titan vs. 7970 show the AMD card only 12% slower at that resolution, so either the card is crippled and someone got it for other testing, or they have failed to reach their target.

An efficiency improvement in the 7970 for higher core speeds and better memory would have been a better investment for them if this were true. I doubt this to be true; instead it's most likely a crippled ES card.

www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_Titan/22.html

Or it's meant to show the validity of the core architecture at the same speed as Titan, while consuming less power, and possibly having more features.

They (AMD) redefined it well bro... :laugh:

:toast:

I'm thinking the Titan benches are dubious due to the huge latency spikes. It's one of the most consistent cards out there. Unless of course it's not that good at handling triple screen resolutions?

Either way, those clocks look far too low on the core for that same manufacturing process. You'd think AMD would learn from low clock speeds (the original 7970). I call 'meh' until it's official.

These benchmarks mean nothing, and I highly doubt the core or memory clock is correct. Like, way off. I certainly don't see these benchmarks as being very open and clear.

Either way, if the benchmarks are legit, it's a great price point for the kind of performance it will put out. I can't see why people are complaining, except that it's a benchmark from before the end of the NDA.