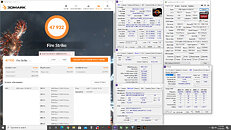

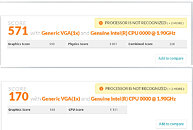

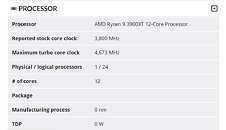

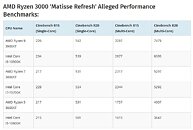

German publication Heise.de got its hands on a Radeon RX 5500 (OEM) graphics card and put it through its test bench. The resulting numbers show exactly what prompted NVIDIA to refresh its entry-level lineup with the GeForce GTX 1650 Super and the GTX 1660 Super. In Heise's testing, the RX 5500 roughly matched the previous-generation RX 580 and NVIDIA's current-gen GTX 1660 (non-Super). Compared to the factory-overclocked RX 580 NITRO+ and GTX 1660 OC, the RX 5500 delivered similar 3DMark Fire Strike performance, scoring 12,111 points against 12,744 points for the RX 580 NITRO+ and 12,525 points for the GTX 1660 OC.

The card was also put through two game tests at 1080p, "Shadow of the Tomb Raider" and "Far Cry 5." In SoTR, the RX 5500 put out 59 fps, behind the 65 fps of the RX 580 NITRO+ and the 69 fps of the GTX 1660 OC. In "Far Cry 5," it scored 72 fps, close to the 75 fps of the RX 580 NITRO+ but further behind the 85 fps of the GTX 1660 OC. It's important to note once again that the RX 580 and GTX 1660 in this comparison are factory-overclocked cards, while the RX 5500 is running at stock speeds. Heise also did some power testing, and found the RX 5500 to have the lowest idle power draw of the three, at 7 W compared to 10 W for the GTX 1660 OC and 12 W for the RX 580 NITRO+. Gaming power draw is similar to that of the GTX 1660, with the RX 5500 pulling 133 W compared to 128 W for the NVIDIA card. This short test shows that the RX 5500 is in the same league as the RX 580 and GTX 1660, and explains why NVIDIA had to make its recent product-stack changes.
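To put the gaps in perspective, the reported figures can be expressed relative to the RX 5500. The short Python sketch below does only that arithmetic on the numbers quoted above; the dictionary layout and the `relative_to` helper are illustrative choices of ours, not anything from Heise's methodology.

```python
# Figures as quoted in the article from Heise.de's testing.
# The data layout and helper name are illustrative assumptions.
results = {
    "3DMark Fire Strike (points)": {
        "RX 5500": 12111, "RX 580 NITRO+": 12744, "GTX 1660 OC": 12525,
    },
    "Shadow of the Tomb Raider (fps)": {
        "RX 5500": 59, "RX 580 NITRO+": 65, "GTX 1660 OC": 69,
    },
    "Far Cry 5 (fps)": {
        "RX 5500": 72, "RX 580 NITRO+": 75, "GTX 1660 OC": 85,
    },
}


def relative_to(baseline, scores):
    """Return each card's score as a percentage of the baseline card's score."""
    base = scores[baseline]
    return {card: round(100 * value / base, 1) for card, value in scores.items()}


# Normalize every test so that the RX 5500 sits at 100%.
relative = {test: relative_to("RX 5500", scores) for test, scores in results.items()}

for test, per_card in relative.items():
    print(test)
    for card, pct in per_card.items():
        print(f"  {card}: {pct}%")
```

Normalized this way, the synthetic test shows the smallest gaps, while the two games show the GTX 1660 OC pulling further ahead than the RX 580 NITRO+ does. Keep in mind that both comparison cards are factory-overclocked, so these percentages likely overstate the gap to reference-clocked models.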