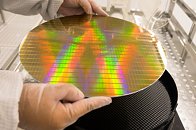

TSMC Starts Shipping its 7nm+ Node Based on EUV Technology

TSMC today announced that its seven-nanometer plus (N7+) process, the industry's first commercially available Extreme Ultraviolet (EUV) lithography technology, is delivering customer products to market in high volume. The N7+ process with EUV technology is built on TSMC's successful 7 nm node and paves the way for 6 nm and more advanced technologies.

The N7+ ramp to volume production is one of the fastest on record. N7+, which began volume production in the second quarter of 2019, is achieving yields similar to those of the original N7 process, which has been in volume production for more than one year.
