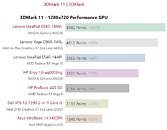

At its October 8 online launch event for the Ryzen 5000 "Zen 3" processors, AMD teased its next flagship graphics card, which slots into the Radeon RX 6000 series. Company CEO Lisa Su referred to this particular SKU as "Big Navi," suggesting it could be the top part of AMD's upcoming client GPU lineup. As part of the teaser, Su held up the reference-design card and shared three performance numbers for it, obtained on a machine powered by a Ryzen 9 5900X "Zen 3" processor. We compared these numbers, obtained at 4K UHD, with our own testing data for the same games to see how the card stacks up against other current-gen cards in its class. Our testing data for one of the games comes from the latest RTX 30-series reviews; details of our test bed can be found here. Our CPU is necessarily different, since the 5900X is unreleased, but we use the highest presets in our testing.

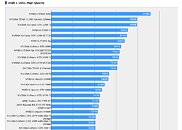

In "Borderlands 3" at 4K, with the "badass" performance preset and the DirectX 12 renderer, AMD claims a frame-rate of 61 FPS. We tested the game with its DirectX 12 renderer in our dedicated performance review (test bed details here). AMD's claimed performance ends up 45.9 percent higher than that of the GeForce RTX 2080 Ti as tested by us, which yields 41.8 FPS on our test bed. The RTX 3080, at 70.3 FPS, ends up roughly 15.2 percent faster than Big Navi. It's important to note that AMD may be using a different or lighter test scene than ours, since we don't use the games' internal benchmark tools and design our own test scenes instead. It's also important to note that we tested Borderlands 3 with DirectX 12 only in the game's launch-day review, and use the DirectX 11 renderer in our regular VGA reviews.
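For readers who want to check the percentages, the arithmetic can be sketched in a few lines of Python. This is only an illustration using the three frame-rates quoted above; the function and variable names are our own and hypothetical, and the result is no more comparable than the underlying numbers, which come from different test scenes and CPUs.

```python
def percent_faster(a_fps: float, b_fps: float) -> float:
    """Return how much faster a_fps is than b_fps, in percent."""
    return (a_fps / b_fps - 1.0) * 100.0


# Frame-rates quoted in the text (Borderlands 3, 4K, "badass", DirectX 12).
big_navi_fps = 61.0     # AMD's claimed figure
rtx_2080_ti_fps = 41.8  # our test bed
rtx_3080_fps = 70.3     # our test bed

# Big Navi (claimed) relative to the RTX 2080 Ti.
print(f"Big Navi vs RTX 2080 Ti: {percent_faster(big_navi_fps, rtx_2080_ti_fps):.1f}%")

# RTX 3080 relative to Big Navi (claimed).
print(f"RTX 3080 vs Big Navi: {percent_faster(rtx_3080_fps, big_navi_fps):.1f}%")
```

Note that the baseline matters: the RTX 3080 being about 15 percent faster than Big Navi is not the same as Big Navi being 15 percent slower than the RTX 3080, since each figure is computed relative to a different denominator.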