Hewlett Packard Enterprise Brings HPE Cray EX and HPE Cray XD Supercomputers to Enterprise Customers

Hewlett Packard Enterprise (NYSE: HPE) today announced it is making supercomputing accessible to more enterprises, helping them harness insights, solve problems and innovate faster, by delivering its world-leading, energy-efficient supercomputers in a smaller form factor and at a lower price point.

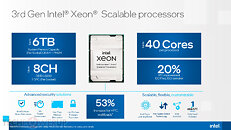

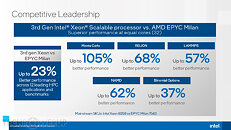

The expanded portfolio includes new HPE Cray EX and HPE Cray XD supercomputers, which are based on HPE's exascale innovation that delivers end-to-end, purpose-built technologies in compute, accelerated compute, interconnect, storage, software, and flexible power and cooling options. The supercomputers provide significant performance and AI-at-scale capabilities to tackle demanding, data-intensive workloads, speed up AI and machine learning initiatives, and accelerate innovation to deliver products and services to market faster.
