NVIDIA Launches UK's Most Powerful Supercomputer

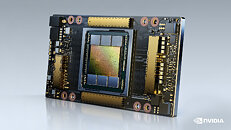

NVIDIA today officially launched Cambridge-1, the United Kingdom's most powerful supercomputer, which will enable top scientists and healthcare experts to combine AI and simulation to accelerate the digital biology revolution and bolster the country's world-leading life sciences industry. Dedicated to advancing healthcare, Cambridge-1 represents a $100 million investment by NVIDIA. Its first projects, with AstraZeneca, GSK, Guy's and St Thomas' NHS Foundation Trust, King's College London and Oxford Nanopore Technologies, include developing a deeper understanding of brain diseases like dementia, using AI to design new drugs and improving the accuracy of finding disease-causing variations in human genomes.

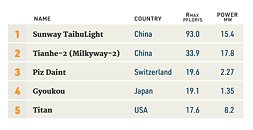

Cambridge-1 brings together decades of NVIDIA's work in accelerated computing, AI and life sciences, with NVIDIA Clara and AI frameworks optimized to take advantage of the entire system for large-scale research. An NVIDIA DGX SuperPOD supercomputing cluster, it ranks among the world's top 50 fastest computers and is powered by 100 percent renewable energy.