Wednesday, October 28th 2020

AMD Announces the Radeon RX 6000 Series: Performance that Restores Competitiveness

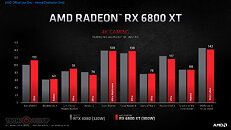

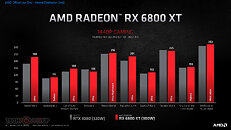

AMD (NASDAQ: AMD) today unveiled the AMD Radeon RX 6000 Series graphics cards, delivering powerhouse performance, incredibly life-like visuals, and must-have features that set a new standard for enthusiast-class PC gaming experiences. Representing the forefront of extreme engineering and design, the highly anticipated AMD Radeon RX 6000 Series includes the AMD Radeon RX 6800 and Radeon RX 6800 XT graphics cards, as well as the new flagship Radeon RX 6900 XT - the fastest AMD gaming graphics card ever developed.

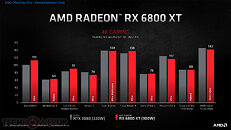

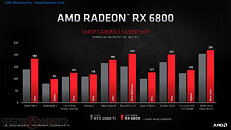

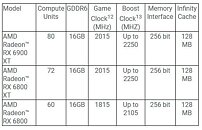

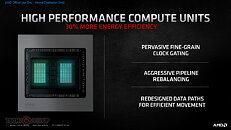

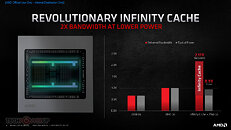

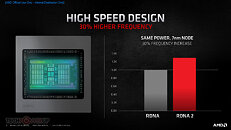

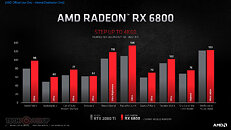

AMD Radeon RX 6000 Series graphics cards are built upon groundbreaking AMD RDNA 2 gaming architecture, a new foundation for next-generation consoles, PCs, laptops and mobile devices, designed to deliver the optimal combination of performance and power efficiency. AMD RDNA 2 gaming architecture provides up to 2X higher performance in select titles with the AMD Radeon RX 6900 XT graphics card compared to the AMD Radeon RX 5700 XT graphics card built on AMD RDNA architecture, and up to 54 percent more performance-per-watt when comparing the AMD Radeon RX 6800 XT graphics card to the AMD Radeon RX 5700 XT graphics card using the same 7 nm process technology. AMD RDNA 2 offers a number of innovations, including applying advanced power saving techniques to high-performance compute units to improve energy efficiency by up to 30 percent per cycle per compute unit, and leveraging high-speed design methodologies to provide up to a 30 percent frequency boost at the same power level. It also includes new AMD Infinity Cache technology that offers up to 2.4X greater bandwidth-per-watt compared to GDDR6-only AMD RDNA-based architectural designs.

"Today's announcement is the culmination of years of R&D focused on bringing the best of AMD Radeon graphics to the enthusiast and ultra-enthusiast gaming markets, and represents a major evolution in PC gaming," said Scott Herkelman, corporate vice president and general manager, Graphics Business Unit at AMD. "The new AMD Radeon RX 6800, RX 6800 XT and RX 6900 XT graphics cards deliver world class 4K and 1440p performance in major AAA titles, new levels of immersion with breathtaking life-like visuals, and must-have features that provide the ultimate gaming experiences. I can't wait for gamers to get these incredible new graphics cards in their hands."

Powerhouse Performance, Vivid Visuals & Incredible Gaming Experiences

AMD Radeon RX 6000 Series graphics cards support high-bandwidth PCIe 4.0 technology and feature 16 GB of GDDR6 memory to power the most demanding 4K workloads today and in the future. Key features and capabilities include:

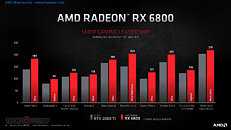

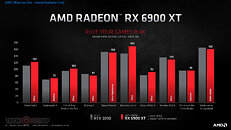

Powerhouse Performance

- AMD Infinity Cache - A high-performance, last-level data cache suitable for 4K and 1440p gaming with the highest level of detail enabled. 128 MB of on-die cache dramatically reduces latency and power consumption, delivering higher overall gaming performance than traditional architectural designs.

- AMD Smart Access Memory - An exclusive feature of systems with AMD Ryzen 5000 Series processors, AMD B550 and X570 motherboards and Radeon RX 6000 Series graphics cards. It gives AMD Ryzen processors greater access to the high-speed GDDR6 graphics memory, accelerating CPU processing and providing up to a 13-percent performance increase on an AMD Radeon RX 6800 XT graphics card in Forza Horizon 4 at 4K when combined with the new Rage Mode one-click overclocking setting.

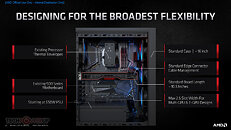

- Built for Standard Chassis - With a length of 267 mm and two standard 8-pin power connectors, and designed to operate with existing enthusiast-class 650 W-750 W power supplies, gamers can easily upgrade their existing large to small form factor PCs without additional cost.

- DirectX 12 Ultimate Support - Provides a powerful blend of raytracing, compute, and rasterized effects, such as DirectX Raytracing (DXR) and Variable Rate Shading, to elevate games to a new level of realism.

- DirectX Raytracing (DXR) - With a high-performance, fixed-function Ray Accelerator engine added to each compute unit, AMD RDNA 2-based graphics cards are optimized to deliver real-time lighting, shadow and reflection realism with DXR. When paired with AMD FidelityFX, which enables hybrid rendering, developers can combine rasterized and ray-traced effects to ensure an optimal combination of image quality and performance.

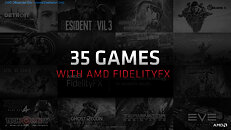

- AMD FidelityFX - An open-source toolkit for game developers available on AMD GPUOpen. It features a collection of lighting, shadow and reflection effects that make it easier for developers to add high-quality post-process effects that make games look beautiful while offering the optimal balance of visual fidelity and performance.

- Variable Rate Shading (VRS) - Dynamically reduces the shading rate for different areas of a frame that do not require a high level of visual detail, delivering higher levels of overall performance with little to no perceptible change in image quality.

- Microsoft DirectStorage Support - Future support for the DirectStorage API enables lightning-fast load times and high-quality textures by eliminating storage API-related bottlenecks and limiting CPU involvement.

- Radeon Software Performance Tuning Presets - Simple one-click presets in Radeon Software help gamers easily extract the most from their graphics card. The presets include the new Rage Mode stable overclocking setting that takes advantage of extra available headroom to deliver higher gaming performance.

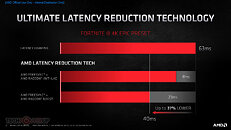

- Radeon Anti-Lag - Significantly decreases input-to-display response times and offers a competitive edge in gameplay.

In the coming weeks, AMD will release a series of videos from its ISV partners showcasing the incredible gaming experiences enabled by AMD Radeon RX 6000 Series graphics cards in some of this year's most anticipated games. These videos can be viewed on the AMD website.

- DIRT 5 - October 29

- Godfall - November 2

- World of Warcraft: Shadowlands - November 10

- RiftBreaker - November 12

- Far Cry 6 - November 17

- AMD Radeon RX 6800 and Radeon RX 6800 XT graphics cards are expected to be available from global etailers/retailers and on AMD.com beginning November 18, 2020, for $579 USD SEP and $649 USD SEP, respectively. The AMD Radeon RX 6900 XT is expected to be available December 8, 2020, for $999 USD SEP.

- AMD Radeon RX 6800 and RX 6800 XT graphics cards are also expected to be available from AMD board partners, including ASRock, ASUS, Gigabyte, MSI, PowerColor, SAPPHIRE and XFX, beginning in November 2020.

394 Comments on AMD Announces the Radeon RX 6000 Series: Performance that Restores Competitiveness

Laughable World of Warcraft effects barely anyone notices (bar framerate dip)?

Note how, despite DXR being a standard DirectX API, Jensen Huang still managed to shit all over the place and make "nvidia sponsored" games (at least) use green proprietary extensions.

I guess he doesn't remember how he killed OpenGL.

Pros for AMD:

- Competitive in rasterization now

- Can compete on price

Cons for AMD:

- 1st gen RT, so it's slower than Ampere's RT

- No DLSS, which gives a huge performance advantage in the second most popular game in the world (Fortnite)

- NVENC is far superior to AMD's implementation. (OBS Streaming software has native support for Nvidia but not AMD)

- Nvidia Broadcast is a huge asset to content creators, no equivalent from AMD

- Way behind on professional applications due to Nvidia's broad CUDA support.

My choice is clear.

Also, for me, I don't care about RT or DLSS (I do think DLSS is nice, but not enough games really use it). I don't care about streaming, I don't care about Broadcast for the same reason, and I don't care about professional stuff.

Just a gamer guy who likes to game at high refresh rates at 1440p. AMD is 100% for me if I can find it in stock on launch day, big if. Heh.

6800 is said to be 18% faster than 2080Ti.

It has twice the RAM of 3070.

1) You don't know the RT performance numbers. It's likely weaker, but we literally have nfi atm. Also, with RT still all being hybrid... it's a feature, not a requirement.

2) AMD mentioned its DLSS alternative; it hasn't detailed it yet, however.

3) No info has been provided about the encoder except which formats it supports, and it supports all current standards.

4) If Nvidia Broadcast is a value add for you, that's great. The majority of us just want to play games, not broadcast our warts to the world. See above regarding "feature".

5) CUDA is somewhat of a chicken and egg; it became a de facto standard due to better initial support. However, "massively better" is a misnomer, and it really depends on which professional task you are doing, as more things look to ditch CUDA (a trend that has started to pick up pace over the last year).

tl;dr making definitive statements is crass.

Reference cards these days have the perfect VRM for most users, whether that's water, air or even LN2, as there is hardly any condition these days where you need a larger VRM than the reference design already provides. You won't be overclocking so much that you need the VRM working at its full capacity either. The process node or the limitations of the silicon itself won't allow it anyway. And knowing AMD, there's probably a current limit on those chips as well to keep them from degrading.

It's why you see game / boost clocks: similar to Zen CPUs, they allow higher clocks when light workloads are running and go down when a heavy workload is applied. All this is polled every 25 ms at the hardware level to give you the best clocks possible in relation to temperature and power consumption. This is obviously a blessing for consumers, but it takes some of the fun out of OC'ing if you plan to run on water. AMD is already maxing out the silicon for you; you have to go subzero to get more out of it.

And if it's not what you need in terms of noise, slap a watercooler on and you're done. Watercooling always has better efficiency than air, to be honest, and because of that you could hold the boost clocks longer. I'm glad AMD brings not one but two generations' leap forward and competes with the 3090, which costs $1500 compared to $999. 24 GB of VRAM isn't a real reason to pay $500 more, considering Nvidia pays like $4 per extra memory chip. The margins are huge.

We know nothing about RT performance, so we should wait for the reviews before drawing any conclusions.

When did they speak about X570???

The problem is: are those 16 GB somehow useful at the target 1440p resolution? Hardly...

Ah, OK, so not only X570...

2) Again, AMD's encoder is an unknown; I can only go by recent history, and Nvidia's solution is proven to be superior at this moment.

3) RT can only be appreciated by someone who values realistic graphics, for the rest, it's just a feature.

Even if I were a pure gamer, there is no reason for me to go AMD. When I pay almost $600-$700 for a GPU, a $50 difference is nothing for these added features, not to mention that AMD has to regain some credibility after its driver problems over the last year.

And seriously, AMD driver problems? When I got my 2080 Ti I was BSODing, for fuck's sake. Both vendors have had, and continue to have, issues at times.

I have tried all the different hardware encoders out there, and none of them was good. To be honest, they're all shit! Sorry, if you want quality, you have to do it in software. So no reason to go green for me there. The only reason to go for NVENC would be if you only had a tiny 4- or 6-core CPU that couldn't handle software encoding. But if you only had such a low-tier CPU, you wouldn't buy a 3080/6800 XT anyway.

DLSS is absolutely overhyped. First of all, there are only a handful of games that support it. Then the difference from native rendering is clearly visible, even with 2.0. It's just a blur. And, last but not least, it only makes sense for absolute hardcore esports guys, because the 3080 and 6800 XT seem to have no problems rendering at 4K/60 fps or 1440p/120 fps, so it's really only a thing for competitive 4K/120 fps+ gamers.

This thread though ... damn. I mean, even though we don't have third-party review numbers yet, so we can't trust the numbers given 100%, we are still seeing AMD promise a bigger jump in performance/W than Nvidia delivered with Maxwell. (And given the risk of shareholder lawsuits, promises need to be reasonably accurate.) Does nobody else understand just how insane that is? The GPU industry hasn't seen anything like this for a decade or more. And they aren't even doing a "best case vs. worst case" comparison, but comparing the (admittedly worst case) 5700 XT against the seemingly overall representative 6800 XT - if they were going for a skewed benchmark, the ultra-binned 6900 XT at the same power would have been the obvious choice.

Yes, the prices could be lower (and given the use of cheaper memory technology and a narrower bus than Nvidia, AMD probably has the margins to do that if competition necessitates it, even with 2x the memory). For now they look to either deliver a bit less performance for 2/3 the price, matching or slightly better performance for $50 less, or somewhat better performance for $79 more. From a purely competitive standpoint, that sounds pretty okay to me. From an "I want the best GPU I can afford" standpoint it would obviously be better if they went into a full price war, but that is highly unlikely in an industry like this. This nonetheless means that, for the first time in a long time, we will have a competitive GPU market across the entire price range.

I'll be mighty interested in a 6800 XT (after seeing 3rd party reviews, obviously), but just as interested in seeing how this pans out across the rest of the lineup. I'm predicting a very interesting year for ~$2-400 GPUs after this: given that $5-600 GPUs are now matching the value of previous-gen $400 GPUs, we should get a noticeable bump in perf/$ in that price range.

It remains to be seen if there will be reasonable quantities of the 6800 XT, of course. But AMD's move to build in that cache instead of using exotic, hardly available GDDR6X memory, and its use of TSMC's 7nm, of which AMD seems to have good supply, seem to point to better availability at launch. We will see.

Hrmmmm now the hard choices... Amd or Nvidia???

The 6800 (non-XT) looks good, and I think Nvidia will answer it with an RTX 3080 LE :P

There is a DLSS equivalent in the form of Super Resolution. We don't know how it works, but it is there, and we will have to wait and see what it brings.

You don't know if it will be slower than Ampere's RT. The 6000 series will use the Microsoft DXR API with a Ray Accelerator in every CU.

Your way of perceiving this confuses me.

You way of perceiving this makes me confused.

CUDA support :) , NVENC :)

It's just the same way if I'd said NV sucks cause it doesn't have AMD Cores.