Friday, January 29th 2021

AMD Files Patent for Chiplet Machine Learning Accelerator to be Paired With GPU, Cache Chiplets

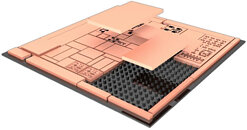

AMD has filed a patent describing an MLA (Machine Learning Accelerator) chiplet that can be paired with a GPU (such as RDNA 3) and a cache unit (likely a version of AMD's Infinity Cache, which debuted with RDNA 2, moved off the GPU die) to create what AMD is calling an "APD" (Accelerated Processing Device). The design would thus enable AMD to create a chiplet-based accelerator whose sole function is to speed up machine learning workloads - specifically, matrix multiplication. This would enable capabilities not unlike those available through NVIDIA's Tensor cores.

This could give AMD a modular way to add machine-learning capabilities to several of their designs through the inclusion of such a chiplet, and might be AMD's way of achieving hardware acceleration of a DLSS-like feature. It would avoid the shortcomings of implementing the accelerator in the GPU die itself - a larger die, and with it increased cost and reduced yields - while at the same time enabling AMD to deploy it in products other than GPUs. The patent describes the possibility of different manufacturing technologies being employed in the chiplet-based design - harking back to the I/O dies in Ryzen CPUs, which are manufactured on a 12 nm process rather than the 7 nm one used for the core chiplets. The patent also describes acceleration of cache requests from the GPU die to the cache chiplet, and on-the-fly usage of that chiplet either as actual cache or as directly-addressable memory.
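The die-area argument can be illustrated with a rough sketch. The snippet below assumes a simple Poisson yield model (yield = exp(-area x defect density)), which is a common first-order approximation and not something taken from the patent. The die areas and defect density are hypothetical placeholders rather than AMD figures, and packaging cost and die-to-die interconnect overhead are ignored.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Expected fraction of defect-free dies under a simple Poisson model."""
    return math.exp(-area_mm2 * defects_per_mm2)


def silicon_per_good_die(area_mm2: float, defects_per_mm2: float) -> float:
    """Wafer area (mm^2) consumed on average to obtain one working die."""
    return area_mm2 / poisson_yield(area_mm2, defects_per_mm2)


# Hypothetical numbers, chosen only for illustration (not AMD data).
GPU_AREA_MM2 = 500.0
MLA_AREA_MM2 = 100.0
DEFECTS_PER_MM2 = 0.001  # i.e. 0.1 defects per cm^2

# Option A: the accelerator is built into one larger GPU die.
monolithic = silicon_per_good_die(GPU_AREA_MM2 + MLA_AREA_MM2, DEFECTS_PER_MM2)

# Option B: GPU and accelerator are separate chiplets, each yielding on its own.
chiplets = (
    silicon_per_good_die(GPU_AREA_MM2, DEFECTS_PER_MM2)
    + silicon_per_good_die(MLA_AREA_MM2, DEFECTS_PER_MM2)
)

print(f"monolithic: {monolithic:.1f} mm^2 of wafer per good product")
print(f"chiplets:   {chiplets:.1f} mm^2 of wafer per good product")
```

Under this model the split design always consumes less wafer area per working product, because yield falls off exponentially with die area. Whether that saving survives real packaging costs is exactly the trade-off the chiplet approach has to win.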

Sources:

Free Patents Online, via Reddit

28 Comments on AMD Files Patent for Chiplet Machine Learning Accelerator to be Paired With GPU, Cache Chiplets

Encode and decode hardware is widely used; the only issue is how much die space it takes from the GPU/APU die. Once MCM packaging becomes the trend for GPUs, a dedicated media chiplet might be useful, but it all depends on how much it costs to separate and package it, on power consumption, and on the space it takes up on the PCB. If the die area saved outweighs the cost of moving it to another chiplet, then they might do it.

FPGAs are normally expensive. For fixed functions like these it's better to keep things as they currently are; FPGAs are also less space- and power-efficient than a fixed-function ASIC.

An FPGA should be optional in the first implementation for CDNA; I don't think it will be added to RDNA until maybe later generations. FPGAs are mainly used in professional scenarios for now; later they will become mainstream and cheap enough that AMD can put one on RDNA too. Then it can be used in some apps to accelerate specific functions. For example, when a new codec appears, encoding software can reprogram the FPGA to accelerate the encoding without waiting for an ASIC to be developed, which can take a few years. An ASIC also requires you to buy a new GPU, while the FPGA can add the function with no need to buy another card.