Wednesday, May 12th 2021

AMD Radeon RX 6600 XT, 6600 to Feature Navi 23 Chip With up to 2048 Stream Processors

AMD is preparing to round out its RX 6000 series lineup with the upcoming RX 6600 XT and RX 6600, introducing true midrange GPUs to its latest-generation RDNA2 architecture. According to recent leaks, both graphics cards should feature AMD's Navi 23 chip, another full chip design, instead of making do with a cut-down Navi 22 (even though that chip still only powers one graphics card in the AMD lineup, the RX 6700 XT).

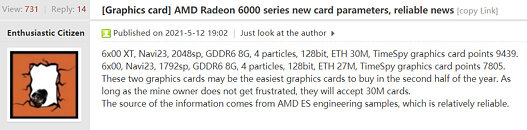

According to the leak, the RX 6600 XT should feature 2048 stream processors and 8 GB of GDDR6 memory over a 128-bit memory bus. The RX 6600, on the other hand, is said to feature a cut-down Navi 23, with only 1792 stream processors enabled out of the original silicon design, whilst offering the same 8 GB GDDR6 over a 128-bit memory bus. There are even some benchmark scores to go with these leaks: supposedly, the RX 6600 XT scores 9,439 points in 3DMark Time Spy (Graphics), while the RX 6600 scores 7,805 points. Those scores place these cards in the same ballpark as the RDNA-based RX 5700 XT and RX 5700. The cards are expected to feature a further cut-down 32 MB of Infinity Cache, half of the RX 6700 XT's 64 MB. With die size estimated at 236 mm², AMD is essentially delivering the same performance with 15 mm² less area than the RX 5700 XT's Navi 10, whilst shaving some 45 W from the card's TDP (225 W for the RX 5700 XT, and an estimated 180 W for the RX 6600 XT).
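To put the leaked figures side by side, here is a minimal Python sketch that works only from numbers quoted in the paragraph above. The variable names are illustrative, and the Navi 10 die size is derived from the article's "15 mm² less area" statement rather than taken from a separate source. All inputs are leak estimates, so the results are rough indications at best.

```python
# Figures quoted in the article (leaked or estimated, not official specs).
time_spy_6600_xt = 9439    # 3DMark Time Spy (Graphics), RX 6600 XT
time_spy_6600 = 7805       # 3DMark Time Spy (Graphics), RX 6600
die_navi23_mm2 = 236       # estimated Navi 23 die size
die_delta_mm2 = 15         # area saving quoted against the RX 5700 XT
tdp_5700_xt_w = 225        # RX 5700 XT TDP
tdp_6600_xt_w = 180        # estimated RX 6600 XT TDP

# Relative score of the cut-down card versus the full chip.
score_ratio = time_spy_6600 / time_spy_6600_xt

# Die size of the older chip implied by the quoted 15 mm^2 difference.
implied_navi10_mm2 = die_navi23_mm2 + die_delta_mm2
area_saving_pct = die_delta_mm2 / implied_navi10_mm2 * 100

# Power saving in absolute and relative terms.
tdp_saving_w = tdp_5700_xt_w - tdp_6600_xt_w
tdp_saving_pct = tdp_saving_w / tdp_5700_xt_w * 100

print(f"RX 6600 vs RX 6600 XT score: {score_ratio:.1%}")
print(f"Die area saving: {die_delta_mm2} mm^2 ({area_saving_pct:.1f}%)")
print(f"TDP saving: {tdp_saving_w} W ({tdp_saving_pct:.1f}%)")
```

On these numbers, the power saving is proportionally much larger than the area saving, which is what the article's comparison with the RX 5700 XT implies.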

Source:

Videocardz

45 Comments on AMD Radeon RX 6600 XT, 6600 to Feature Navi 23 Chip With up to 2048 Stream Processors

Maybe I missed something about the new Navi 2x parts on N7P but I can't find anything beyond pre-release speculation about the CPUs being on N7P.

I picked up a 5700xt Raw II last year in April for my new build at around $370 from Best Buy. 5700XTs are currently going for around $1k on eBay.

Assuming that the performance of the 6600xt and 6600 is comparable to, if not slightly better than, that of their forebears, I can't see AMD pricing them that low while still maintaining the 6700xt at its MSRP. That's too big of a gulf, and if their performance is significantly closer to the 6700xt than the 5700xt is, pricing that low definitely wouldn't make sense.

The clearest information probably comes from Dr Ian Cutress speaking to AMD directly to clarify that N7+ as shown in AMD's Zen3 product slides referred to "better than N7FF" and not actually the coincidentally named N7FF+ EUV process (3rd gen) at TSMC. That leaves only one possibility - N7P:

[INDENT]www.anandtech.com/show/15589/amd-clarifies-comments-on-7nm-7nm-for-future-products-euv-not-specified[/INDENT]

There was also an article about one of the TSMC investor calls about 18 months ago now that mentioned they were upgrading most of their 1st gen N7FF production to 2nd gen after successful launch of their 2nd gen process. If you connect the dots I believe that means that TSMC converted existing N7FF production into N7P as those are the node names for 1st gen and 2nd gen 7nm at TSMC respectively. I don't know if TSMC has any 1st gen fabs left, or whether all 1st gen fabs are now 2nd gen. All I can say for certain is that a lot of the 1st gen production capability was removed, making any remaining 1st gen much more scarce than it was for the Zen2/Navi launch period if it even exists at all now.

As I said, there's no direct statement, but there are credible sources like Ian Cutress and TSMC's own investor calls.

I actually think AMD could combine mixed GPU usage with a low end card like this along with an APU and get some reasonable results, using one for rendering and the other for post process improvements and upscaling. The sooner we get a real ecosystem supporting secondary GPU usage for post process IQ improvements the better. They could be discrete or APU based, but a lot of people have access to a secondary slower GPU these days that could be put to better use. It's mostly untapped potential waiting to be exploited, though for 3D modeling spare GPU resources have been put to use for a while. I'm a real fan of what Lucid Hydra aimed to achieve, but for technical reasons couldn't pull off well enough at that point in time and on their budget. The mCable is another example as well, and if they can do that with a tiny lil dongle chip, obviously more advanced post processing can be achieved by something like an APU or a spare older discrete GPU.

I believe the PCIe spec only allows for 66W on the 12V rail through the PCIe slot, and remember that the power draw of graphics cards tends to have a bit of fluctuation.

There is nothing comparable to the quality of Nvidia's official Linux driver.

So what's the issue with your RX 560? Is it worn out? Because I don't think any new low-end GPU is going to offer that much of a performance uplift.

And since you are focusing so much on TDP, what's your restriction? Is it cooling, PSU?

Most of today's GPUs do consume very little during non-gaming, so is it really a problem to put in a 150W GPU? (if you could find a low-profile version)

[INDENT]1) Be more interested to try another brand. Hell, that could even be Intel in the near future.[/INDENT]

[INDENT]2) Give up and switch to consoles or other HTPC/entry-level alternatives.[/INDENT]

[INDENT]3) Give up and buy something on the used market instead.[/INDENT]

All three of those scenarios are lost sales and two of them are lost future customers too.

On a side note, 5600XT debuted at $280...

For gaming the 5700-series really were the kings of that generation. At $400 they basically killed it for anyone except the 1% who were in the market for a $1000 GPU. No, they didn't have RTX but we're now almost 4 years on from RTX launch and reviewers/streamers/regular people are still undecided on whether it's any good, with an extremely limited number of supported titles. I have bought three RTX cards for personal use and still have two of them and not once have I ever found the RTX features to be of real value; an interesting novelty that's worth looking at? Sure, but not something I've ever left turned on for more than a couple of hours as the tradeoffs in performance AND texture quality just disappoint me too much to accept (DLSS 2.0 is good but I can count the titles where it's better than being left off on the fingers of one hand).

The 5700-series was priced to end Nvidia's monopoly pricing on higher-end GPUs and it succeeded. Not only did leaked performance/pricing of the 5700/5700XT force Nvidia to slash their RTX-card prices by around 30% with the Super re-brand of the RTX lineup, it also made a mockery of Nvidia's overpriced 16-series, because the performance/$ of the 5700 was better than the lower-end 16-series card. When the 5600XT came out at the same price as the 1660Ti it was pretty clear Nvidia was ripping you off.

Turing was, both at launch, and after the Super mid-life refresh, a rip-off.

It's as if someone was biased or something... :D