Monday, July 5th 2021

AMD 4700S Desktop Kit Features PlayStation 5 SoC Without iGPU

Previously, we assumed that the AMD 4700S desktop kit was based on the Xbox Series X APU. Today, Bodnara, who managed to get access to one of these units, has made some interesting discoveries. The chip powering the system is actually the PlayStation 5 SoC, which uses the AMD Zen 2 architecture with 8 cores and 16 threads that can boost up to 3.2 GHz. The tested board features SK Hynix GDDR6 memory running at 14 Gbps, placed on the back side of the board. The APU is paired with the AMD A77E Fusion Controller Hub (FCH), the same FCH that accompanied the Xbox One "Durango" SoC, which is what previously led us to believe that the AMD 4700S was derived from an Xbox Series X system.
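As a rough illustration of what the 14 Gbps figure means, theoretical peak GDDR6 bandwidth is the per-pin data rate multiplied by the bus width, divided by eight bits per byte. The bus width of this kit is not stated here, so the 128-bit and 256-bit values below are assumptions chosen for comparison (the PlayStation 5 console itself uses a 256-bit bus). The function name is hypothetical, and real-world throughput will be lower than these peaks.

```python
def gddr_peak_bandwidth_gb_s(data_rate_gbps_per_pin: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: per-pin rate (Gbit/s) x bus width (bits) / 8 bits per byte."""
    return data_rate_gbps_per_pin * bus_width_bits / 8


# 14 Gbps per pin comes from the article; the bus widths are illustrative assumptions.
for width in (128, 256):
    print(f"{width}-bit @ 14 Gbps: {gddr_peak_bandwidth_gb_s(14, width):.0f} GB/s")
# prints:
# 128-bit @ 14 Gbps: 224 GB/s
# 256-bit @ 14 Gbps: 448 GB/s
```

Halving the bus width halves the peak figure at the same data rate, which is why the bus width matters as much as the 14 Gbps rating.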

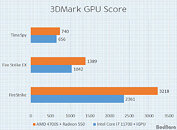

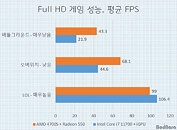

The APU's integrated graphics are disabled, even though it is the same RDNA 2 GPU variant used in the PlayStation 5. Out of the box, the system ships with a discrete GPU in the form of the Radeon 550, and this is the configuration the Bodnara team tested. You can find images of the system and some performance results below.

Performance:

Sources:

Bodnara, via VideoCardz

33 Comments on AMD 4700S Desktop Kit Features PlayStation 5 SoC Without iGPU

I'm curious how that RAM performs vs. DDR4/5, however.

Like maybe there was a huge order for dev kits that got cancelled and they thought to sell the whole lot off, but had to nerf the GPU first?

The second slot only runs at x4, which would have been for an NVMe slot in the console.

Even the full-fat RX550 was a lame duck, with performance close to the GT1030 but twice the power draw and no encoder to match NVENC. At this performance level, power consumption and encoder performance are kind of a big deal, because the actual 3D compute/gaming performance is bad enough not to be worth considering over an IGP (unless, of course, you don't even HAVE an IGP, as in this case).

I know how powerful my 5800X can be in a 95W TDP, so I can only dream of what would be possible with laptop-optimised parts.

This 4700S PS5 Zen 2 (~38.5mm²) is heavily downgraded to 128-bit, though; if anything has an impact on games, it should be that.

What they should do is integrate a 45W Zen 3 shrunk to 30mm² on 5nm, an on-die RX 6900/RTX 3090-class GPU shrunk to 200mm², and a fast NVMe on the back side.

And call it a day, or a PS6 Pro. Can't wait for the PS6 Pro.

Missing the most important component... the iGPU...

The GT1030 lacks the Turing NVENC, but I thought it had the older-generation Pascal NVENC? I wouldn't know, because the 1030 is a turd that I've never purchased; happy to stand corrected if I'm wrong.

Edit:

Just looked it up: the 1050 is the first Pascal card with NVENC. I think I was misremembering the controversy surrounding the 1650 getting short-changed with the previous-gen NVENC.

It's less of a mouthful than SoCmGPUfclr (SoC missing GPU for complex licensing reasons)

* Intellectual is put in quotes, property is put in quotes, then the whole phrase is put in quotes once again.

There is, however, a high latency penalty for using GDDR6 vs. DDR4.

Furthermore, it would be cool if the thing could be reverse engineered, perhaps with an HDMI output soldered on and working VGA.