

Intel 15th-Generation Arrow Lake-S Could Abandon Hyper-Threading Technology

- Thread starter AleksandarK

- Start date

- Joined

- Feb 18, 2005

- Messages

- 5,847 (0.81/day)

- Location

- Ikenai borderline!

| System Name | Firelance. |

|---|---|

| Processor | Threadripper 3960X |

| Motherboard | ROG Strix TRX40-E Gaming |

| Cooling | IceGem 360 + 6x Arctic Cooling P12 |

| Memory | 8x 16GB Patriot Viper DDR4-3200 CL16 |

| Video Card(s) | MSI GeForce RTX 4060 Ti Ventus 2X OC |

| Storage | 2TB WD SN850X (boot), 4TB Crucial P3 (data) |

| Display(s) | 3x AOC Q32E2N (32" 2560x1440 75Hz) |

| Case | Enthoo Pro II Server Edition (Closed Panel) + 6 fans |

| Power Supply | Fractal Design Ion+ 2 Platinum 760W |

| Mouse | Logitech G602 |

| Keyboard | Razer Pro Type Ultra |

| Software | Windows 10 Professional x64 |

> Which is also impossible.

Why?

- Joined

- Aug 30, 2006

- Messages

- 7,221 (1.08/day)

| System Name | ICE-QUAD // ICE-CRUNCH |

|---|---|

| Processor | Q6600 // 2x Xeon 5472 |

| Memory | 2GB DDR // 8GB FB-DIMM |

| Video Card(s) | HD3850-AGP // FireGL 3400 |

| Display(s) | 2 x Samsung 204Ts = 3200x1200 |

| Audio Device(s) | Audigy 2 |

| Software | Windows Server 2003 R2 as a Workstation now migrated to W10 with regrets. |

Thread priorities are determined by the admin/OS, potentially hundreds if not thousands of them. It would be a nightmare to then have to "code forward" all of that to the CPU, with all the thread telemetry that would need to be kept, and then add VMs on top of that. How would you manage priorities over VMs? Nasty, plus security issues.

Thread scheduling has become a nightmare with asymmetric CPU architecture. Mixing that between OS and CPU sounds like a recipe calling for too many cooks.


Last edited:

- Joined

- Apr 19, 2018

- Messages

- 1,227 (0.51/day)

| Processor | AMD Ryzen 9 5950X |

|---|---|

| Motherboard | Asus ROG Crosshair VIII Hero WiFi |

| Cooling | Arctic Liquid Freezer II 420 |

| Memory | 32Gb G-Skill Trident Z Neo @3806MHz C14 |

| Video Card(s) | MSI GeForce RTX2070 |

| Storage | Seagate FireCuda 530 1TB |

| Display(s) | Samsung G9 49" Curved Ultrawide |

| Case | Cooler Master Cosmos |

| Audio Device(s) | O2 USB Headphone AMP |

| Power Supply | Corsair HX850i |

| Mouse | Logitech G502 |

| Keyboard | Cherry MX |

| Software | Windows 11 |

I have a 16 core 32 thread CPU. Can somebody tell me what the point of SMT is for a CPU like this? Genuine question.

- Joined

- Feb 15, 2019

- Messages

- 1,659 (0.79/day)

| System Name | Personal Gaming Rig |

|---|---|

| Processor | Ryzen 7800X3D |

| Motherboard | MSI X670E Carbon |

| Cooling | MO-RA 3 420 |

| Memory | 32GB 6000MHz |

| Video Card(s) | RTX 4090 ICHILL FROSTBITE ULTRA |

| Storage | 4x 2TB Nvme |

| Display(s) | Samsung G8 OLED |

| Case | Silverstone FT04 |

> Tiles, chiplets?

They have done the same thing in the Ultra mobile series CPUs, and those have HT enabled with no problem.

> I have a 16 core 32 thread CPU. Can somebody tell me what the point of SMT is for a CPU like this? Genuine question.

It's for applications and multitasking that would benefit from 32 threads being fully loaded. If you don't have that use case, a 16/32 CPU was not the right CPU for you.

If you want to know what applications can benefit from 32 threads, look at CPU reviews with benchmarks that show increased performance as thread count goes up.

As for multitasking: streaming, playing a game, voice chat and rendering all at the same time will definitely load all 32 threads. Someone recording a team-play game session live for Twitch is an example.

Lots of background tasks and editing a photo with complex filters while watching a 4K movie on another screen is another example that will load up 32 threads.
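For anyone who wants to check what their own machine exposes, here is a minimal sketch of telling physical cores apart from SMT threads. It assumes sibling lists in the format Linux publishes under `/sys/devices/system/cpu/cpuN/topology/thread_siblings_list` (for example `0,16` or `0-1`). The helper names `parse_cpu_list` and `count_cores` are made up for illustration, and the sample data is synthetic rather than read from real hardware.

```python
def parse_cpu_list(text):
    """Parse a Linux-style CPU list such as "0,16" or "0-3,8" into a set of ints."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return cpus


def count_cores(sibling_lists):
    """Return (physical_cores, logical_threads).

    `sibling_lists` holds one sibling-list string per logical CPU.
    Logical CPUs that report the same sibling set share one physical core.
    """
    groups = {frozenset(parse_cpu_list(s)) for s in sibling_lists}
    logical = len(set().union(*groups)) if groups else 0
    return len(groups), logical


# Synthetic 16-core/32-thread layout: logical CPU i is paired with i + 16.
smt_on = [f"{i % 16},{i % 16 + 16}" for i in range(32)]
# Same chip with SMT disabled: every logical CPU is its own core.
smt_off = [str(i) for i in range(16)]

cores_on, threads_on = count_cores(smt_on)
cores_off, threads_off = count_cores(smt_off)
```

Either way the core count is 16; SMT only changes how many hardware threads the OS sees on top of those cores.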

- Joined

- May 3, 2019

- Messages

- 2,099 (1.03/day)

| System Name | BigRed |

|---|---|

| Processor | I7 12700k |

| Motherboard | Asus Rog Strix z690-A WiFi D4 |

| Cooling | Noctua D15S chromax black/MX6 |

| Memory | TEAM GROUP 32GB DDR4 4000C16 B die |

| Video Card(s) | MSI RTX 3080 Gaming Trio X 10GB |

| Storage | M.2 drives WD SN850X 1TB 4x4 BOOT/WD SN850X 4TB 4x4 STEAM/USB3 4TB OTHER |

| Display(s) | Dell s3422dwg 34" 3440x1440p 144hz ultrawide |

| Case | Corsair 7000D |

| Audio Device(s) | Logitech Z5450/KEF uniQ speakers/Bowers and Wilkins P7 Headphones |

| Power Supply | Corsair RM850x 80% gold |

| Mouse | Logitech G604 lightspeed wireless |

| Keyboard | Logitech G915 TKL lightspeed wireless |

| Software | Windows 10 Pro X64 |

| Benchmark Scores | Who cares |

Be interesting to see first tests of these sans HT CPUs

Some people seem to confuse an HT thread with a real core, and it is not one. Nothing wrong with a 16-core CPU with no HT; HT does not add that much really. A 16-core CPU is just that, not a 32-core.

- Joined

- Jan 22, 2024

- Messages

- 86 (0.28/day)

| Processor | 7800X3D |

|---|---|

| Cooling | Thermalright Peerless Assasin 120 |

| Memory | 32GB at 6000/30 |

| Video Card(s) | 7900 XT soon to be replaced by 4080 Super |

| Storage | WD Black SN850X 4TB |

| Display(s) | 1440p 360 Hz IPS + 34" Ultrawide 3440x1440 165 Hz IPS ... Hopefully going OLED this year |

I think Intel knows what they are doing here. Can't wait to see Arrow Lake vs Zen 5.

If Intel's new process node has lowered power usage, then Intel is fully back in business again.

HT/SMT never were that important to begin with.

> I think Intel knows what they are doing here. Can't wait to see Arrow Lake vs Zen 5.
>
> If Intel's new process node has lowered power usage, then Intel is fully back in business again.
>
> HT/SMT never were that important to begin with.

It's also possible Intel is leaving the high end desktop CPU business, much like it is rumored that AMD is leaving the high end desktop GPU business. Isn't wanton speculation driven by biased brand loyalty wonderful?

- Joined

- Jan 22, 2024

- Messages

- 86 (0.28/day)

| Processor | 7800X3D |

|---|---|

| Cooling | Thermalright Peerless Assasin 120 |

| Memory | 32GB at 6000/30 |

| Video Card(s) | 7900 XT soon to be replaced by 4080 Super |

| Storage | WD Black SN850X 4TB |

| Display(s) | 1440p 360 Hz IPS + 34" Ultrawide 3440x1440 165 Hz IPS ... Hopefully going OLED this year |

> It's also possible Intel is leaving the high end CPU business, much like it is rumored that AMD is leaving the high end GPU business. Isn't wanton speculation driven by biased brand loyalty wonderful?

Intel has the best overall CPUs in the desktop segment IMO. Performance is not Intel's problem, power usage is. With AMD you have to choose depending on your needs.

I bought the 7800X3D because it's the best gaming CPU and uses low power, but for actual work it's not impressive, and the 13700K/14700K, which is priced similarly, will destroy my chip in most stuff outside of gaming while only being a few percent behind in gaming.

The 7950X3D was supposed to be the sweet spot with good results regardless of workload; however, it loses to the 7800X3D in gaming and is beaten by Intel in content creation. The 7950X beats it as well.

I hope AMD will address this with Zen 5. Hopefully the 3D models won't be too far off the initial release, and hopefully all the cores will get 3D cache this time. The 7900X3D was kinda meh, and the 7950X3D should have 16 cores with 3D cache for sure with that price tag. In a perfect world there would be no 3D parts. The CPUs should be good overall regardless of workload.

Intel's power draw is not much of a "problem" if you look at real world watt usage instead of synthetic loads.

A friend of mine has a 13700K and it hovers around 100 watts in gaming, which is 40 watts lower than my 7800X3D; performance is very similar.

According to TechPowerUp's review of 14th gen, it also seems that Intel regains the lead in minimum fps at higher resolution.

The 14700K generally performs on par with the 7950X3D in gaming while performing similarly in content creation. Not too much difference, yet the i7 is much cheaper and boards are also generally cheaper than AM5 boards, especially if you choose the B650E/X670E boards. Power draw is about 100 watts higher on the i7 when peaked tho.

Last edited:

Aquinus

Resident Wat-man

- Joined

- Jan 28, 2012

- Messages

- 13,171 (2.81/day)

- Location

- Concord, NH, USA

| System Name | Apollo |

|---|---|

| Processor | Intel Core i9 9880H |

| Motherboard | Some proprietary Apple thing. |

| Memory | 64GB DDR4-2667 |

| Video Card(s) | AMD Radeon Pro 5600M, 8GB HBM2 |

| Storage | 1TB Apple NVMe, 4TB External |

| Display(s) | Laptop @ 3072x1920 + 2x LG 5k Ultrafine TB3 displays |

| Case | MacBook Pro (16", 2019) |

| Audio Device(s) | AirPods Pro, Sennheiser HD 380s w/ FIIO Alpen 2, or Logitech 2.1 Speakers |

| Power Supply | 96w Power Adapter |

| Mouse | Logitech MX Master 3 |

| Keyboard | Logitech G915, GL Clicky |

| Software | MacOS 12.1 |

> That said, I'm not sure why this requires HT to go away

It's because the scheduler has no knowledge of data dependencies or of how a thread performs on the core it's running on, so there simply isn't enough data available to the CPU scheduler to divvy out these tasks or move them in efficient ways. This gets a lot harder when you start adding multiple levels, because there are different tradeoffs to switching to different threads. Not to mention the cost of getting it wrong is huge, because context switches are expensive from a cache locality standpoint, so more often than not you want threads to have some level of "stickiness" to them. This poses a real problem when pairing cores with different performance characteristics as well as threads via SMT, which has its own series of tradeoffs.

I'd also partially blame the NT kernel for probably not having any good mechanisms for handling this sort of topology. Linux tends to have a better handle on these sorts of things because a lot of servers have to handle CPUs being on different sockets, and the cost of context switching between cores on the same package versus on another package is very real. There are some really good reasons that most servers run Linux, and it's because of these sorts of things.
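To make the "stickiness" idea concrete, here is a toy sketch of picking where to resume a thread based on how far it would have to move. This is not how the Linux or NT schedulers are implemented; the `Cpu` type, the `migration_cost` and `pick_cpu` helpers, and the cost numbers (0, 1, 10, 100) are all hypothetical and chosen only to order the cases: same logical CPU, SMT sibling, same package, other package.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    cpu_id: int   # logical CPU number
    core: int     # physical core within the package
    package: int  # socket / package number


def migration_cost(src, dst):
    """Made-up relative cost of resuming a thread on `dst` after it last ran on `src`."""
    if src.cpu_id == dst.cpu_id:
        return 0      # caches are still warm
    if (src.package, src.core) == (dst.package, dst.core):
        return 1      # SMT sibling: same physical core
    if src.package == dst.package:
        return 10     # different core, same package
    return 100        # different package entirely


def pick_cpu(last_ran_on, idle_cpus):
    """Choose the idle CPU that is cheapest to move to; ties go to the lowest id."""
    return min(idle_cpus, key=lambda c: (migration_cost(last_ran_on, c), c.cpu_id))


last = Cpu(cpu_id=0, core=0, package=0)
idle = [Cpu(5, 1, 1), Cpu(2, 1, 0), Cpu(1, 0, 0)]
choice = pick_cpu(last, idle)  # the SMT sibling of the core it last ran on
```

A real scheduler also has to weigh load, priority and, on hybrid parts, which kind of core is free, which is exactly where the tradeoffs described above pile up.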

- Joined

- Jun 21, 2013

- Messages

- 602 (0.14/day)

| Processor | Ryzen 9 3900x |

|---|---|

| Motherboard | MSI B550 Gaming Plus |

| Cooling | be quiet! Dark Rock Pro 4 |

| Memory | 32GB GSkill Ripjaws V 3600CL16 |

| Video Card(s) | 3060Ti FE 0.9v |

| Storage | Samsung 970 EVO 1TB, 2x Samsung 840 EVO 1TB |

| Display(s) | ASUS ProArt PA278QV |

| Case | be quiet! Pure Base 500 |

| Audio Device(s) | Edifier R1850DB |

| Power Supply | Super Flower Leadex III 650W |

| Mouse | A4Tech X-748K |

| Keyboard | Logitech K300 |

| Software | Win 10 Pro 64bit |

Well, the E-cores are kind of a replacement for HT, and if P-cores can be made to run at higher clocks with HT disabled to provide superior ST performance, why not do it?

- Joined

- Jan 5, 2017

- Messages

- 307 (0.11/day)

| System Name | Main |

|---|---|

| Processor | 8700K |

| Motherboard | Maximus Hero X |

| Cooling | EVGA 280 CLC w/ Noctua silent fans |

| Memory | 2x8GB 3600/16 |

| Video Card(s) | EVGA 2080TI Hybrid |

I've disabled HT on all my builds since at least the 8700K. No downside at all that I can tell for normal usage.

- Joined

- Feb 18, 2005

- Messages

- 5,847 (0.81/day)

- Location

- Ikenai borderline!

| System Name | Firelance. |

|---|---|

| Processor | Threadripper 3960X |

| Motherboard | ROG Strix TRX40-E Gaming |

| Cooling | IceGem 360 + 6x Arctic Cooling P12 |

| Memory | 8x 16GB Patriot Viper DDR4-3200 CL16 |

| Video Card(s) | MSI GeForce RTX 4060 Ti Ventus 2X OC |

| Storage | 2TB WD SN850X (boot), 4TB Crucial P3 (data) |

| Display(s) | 3x AOC Q32E2N (32" 2560x1440 75Hz) |

| Case | Enthoo Pro II Server Edition (Closed Panel) + 6 fans |

| Power Supply | Fractal Design Ion+ 2 Platinum 760W |

| Mouse | Logitech G602 |

| Keyboard | Razer Pro Type Ultra |

| Software | Windows 10 Professional x64 |

> I've disabled HT on all my builds since at least the 8700K. No downside at all that I can tell for normal usage.

If you never enable HT how would you know how much performance you're losing FFS.

- Joined

- Jan 22, 2024

- Messages

- 86 (0.28/day)

| Processor | 7800X3D |

|---|---|

| Cooling | Thermalright Peerless Assasin 120 |

| Memory | 32GB at 6000/30 |

| Video Card(s) | 7900 XT soon to be replaced by 4080 Super |

| Storage | WD Black SN850X 4TB |

| Display(s) | 1440p 360 Hz IPS + 34" Ultrawide 3440x1440 165 Hz IPS ... Hopefully going OLED this year |

> I've disabled HT on all my builds since at least the 8700K. No downside at all that I can tell for normal usage.

I clearly remember seeing fps numbers for 9700K vs 9900K where the 9900K performed much better in some games because of HT, especially when paired with a somewhat high-end GPU, and this was probably 4-5 years ago.

I'd probably not disable HT/SMT on a 6-core chip today; tons of games can use more than 6 threads.

- Joined

- Apr 30, 2011

- Messages

- 2,703 (0.54/day)

- Location

- Greece

| Processor | AMD Ryzen 5 5600@80W |

|---|---|

| Motherboard | MSI B550 Tomahawk |

| Cooling | ZALMAN CNPS9X OPTIMA |

| Memory | 2*8GB PATRIOT PVS416G400C9K@3733MT_C16 |

| Video Card(s) | Sapphire Radeon RX 6750 XT Pulse 12GB |

| Storage | Sandisk SSD 128GB, Kingston A2000 NVMe 1TB, Samsung F1 1TB, WD Black 10TB |

| Display(s) | AOC 27G2U/BK IPS 144Hz |

| Case | SHARKOON M25-W 7.1 BLACK |

| Audio Device(s) | Realtek 7.1 onboard |

| Power Supply | Seasonic Core GC 500W |

| Mouse | Sharkoon SHARK Force Black |

| Keyboard | Trust GXT280 |

| Software | Win 7 Ultimate 64bit/Win 10 pro 64bit/Manjaro Linux |

Almost surely next gen Intel CPUs won't have HT. They might have the reverse architecture, similar in thinking to the one Bulldozer had, combining 2 parts of the same X to produce one more powerful thread for specific workloads.

- Joined

- Jan 5, 2017

- Messages

- 307 (0.11/day)

| System Name | Main |

|---|---|

| Processor | 8700K |

| Motherboard | Maximus Hero X |

| Cooling | EVGA 280 CLC w/ Noctua silent fans |

| Memory | 2x8GB 3600/16 |

| Video Card(s) | EVGA 2080TI Hybrid |

> I clearly remember seeing fps numbers for 9700K vs 9900K where the 9900K performed much better in some games because of HT, especially when paired with a somewhat high-end GPU, and this was probably 4-5 years ago.
>
> I'd probably not disable HT/SMT on a 6-core chip today; tons of games can use more than 6 threads.

To each their own I guess. To me it's not worth the security risk, and the potential performance upside is minuscule to non-existent unless you're fully loading the CPU, which I almost never do anyway.

- Joined

- Jan 22, 2024

- Messages

- 86 (0.28/day)

| Processor | 7800X3D |

|---|---|

| Cooling | Thermalright Peerless Assasin 120 |

| Memory | 32GB at 6000/30 |

| Video Card(s) | 7900 XT soon to be replaced by 4080 Super |

| Storage | WD Black SN850X 4TB |

| Display(s) | 1440p 360 Hz IPS + 34" Ultrawide 3440x1440 165 Hz IPS ... Hopefully going OLED this year |

> To each their own I guess. To me it's not worth the security risk, and the potential performance upside is minuscule to non-existent unless you're fully loading the CPU, which I almost never do anyway.

Which security risk? Do you work for NASA?

Both Intel and AMD still use HT/SMT for a reason. Tons of software is optimized with this in mind.

HT/SMT does more good than harm. In most software you gain, not lose, performance.

Especially people with quad and hexa core chips should enable it for sure. Will make up for the lack of real cores.

- Joined

- Feb 20, 2020

- Messages

- 9,340 (5.36/day)

- Location

- Louisiana

| System Name | Ghetto Rigs z490|x99|Acer 17 Nitro 7840hs/ 5600c40-2x16/ 4060/ 1tb acer stock m.2/ 4tb sn850x |

|---|---|

| Processor | 10900k w/Optimus Foundation | 5930k w/Black Noctua D15 |

| Motherboard | z490 Maximus XII Apex | x99 Sabertooth |

| Cooling | oCool D5 res-combo/280 GTX/ Optimus Foundation/ gpu water block | Blk D15 |

| Memory | Trident-Z Royal 4000c16 2x16gb | Trident-Z 3200c14 4x8gb |

| Video Card(s) | Titan Xp-water | evga 980ti gaming-w/ air |

| Storage | 970evo+500gb & sn850x 4tb | 860 pro 256gb | Acer m.2 1tb/ sn850x 4tb| Many2.5" sata's ssd 3.5hdd's |

| Display(s) | 1-AOC G2460PG 24"G-Sync 144Hz/ 2nd 1-ASUS VG248QE 24"/ 3rd LG 43" series |

| Case | D450 | Cherry Entertainment center on Test bench |

| Audio Device(s) | Built in Realtek x2 with 2-Insignia 2.0 sound bars & 1-LG sound bar |

| Power Supply | EVGA 1000P2 with APC AX1500 | 850P2 with CyberPower-GX1325U |

| Mouse | Redragon 901 Perdition x3 |

| Keyboard | G710+x3 |

| Software | Win-7 pro x3 and win-10 & 11pro x3 |

| Benchmark Scores | Are in the benchmark section |

Hi,

Yeah killing or just limiting e-core use would be best

They were always supposed to be used for background tasks, and to me that is all Windows tasks, not necessarily user tasks, so limit their use to updates/security/MS services....

Then P-cores can do all user workloads with or without HT.

Without HT they can run a little cooler, but then again P-cores can clock a little higher too.

But mainly Intel is just trying to mess with AMD by taking away HT; Intel knows they have the higher clock speeds in the bag

Never underestimate intel's ability to troll amd x3d lol


- Joined

- Feb 18, 2005

- Messages

- 5,847 (0.81/day)

- Location

- Ikenai borderline!

| System Name | Firelance. |

|---|---|

| Processor | Threadripper 3960X |

| Motherboard | ROG Strix TRX40-E Gaming |

| Cooling | IceGem 360 + 6x Arctic Cooling P12 |

| Memory | 8x 16GB Patriot Viper DDR4-3200 CL16 |

| Video Card(s) | MSI GeForce RTX 4060 Ti Ventus 2X OC |

| Storage | 2TB WD SN850X (boot), 4TB Crucial P3 (data) |

| Display(s) | 3x AOC Q32E2N (32" 2560x1440 75Hz) |

| Case | Enthoo Pro II Server Edition (Closed Panel) + 6 fans |

| Power Supply | Fractal Design Ion+ 2 Platinum 760W |

| Mouse | Logitech G602 |

| Keyboard | Razer Pro Type Ultra |

| Software | Windows 10 Professional x64 |

> It's because the scheduler has no knowledge of data dependencies or of how a thread performs on the core it's running on, so there simply isn't enough data available to the CPU scheduler to divvy out these tasks or move them in efficient ways. This gets a lot harder when you start adding multiple levels, because there are different tradeoffs to switching to different threads. Not to mention the cost of getting it wrong is huge, because context switches are expensive from a cache locality standpoint, so more often than not you want threads to have some level of "stickiness" to them. This poses a real problem when pairing cores with different performance characteristics as well as threads via SMT, which has its own series of tradeoffs.

Yeah, but can't the problems with HT be solved in a similar manner, i.e. by bundling more context along with each task such that the CPU is able to make better decisions around scheduling?

> I'd also partially blame the NT kernel for probably not having any good mechanisms for handling this sort of topology. Linux tends to have a better handle on these sorts of things because a lot of servers have to handle CPUs being on different sockets, and the cost of context switching between cores on the same package versus on another package is very real. There are some really good reasons that most servers run Linux, and it's because of these sorts of things.

But there hasn't really been a concept of heterogeneous cores in the same package until now, and Windows Server has definitely encountered some of the same pains in core scheduling that Linux has. Everything I've read so far indicates that the issues desktop Windows has encountered around scheduling over heterogeneous cores involve high-performance/low-latency applications like games, not the line-of-business stuff that you'd find running on servers.

- Joined

- Sep 17, 2014

- Messages

- 22,449 (6.03/day)

- Location

- The Washing Machine

| Processor | 7800X3D |

|---|---|

| Motherboard | MSI MAG Mortar b650m wifi |

| Cooling | Thermalright Peerless Assassin |

| Memory | 32GB Corsair Vengeance 30CL6000 |

| Video Card(s) | ASRock RX7900XT Phantom Gaming |

| Storage | Lexar NM790 4TB + Samsung 850 EVO 1TB + Samsung 980 1TB + Crucial BX100 250GB |

| Display(s) | Gigabyte G34QWC (3440x1440) |

| Case | Lian Li A3 mATX White |

| Audio Device(s) | Harman Kardon AVR137 + 2.1 |

| Power Supply | EVGA Supernova G2 750W |

| Mouse | Steelseries Aerox 5 |

| Keyboard | Lenovo Thinkpad Trackpoint II |

| Software | W11 IoT Enterprise LTSC |

| Benchmark Scores | Over 9000 |

> To each their own I guess. To me it's not worth the security risk, and the potential performance upside is minuscule to non-existent unless you're fully loading the CPU, which I almost never do anyway.

Euh.. ok

Just so you know, you can swallow your pride when we're not looking and quietly enable it. It's fine.

- Joined

- Feb 3, 2017

- Messages

- 3,755 (1.32/day)

| Processor | Ryzen 7800X3D |

|---|---|

| Motherboard | ROG STRIX B650E-F GAMING WIFI |

| Memory | 2x16GB G.Skill Flare X5 DDR5-6000 CL36 (F5-6000J3636F16GX2-FX5) |

| Video Card(s) | INNO3D GeForce RTX™ 4070 Ti SUPER TWIN X2 |

| Storage | 2TB Samsung 980 PRO, 4TB WD Black SN850X |

| Display(s) | 42" LG C2 OLED, 27" ASUS PG279Q |

| Case | Thermaltake Core P5 |

| Power Supply | Fractal Design Ion+ Platinum 760W |

| Mouse | Corsair Dark Core RGB Pro SE |

| Keyboard | Corsair K100 RGB |

| VR HMD | HTC Vive Cosmos |

> Out of interest, since this is a bit out of my field, was this also the case with the "separate cores sharing some HW" approach the like of which Bulldozer used?

Very minor difference, if any. IIRC the L1 data cache was one thing that was in separate units, but the rest of the shared parts are all the same ones that have had vulnerabilities in other architectures - caches, registers and associated management hardware.

> Does HT impact power consumption in any meaningful way? If it does, maybe that is Intel's goal. Substitute HT with ecores to reduce power consumption

It increases power consumption. After all, you get some 15-30% extra performance, and that happens with more of the chip being in use. How much exactly is difficult to say and varies wildly across different use cases. Also, everything is dynamic these days - clocks, voltages etc - with the power limit being the main factor (on some level which is not necessarily per core).

> That said, I'm not sure why this requires HT to go away; it feels like something that should be fixed at the scheduling level, not by completely reworking some very fundamental ways in which cores have been designed for over a decade. But considering how much pain scheduling over P- and E-cores has given Intel so far (WRT having both the CPU and OS having to be aware of which cores to schedule tasks to), they may have determined that this rather drastic approach - which seems to imply moving scheduling out of the operating system and fully back onto the CPU - is worth it.

P/E core scheduling itself has proven to be pretty problematic even up to this point. P and E cores are quite different in performance, and HT potentially widens that gap by another 30-35%. I bet simply reducing the variability of how long a task takes on one kind of core makes scheduling easier on an already mismatched set of cores.
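A back-of-the-envelope sketch of that variability argument, under loudly stated assumptions: two threads sharing a P-core each get half of the core's SMT-boosted throughput, the SMT uplift is 30% (the top of the 15-30% range mentioned above), and an E-core runs at 0.7x a P-core. The 0.7 figure and the function names are invented for illustration and are not measurements of any real CPU.

```python
def per_thread_speed(smt_uplift):
    """Speed of each of two threads sharing a core, relative to one thread owning it."""
    return (1.0 + smt_uplift) / 2.0


def completion_times(work, p_speed, e_speed, smt_uplift=None):
    """Time to finish `work` for each place the scheduler could put the task."""
    times = {
        "P-core, alone": work / p_speed,
        "E-core": work / e_speed,
    }
    if smt_uplift is not None:
        shared = p_speed * per_thread_speed(smt_uplift)
        times["P-core, sharing with an SMT sibling"] = work / shared
    return times


def spread(times):
    """Ratio of slowest to fastest outcome; 1.0 would mean placement does not matter."""
    return max(times.values()) / min(times.values())


# Hypothetical numbers: E-core at 0.7x a P-core, SMT uplift of 30%.
with_smt = completion_times(work=1.0, p_speed=1.0, e_speed=0.7, smt_uplift=0.30)
without_smt = completion_times(work=1.0, p_speed=1.0, e_speed=0.7)
```

With these made-up inputs, dropping SMT removes one of the three possible outcomes and narrows the gap between best and worst placement, which is the "less variability" point in a nutshell. Different assumed speeds would shift the numbers but not the shape of the argument.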

> I'd also partially blame the NT kernel for probably not having any good mechanisms for handling this sort of topology. Linux tends to have a better handle on these sorts of things because a lot of servers have to handle CPUs being on different sockets, and the cost of context switching between cores on the same package versus on another package is very real. There are some really good reasons that most servers run Linux, and it's because of these sorts of things.

I propose a different take on this - Microsoft's problems are twofold:

1. They want to and need to put their best foot forward on desktop. This leads, and has led, to solutions optimized for presumed use cases. The Windows Server scheduler seems to work a bit differently than the desktop Windows variants.

2. Microsoft is a company that needs to cater to the needs and wants of hardware manufacturers. The best example I can think of was Bulldozer - AMD wanted it to be 8-core and Microsoft obliged, with performance issues and patches back and forth to get it working properly. What did Linux do? Did not give a crap and treated it as a 4c/8t CPU, which is what it architecturally was (or was closest to), and that worked just fine. Windows in the end went for the same solution... This is not the only example; both Intel and AMD have butted heads with Microsoft about scheduling on various strange CPUs.

Last edited:

> Intel has the best overall CPUs in the desktop segment IMO. Performance is not Intel's problem, power usage is. With AMD you have to choose depending on your needs.
>
> I bought the 7800X3D because it's the best gaming CPU and uses low power, but for actual work it's not impressive, and the 13700K/14700K, which is priced similarly, will destroy my chip in most stuff outside of gaming while only being a few percent behind in gaming.
>
> The 7950X3D was supposed to be the sweet spot with good results regardless of workload; however, it loses to the 7800X3D in gaming and is beaten by Intel in content creation. The 7950X beats it as well.
>
> I hope AMD will address this with Zen 5. Hopefully the 3D models won't be too far off the initial release, and hopefully all the cores will get 3D cache this time. The 7900X3D was kinda meh, and the 7950X3D should have 16 cores with 3D cache for sure with that price tag. In a perfect world there would be no 3D parts. The CPUs should be good overall regardless of workload.
>
> Intel's power draw is not much of a "problem" if you look at real world watt usage instead of synthetic loads.
>
> A friend of mine has a 13700K and it hovers around 100 watts in gaming, which is 40 watts lower than my 7800X3D; performance is very similar.
>
> According to TechPowerUp's review of 14th gen, it also seems that Intel regains the lead in minimum fps at higher resolution.
>
> The 14700K generally performs on par with the 7950X3D in gaming while performing similarly in content creation. Not too much difference, yet the i7 is much cheaper and boards are also generally cheaper than AM5 boards, especially if you choose the B650E/X670E boards. Power draw is about 100 watts higher on the i7 when peaked tho.

Just go back to Intel, no one cares. You are arguing about a 2% difference in performance that no one really notices most of the time.

- Joined

- Oct 28, 2012

- Messages

- 1,190 (0.27/day)

| Processor | AMD Ryzen 3700x |

|---|---|

| Motherboard | asus ROG Strix B-350I Gaming |

| Cooling | Deepcool LS520 SE |

| Memory | crucial ballistix 32Gb DDR4 |

| Video Card(s) | RTX 3070 FE |

| Storage | WD sn550 1To/WD ssd sata 1To /WD black sn750 1To/Seagate 2To/WD book 4 To back-up |

| Display(s) | LG GL850 |

| Case | Dan A4 H2O |

| Audio Device(s) | sennheiser HD58X |

| Power Supply | Corsair SF600 |

| Mouse | MX master 3 |

| Keyboard | Master Key Mx |

| Software | win 11 pro |

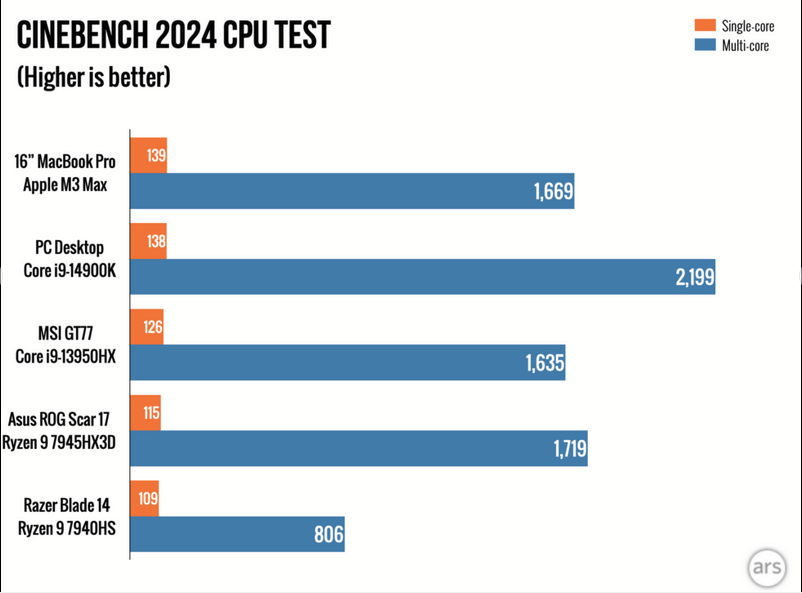

Lots of emotions around this, but Apple managed to have honorable MT performance without SMT since the M1. The M3 ultra should handily beat a 14900k. ARL is going to be a proof of concept, but down the line, Intel might have more flexibility when it comes to core config/core count to definitely make up for the lack of HT.

- Joined

- Feb 20, 2020

- Messages

- 9,340 (5.36/day)

- Location

- Louisiana

| System Name | Ghetto Rigs z490|x99|Acer 17 Nitro 7840hs/ 5600c40-2x16/ 4060/ 1tb acer stock m.2/ 4tb sn850x |

|---|---|

| Processor | 10900k w/Optimus Foundation | 5930k w/Black Noctua D15 |

| Motherboard | z490 Maximus XII Apex | x99 Sabertooth |

| Cooling | oCool D5 res-combo/280 GTX/ Optimus Foundation/ gpu water block | Blk D15 |

| Memory | Trident-Z Royal 4000c16 2x16gb | Trident-Z 3200c14 4x8gb |

| Video Card(s) | Titan Xp-water | evga 980ti gaming-w/ air |

| Storage | 970evo+500gb & sn850x 4tb | 860 pro 256gb | Acer m.2 1tb/ sn850x 4tb| Many2.5" sata's ssd 3.5hdd's |

| Display(s) | 1-AOC G2460PG 24"G-Sync 144Hz/ 2nd 1-ASUS VG248QE 24"/ 3rd LG 43" series |

| Case | D450 | Cherry Entertainment center on Test bench |

| Audio Device(s) | Built in Realtek x2 with 2-Insignia 2.0 sound bars & 1-LG sound bar |

| Power Supply | EVGA 1000P2 with APC AX1500 | 850P2 with CyberPower-GX1325U |

| Mouse | Redragon 901 Perdition x3 |

| Keyboard | G710+x3 |

| Software | Win-7 pro x3 and win-10 & 11pro x3 |

| Benchmark Scores | Are in the benchmark section |

Hi,

MS doesn't give a crap about desktop; the only thing they care about is the mobile world, since it aligns with OneDrive storage/....

Desktops are a thorn in their backside; they wish they would go away, and use a lower carbon footprint as the reason why lol
