Raevenlord

News Editor


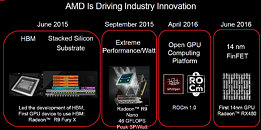

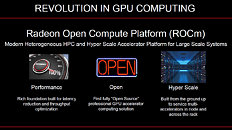

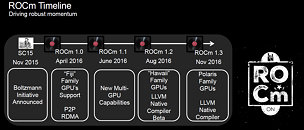

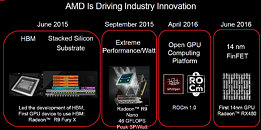

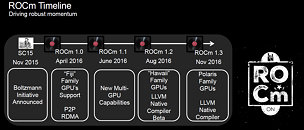

AMD on Monday announced its ROCm initiative. Introduced by Gregory Stoner, AMD's Senior Director for the Radeon Open Compute Initiative, ROCm stands for Radeon Open Compute platforM. This open-standard, high-performance, hyperscale computing platform builds on AMD's technological expertise and accomplishments, with cards like the Radeon R9 Nano achieving as much as 46 GFLOPS of peak single-precision performance per watt.
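The 46 GFLOPS-per-watt figure can be checked with simple arithmetic. The sketch below assumes AMD's published R9 Nano specifications, which are not in the article: 4,096 stream processors at up to 1,000 MHz and 175 W of typical board power, with each stream processor retiring one fused multiply-add (two FLOPs) per clock. The helper names are illustrative only.

```python
# Peak single-precision throughput for a GCN GPU, assuming each stream
# processor retires one fused multiply-add (2 FLOPs) per clock cycle.
def peak_sp_gflops(stream_processors: int, clock_mhz: float) -> float:
    return stream_processors * 2 * clock_mhz / 1000.0


def gflops_per_watt(gflops: float, board_power_w: float) -> float:
    return gflops / board_power_w


# Assumed Radeon R9 Nano figures (AMD spec sheet, not from the article).
nano_gflops = peak_sp_gflops(stream_processors=4096, clock_mhz=1000)
nano_efficiency = gflops_per_watt(nano_gflops, board_power_w=175)

print(f"{nano_gflops:.0f} GFLOPS, {nano_efficiency:.1f} GFLOPS/W")
# prints "8192 GFLOPS, 46.8 GFLOPS/W"
```

Since this is a theoretical peak at maximum clock, real workloads land below it; the result simply shows where the "as much as 46 GFLOPS per watt" number comes from.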

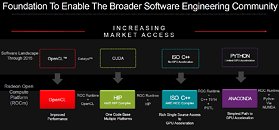

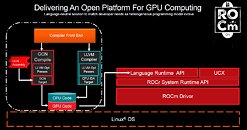

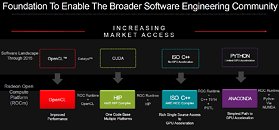

A natural evolution of AMD's Boltzmann Initiative, ROCm gives developers a platform for programming AMD GPUs through a variety of popular programming languages, such as OpenCL, CUDA, ISO C++, and Python. AMD knows that hardware is only one piece of an ecosystem, and that hardware without supporting software is a recipe for failure. ROCm is therefore AMD's push into HPC, pairing its hardware with support for open standards and with tools for converting otherwise proprietary code.

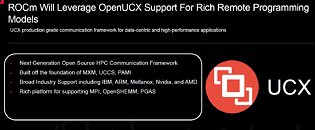

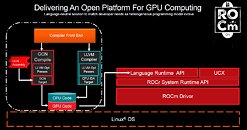

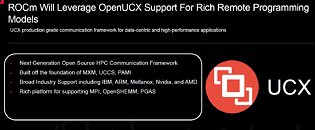

Built from the ground up, the platform caters to developers' wishes: it is fully open source, from the metal all the way up to the compilers and libraries, and supports both low-level and high-level approaches. Recognizing that its programming-language support had been lacking, AMD now lets ROCm developers use not only OpenCL (version 1.2 and later), but also NVIDIA's CUDA (through AMD's Heterogeneous-Compute Interface for Portability (HIP) compiler), ISO C++ (with GPU acceleration through AMD's Heterogeneous Compute Compiler (HCC), which supports C++11/14/17 and FSTL), and Anaconda (traditional Python via Numba and AMD's ROC Runtime). ROCm also supports OpenUCX, a next-generation open-source HPC communication framework.
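CUDA support through HIP (Heterogeneous-Compute Interface for Portability) works because HIP deliberately mirrors the CUDA runtime API, so much of a port is a mechanical rename of `cuda*` calls to their `hip*` counterparts; AMD's hipify tools automate this step. The Python sketch below is a toy illustration of that idea, not AMD's tool: the `hipify` function and its small `CUDA_TO_HIP` table are hypothetical, although the HIP names in the table are real runtime identifiers. Kernel launches, libraries, and everything else a real conversion must handle are deliberately left out.

```python
import re

# Hypothetical, tiny subset of the CUDA runtime -> HIP runtime renames.
# The real hipify tools use a far larger mapping table.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
    "cudaFree": "hipFree",
}

# Match whole identifiers only; longest names first as an extra safeguard.
_PATTERN = re.compile(
    r"\b("
    + "|".join(re.escape(name) for name in sorted(CUDA_TO_HIP, key=len, reverse=True))
    + r")\b"
)


def hipify(source: str) -> tuple[str, int]:
    """Return the translated source and the number of identifiers renamed."""
    return _PATTERN.subn(lambda m: CUDA_TO_HIP[m.group(1)], source)


cuda_src = """#include <cuda_runtime.h>
float *d_x;
cudaMalloc(&d_x, n * sizeof(float));
cudaMemcpy(d_x, h_x, n * sizeof(float), cudaMemcpyHostToDevice);
cudaDeviceSynchronize();
cudaFree(d_x);
"""

hip_src, renamed = hipify(cuda_src)
print(hip_src)
print(f"{renamed} identifiers renamed")  # prints "6 identifiers renamed"
```

The word boundaries in the regular expression keep `cudaMemcpy` from matching inside `cudaMemcpyHostToDevice`, so each identifier is renamed exactly once and the rest of the code passes through untouched.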

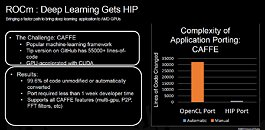

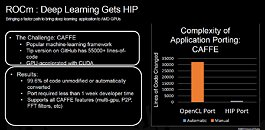

The platform also adds native Float16 instruction support and allows high-performance, largely automated code conversion. On the tip (latest development) version of Caffe, a popular machine-learning framework of more than 55,000 lines of code that relies heavily on NVIDIA CUDA, about 99.6% of the code was converted automatically through HIP, with all of Caffe's features supported; a typical OpenCL port, by contrast, would require reworking more than 30,000 lines of code. AMD hopes this will make its products more attractive by letting them run not only code built on the open standards AMD is pushing, but also high-impact proprietary solutions, with minimal investment.
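To put the Caffe numbers in perspective: if the 99.6% figure is measured in lines of code (an assumption, since the article does not say), roughly 220 of Caffe's 55,000+ lines would still need manual attention under HIP, against more than 30,000 for an OpenCL port. A quick Python check of that arithmetic, treating the article's lower bounds as exact values:

```python
# Figures quoted in the article; both line counts are lower bounds
# ("55,000+", "over 30,000"), so the results are rough estimates.
CAFFE_LINES = 55_000
AUTO_CONVERTED_FRACTION = 0.996  # share converted automatically through HIP
OPENCL_REWORK_LINES = 30_000

# Assumption: the 99.6% figure refers to lines of code.
hip_manual_lines = round(CAFFE_LINES * (1 - AUTO_CONVERTED_FRACTION))
effort_ratio = OPENCL_REWORK_LINES / hip_manual_lines

print(f"HIP: ~{hip_manual_lines} lines left to port by hand")
print(f"OpenCL: {OPENCL_REWORK_LINES:,}+ lines, ~{effort_ratio:.0f}x more")
# prints:
# HIP: ~220 lines left to port by hand
# OpenCL: 30,000+ lines, ~136x more
```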

Supported hardware includes the recently announced Radeon Pro WX 4100, WX 5100, and WX 7100, AMD's latest consumer boards (the RX 460, RX 470, and RX 480), and previous-generation Nano and FirePro products, all part of a broader hardware ecosystem that includes AMD64, ARM AArch64, IBM OpenPOWER, Gen-Z, CCIX, and OpenCAPI.

View at TechPowerUp Main Site
