Raevenlord

News Editor

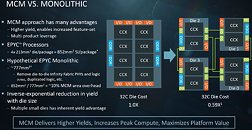

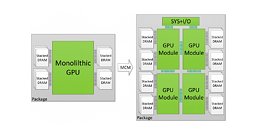

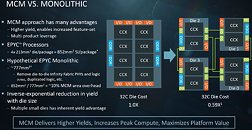

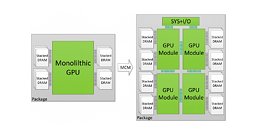

With Moore's Law widely pronounced to be in its death throes, traditional monolithic die designs are becoming increasingly expensive to manufacture. It's no secret that both AMD and NVIDIA have been exploring an MCM (Multi-Chip Module) approach as a way of moving from monolithic dies to a much more manageable "chiplet" design. AMD has already done this in different ways: with its Zen line of CPUs (two CPU modules of four cores each, linked via the company's Infinity Fabric interconnect), and with its R9 and Vega graphics cards, which take another approach by packaging memory and the graphics processing die on the same silicon base - an interposer.

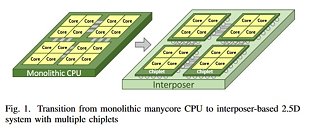

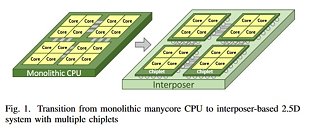

This "chiplet" approach is easy to understand - smaller, leaner chips can be produced with higher yields than large, monolithic ones, which are more prone to silicon defects. This increased yield (and, in the end, increased number of working chips per wafer) allows AMD to reduce manufacturing costs and increase production efficiency by using a single mask for a quad-core module, for instance. Afterwards, it's "simply" a matter of scaling the number of modules to the desired core count and performance level - from eight-core Ryzen CPUs to 32-core Threadripper II CPUs. However, as the number of modules in a given chip increases, so does the complexity of signal processing and routing - two modules communicating with each other is relatively easy to achieve, but six or eight modules? Not so much, as a small hiccup in information transmission can deadlock the entire processor (this applies to both CPU and GPU designs). The challenge grows when joining different types of chips - from memory to processing dies and I/O controllers - on a single slice of silicon. This is where interposers come in - and where AMD is making strides towards an active interposer design.
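The yield argument above can be made concrete with a rough back-of-the-envelope sketch. It uses the simple Poisson yield model (the chance of a die having zero defects falls off exponentially with its area), which is a textbook approximation and not something taken from AMD's paper. The 600 mm² total area, the four-way split, and the defect density `D0` are all assumed numbers for illustration only, and the sketch ignores interposer cost, assembly losses, and the extra area chiplets spend on interconnect.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies expected to have zero defects (simple Poisson model)."""
    return math.exp(-area_mm2 * defects_per_mm2)


def silicon_per_good_part(total_area_mm2: float, n_chiplets: int,
                          defects_per_mm2: float) -> float:
    """Expected wafer area spent per working product.

    Assumes every chiplet is tested before assembly, so a defect only
    scraps the one small die it lands on rather than the whole product.
    """
    chiplet_area = total_area_mm2 / n_chiplets
    good_fraction = poisson_yield(chiplet_area, defects_per_mm2)
    return n_chiplets * chiplet_area / good_fraction


D0 = 0.002  # assumed defect density: 0.2 defects per square centimetre

monolithic = silicon_per_good_part(600, 1, D0)  # one hypothetical 600 mm^2 die
chiplets = silicon_per_good_part(600, 4, D0)    # four hypothetical 150 mm^2 dies

print(f"monolithic: {monolithic:.0f} mm^2 of wafer per good part")
print(f"4 chiplets: {chiplets:.0f} mm^2 of wafer per good part")
```

Under these assumptions the four-chiplet version burns noticeably less wafer area per working part, simply because a single defect no longer throws away the full 600 mm².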

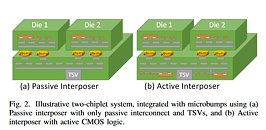

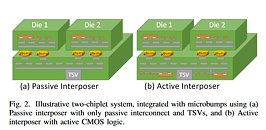

Passive interposers - such as the one found in the AMD Vega graphics cards - are simply a "dumb" silicon enabler of data transmission - information is sent exactly as it was engineered to be sent, through the TSV (Through-Silicon Via) design. However, this approach falls short of the dream of an open approach to chip integration - where manufacturers can create their own designs, purchase chips from other companies, and mix and match according to their end-product requirements. A "dumb", passive interposer won't suffice here - data routing problems would have to be solved manually for each different implementation, which is prohibitive from a cost perspective, and a big no-no for the approach. The solution, as AMD puts it in their "Cost-Effective Design of Scalable High-Performance Systems Using Active and Passive Interposers" paper, can be found, at least partially, in an active interposer - an interposer that features network logic (NoC - Network on Chip) that can adapt to the different slices of silicon grafted onto it. And this isn't just theory - active interposer designs have been manufactured, showing "improved signaling and efficiency over passive interposer [design]."

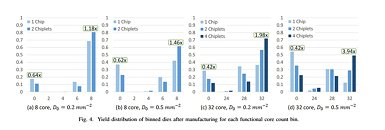

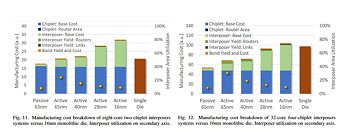

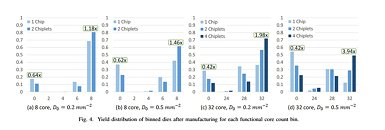

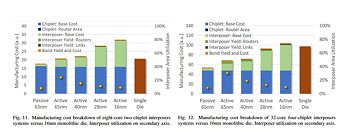

A big problem for this active interposer approach, however, is cost - again, the downfall of many a promising technology. AMD, however, is actively working on building enough redundancy into the active interposer design that yields aren't much of a concern - hitting the sweet spot between development cost and yield. Nothing is worse than paying for a large-area active interposer die, with all of its network circuitry, only to have it carry defects that render it unusable.

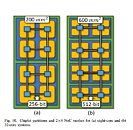

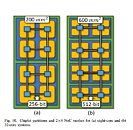

For now, AMD says these active interposers ought to be reserved for high-performance designs - not surprising, given the added R&D cost over passive interposer or interposer-less designs. The dream of mixing, matching, and reusing different IPs at will via a "simple" substrate, however, is very much alive. AMD researchers showed that 32-core processors - with room to scale, based on estimated monolithic die complexity versus the chiplet approach - could be built with much better yield and lower manufacturing cost using a chiplet + passive/active interposer design than as a monolithic 16 nm die.

The process used to fabricate the interposer is very important here - to keep yields high, building active or passive interposers on 65 nm through 28 nm processes reduces development and manufacturing costs. Active interposers, while more expensive than their passive counterparts, add the aforementioned networking capabilities, which make deadlock-free operation possible by routing signals as needed between the different components of the design.
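To give a feel for what "deadlock-free routing" can mean in a network-on-chip, here is a minimal sketch of dimension-order (XY) routing on a 2D mesh. This is a classic textbook scheme swapped in purely for illustration; the article does not describe the routing logic AMD's active interposer actually uses, and the coordinates, function names, and mesh layout below are all assumptions. The idea is that every packet travels fully along X before it moves in Y, so no packet ever turns from Y back to X, and the circular waiting pattern that causes deadlock on a mesh cannot form.

```python
def xy_route(src, dst):
    """Return the list of (x, y) routers visited from src to dst.

    The packet first travels along X until the column matches, then along Y.
    """
    x, y = src
    path = [(x, y)]
    while x != dst[0]:
        x += 1 if dst[0] > x else -1
        path.append((x, y))
    while y != dst[1]:
        y += 1 if dst[1] > y else -1
        path.append((x, y))
    return path


def turns_y_to_x(path):
    """True if the path moves in Y and later in X (the turn XY routing forbids)."""
    moved_y = False
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        if y1 != y0:
            moved_y = True
        elif moved_y and x1 != x0:
            return True
    return False


route = xy_route((0, 0), (2, 1))
print(route)                # [(0, 0), (1, 0), (2, 0), (2, 1)]
print(turns_y_to_x(route))  # False
```

A real interposer network would also have to cope with chiplets that bring their own internal networks, which is a harder problem than this toy mesh covers.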

View at TechPowerUp Main Site
