FordGT90Concept

> Seems like we may still be a long way from games using it at high levels of detail, though maybe a cartoon-style game could pull it off?

That's not the way raytracing works. Light source -> bounces -> eye. What it bounces off of (be it a highly detailed model or a matte-colored wall) doesn't matter in terms of compute. All it changes is the information the ray carries.
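To make the "light source -> bounces -> eye" point concrete, here is a rough toy sketch in Python. It is not how any real renderer or RTX hardware does it; `Surface`, `albedo`, and `trace` are made-up names for illustration, and it assumes the cost of finding what the ray hits is ignored. It only shows that each bounce does the same amount of shading work and that the surface just changes the color the ray carries.

```python
from dataclasses import dataclass


@dataclass
class Surface:
    # Hypothetical surface description: a name and the fraction of
    # red/green/blue light it reflects (its albedo).
    name: str
    albedo: tuple


def trace(light_color, bounces):
    """Carry light from the source across each surface in order.

    Returns the color that reaches the eye and the number of bounce
    steps performed. Every bounce costs one multiply per channel,
    whatever the surface happens to be.
    """
    color = light_color
    steps = 0
    for surface in bounces:
        # The surface only changes the information the ray carries.
        color = tuple(c * a for c, a in zip(color, surface.albedo))
        steps += 1
    return color, steps


white_light = (1.0, 1.0, 1.0)
matte_wall = Surface("matte wall", (0.8, 0.8, 0.8))
detailed_model = Surface("detailed model", (0.9, 0.5, 0.2))

# Two bounces either way: same work, different color at the eye.
wall_color, wall_steps = trace(white_light, [matte_wall, matte_wall])
model_color, model_steps = trace(white_light, [detailed_model, matte_wall])
```

Both calls do two bounce steps; only the resulting color differs.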

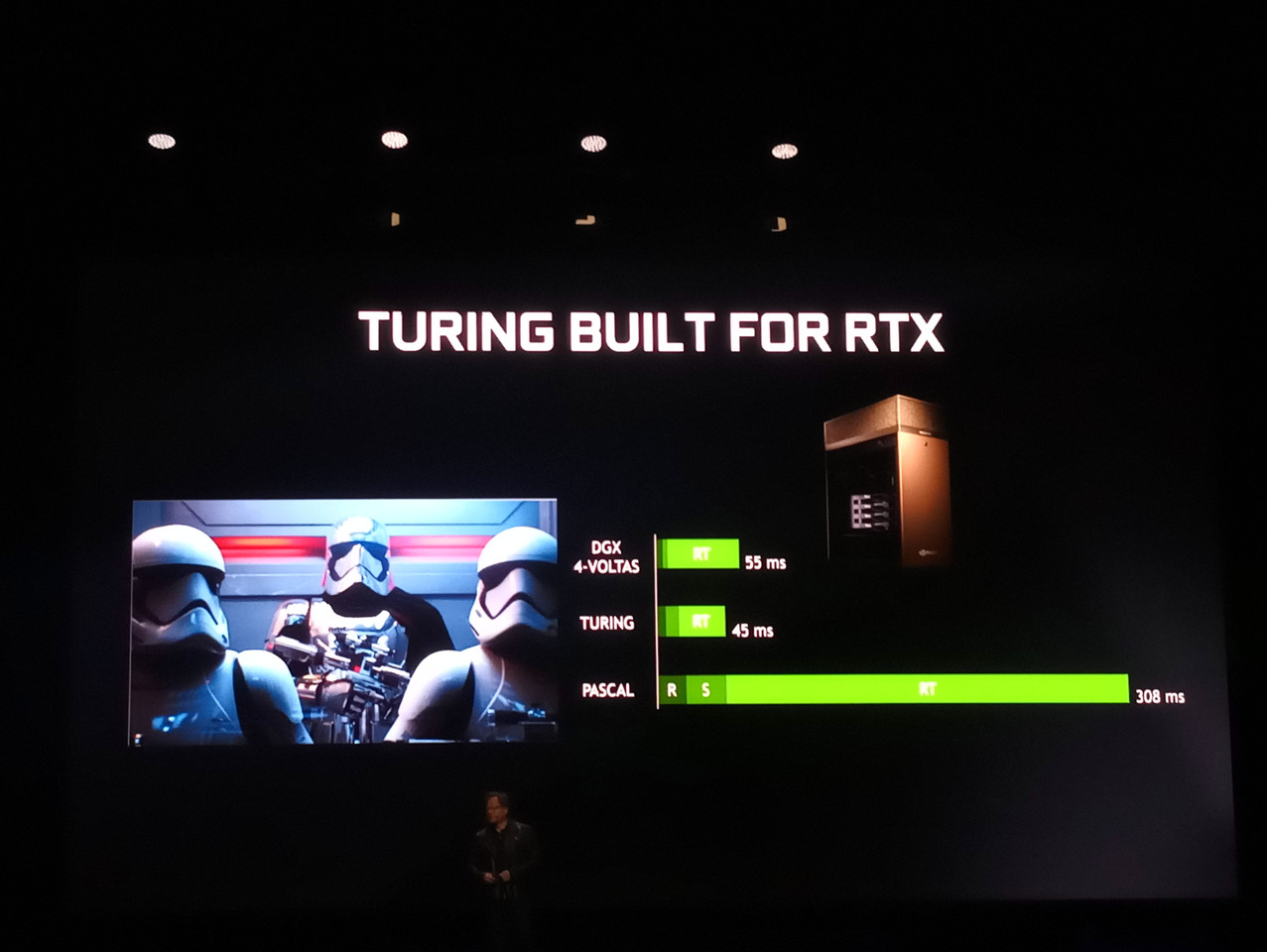

I see what NVIDIA is trying to do: end the use of traditional lighting methods. But this is a problem because of backwards compatibility. They need a crapload of shaders to keep older games running while they also sink a crapload of transistors into RT and Tensor cores to raytrace and denoise it. Turing could be a lot better at either one. NVIDIA isn't wrong that we're at a branching point in computer graphics. Either we keep faking it until we make it or we start raytracing.

Wow! Raytracing must seriously be a resource hog if the top-of-the-line 2080 Ti can only manage those frames at 1080p.
