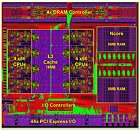

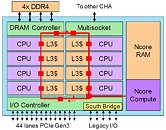

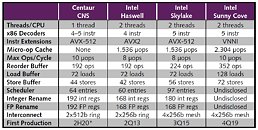

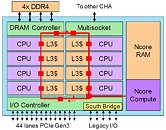

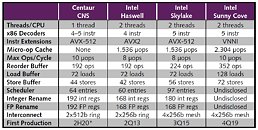

Centaur Technology today revealed in-depth information about its new processor-design technology for integrating high-performance x86 CPUs with a specialized co-processor optimized for artificial intelligence (AI) acceleration. On its website, Centaur provides a new independent report from The Linley Group, the industry's leading authority on microprocessor technology and publisher of Microprocessor Report. The Linley Group reviewed Centaur's detailed design documents and interviewed Centaur's CPU and AI architects to support its analysis of both Centaur's newest x86 microarchitecture and the AI co-processor design.

"Centaur is galloping back into the x86 market with an innovative processor design that combines eight high-performance CPUs with a custom deep-learning accelerator (DLA). The company is the first to announce a server-processor design that integrates a DLA. The new accelerator, called Ncore, delivers better neural-network performance than even the most powerful Xeon, but without the high cost of an external GPU card," stated Linley Gwennap, Editor-in-Chief, Microprocessor Report.

The report can be accessed here (PDF).

The Linley Group referenced certified MLPerf benchmark (Preview) scores to compare Centaur's AI performance to high-end x86 CPU cores from the leading x86 vendor. Based on MLPerf scores, Centaur's AI co-processor inference performance is comparable to 23 of Intel's world-class x86 cores that now support 512-bit vector neural network instructions (VNNI). Centaur's AI co-processor uses a single-instruction-multiple-data (SIMD) approach that is architecturally similar to VNNI, but crunches 32,768 bits in a single clock cycle using a 16 MB memory with 20 terabytes/sec of bandwidth. Moreover, by offloading inference processing to a specialized co-processor, the x86 CPU cores remain available for other, more general-purpose tasks. Application developers can develop new algorithms that take advantage of the unparalleled inference latency enabled by Centaur's AI performance and tight integration with x86 CPUs.
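To put the quoted SIMD figures in perspective, here is a rough back-of-the-envelope sketch in Python. The 32,768-bit width, the 512-bit VNNI width, and the 20 TB/s bandwidth come from the text above. The clock speed is not stated in the press release, so `ASSUMED_CLOCK_HZ` is purely a placeholder assumption for illustration, and the 8-bit lane size is likewise an assumption about the data type.

```python
# Figures quoted in the press release
SIMD_BITS = 32_768               # bits processed per clock cycle
VNNI_BITS = 512                  # width of the x86 VNNI vector instructions
BANDWIDTH_BYTES_PER_S = 20e12    # 20 terabytes/sec (decimal terabytes)

# ASSUMPTION: the clock speed is NOT given in the press release.
# 2.5 GHz is a placeholder chosen only to make the arithmetic concrete.
ASSUMED_CLOCK_HZ = 2.5e9

# Width of one SIMD operation in bytes
simd_bytes = SIMD_BITS // 8

# How many 512-bit VNNI vectors fit in one co-processor operation
width_ratio = SIMD_BITS // VNNI_BITS

# ASSUMPTION: 8-bit operands, so one lane per byte
int8_lanes = simd_bytes

# Bytes the memory would have to deliver each cycle at the assumed clock
bytes_per_cycle = BANDWIDTH_BYTES_PER_S / ASSUMED_CLOCK_HZ

# Expressed as a number of full-width SIMD rows per cycle
rows_per_cycle = bytes_per_cycle / simd_bytes

print(f"SIMD width: {simd_bytes} bytes ({width_ratio}x a 512-bit vector)")
print(f"8-bit lanes per operation: {int8_lanes}")
print(f"Bytes per cycle at assumed clock: {bytes_per_cycle:.0f}")
print(f"Full-width rows per cycle: {rows_per_cycle:.2f}")
```

Under that assumed clock, the quoted bandwidth works out to roughly two full-width rows per cycle. A different clock would change that number, so treat it as an illustration of the arithmetic rather than a specification.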

Attendees at the ISC East trade show in NYC saw Centaur's new technology up close for the first time. The demo showcased video analytics using Centaur's reference system with x86-based network-video-recording (NVR) software from Qvis Labs. In addition to conventional, real-time object detection/classification, Centaur was the only vendor at the show to highlight leading-edge applications such as semantic segmentation (pixel-level image classification) and a new technique for human pose estimation ("stick figures"). Centaur is focused on improving the hardware price/performance and software productivity for platforms to support this next wave of research applications and speed deployment into new server-class products.

View at TechPowerUp Main Site