Maybe they should launch the original 3080 first? I really don't consider meeting less than 1% of the demand a real launch.

Nvidia was unable to meet demand for the 2080 Ti right up to the 3080 launch, and that is by no means an unusual thing... Part of this is the normal production-line ramp-up issues, but most of it is opportunists buying cards to resell.

The logical next step after DirectStorage and RTX-IO is graphics cards with resident non-volatile storage (i.e. SSDs on the card). I think there have already been some professional cards with onboard storage (though not leveraging tech like DirectStorage). You would install optimized games directly onto this resident storage, and the GPU would have its own downstream PCIe root complex to deal with it.

See the "Play Crysis on 3090" article:

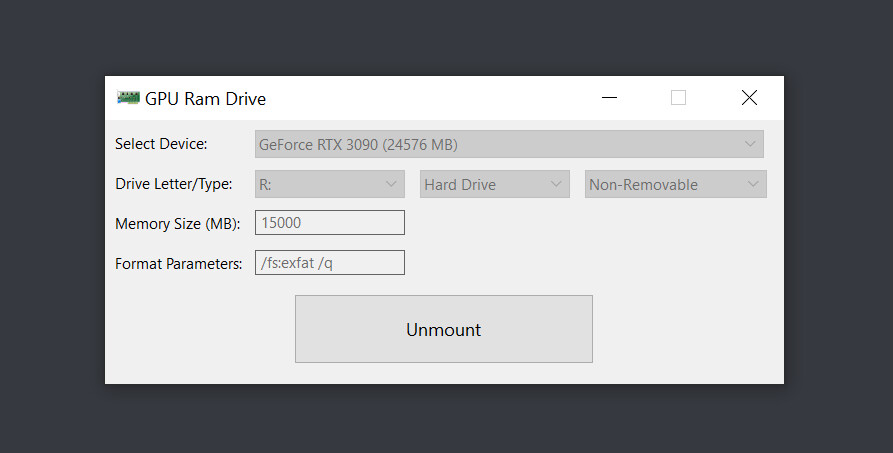

Let's skip ahead of any "Can it run Crysis" introductions for this news piece, and instead state it as it is: Crysis 3 can absolutely run when installed directly on a graphics card's memory subsystem. In this case, an RTX 3090 and its gargantuan 24 GB of GDDR6X memory were the playground for...

www.techpowerup.com

They should release the 3080 20GB ASAP.

I'd wait until we see the performance comparisons, not what MSI Afterburner or anything else says is being allocated (which is not the same as used). I want to see how fps is impacted. So far, with the 6xx, 7xx and 9xx series, there has been little observable impact between cards with different RAM amounts when performance was above 30 fps. We have seen an effect with poor console ports, and even illogical results where the % difference was larger at 1080p than at 1440p, which makes no sense. But no comparison I have read has been able to show a significant fps impact.

Where a card comes in an X RAM version and a 2X RAM version, we have seen various utilities report that somewhere between 1X and 2X of VRAM is being allocated (not necessarily used), but we have not seen a significant difference in fps when fps > 30. We have even seen games refuse to install on the X RAM card; yet after installing the game with the 2X RAM card and then switching to the X RAM version, it plays at the same fps with no impact on the user experience.

GTX 770 4GB vs 2GB Showdown - Page 3 of 4 - AlienBabelTech
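To make that comparison criterion concrete, here is a minimal Python sketch, assuming you have average fps numbers for the X and 2X VRAM versions of the same card. The 30 fps floor comes from the post; the 5% "significant" threshold and the function names are hypothetical choices for illustration, not an established benchmarking standard.

```python
from typing import Optional

PLAYABLE_FPS = 30.0     # floor from the post: only compare results above 30 fps
SIGNIFICANT_GAP = 5.0   # hypothetical threshold (in %) for a "significant" gap


def percent_gap(fps_small: float, fps_large: float) -> float:
    """Percent fps advantage of the 2X VRAM card over the X VRAM card."""
    if fps_small <= 0:
        raise ValueError("fps must be positive")
    return (fps_large - fps_small) / fps_small * 100.0


def vram_matters(fps_small: float, fps_large: float) -> Optional[bool]:
    """True/False when both cards are in the playable range, None otherwise."""
    if min(fps_small, fps_large) <= PLAYABLE_FPS:
        return None  # below the playable range the argument makes no claim
    return percent_gap(fps_small, fps_large) >= SIGNIFICANT_GAP
```

Feed it per-game averages from a review like the one linked above; a result of False across the board is the pattern the post describes.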

Why on earth would a 60-tier GPU in 2020 need 16GB of VRAM? Even 8GB is plenty for the types of games and settings that GPU will be capable of handling for its useful lifetime. Shader and RT performance will become a bottleneck at that tier long before 8GB of VRAM does. This is no 1060 3GB.

The 1060 3GB was purposely gimped with fewer shaders (the 6GB card has about 11% more) because, otherwise, it would have shown no impact from having half the VRAM. VRAM usage increases with resolution, so the 6% fps advantage that the 6GB card with its 11% more shaders had at 1080p should have widened significantly at 1440p. It did not, which shows the extra VRAM was of no consequence. Of the 2 games that showed more significant impacts, in one of them the performance was actually closer between the 3 GB and 6 GB cards at 1440p than at 1080p ... clearly this defiance of logic points to other factors being at play in that instance. The 1060 was a 1080p card ... if 3 GB was inadequate at 1080p, then it should have been a complete washout at 1440p, and it wasn't.
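The resolution argument can be put in numbers. Resolution-dependent buffers (render targets, not textures) scale with pixel count, and 1440p has 16/9, about 1.78x, the pixels of 1080p. The sketch also checks the ~11% shader figure (1280 vs 1152 CUDA cores for the GTX 1060 6GB and 3GB). The `gap_widens` helper and its 1-percentage-point tolerance are hypothetical, just a way of stating the post's test explicitly.

```python
RESOLUTIONS = {"1080p": (1920, 1080), "1440p": (2560, 1440)}

# GTX 1060 CUDA core counts: 6GB = 1280, 3GB = 1152
SHADER_RATIO = 1280 / 1152  # about 1.11, i.e. the 6GB card has ~11% more shaders


def pixel_ratio(low: str, high: str) -> float:
    """How many times more pixels the `high` resolution has than `low`."""
    (w_lo, h_lo), (w_hi, h_hi) = RESOLUTIONS[low], RESOLUTIONS[high]
    return (w_hi * h_hi) / (w_lo * h_lo)


def gap_widens(gap_low_res: float, gap_high_res: float,
               tolerance_pts: float = 1.0) -> bool:
    """If VRAM were the bottleneck, the % fps gap should grow at the higher
    resolution. tolerance_pts is a hypothetical noise margin in % points."""
    return gap_high_res - gap_low_res > tolerance_pts


print(f"1440p / 1080p pixels: {pixel_ratio('1080p', '1440p'):.2f}x")
print(f"6GB vs 3GB shaders:   +{(SHADER_RATIO - 1) * 100:.0f}%")
```

Starting from the 6% gap cited at 1080p, `gap_widens(6.0, gap_at_1440p)` should return True if the missing 3GB were the real limit; the post's point is that the reviews showed no such widening.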

3060 6GB: 3840 CUDA cores

3060 8GB: 4864 CUDA cores

There you have it: the 8GB version is roughly 30% faster, because it has about 27% more CUDA cores.
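The arithmetic behind that: 4864 / 3840 is about 1.27, so the 8GB configuration quoted above has roughly 27% more CUDA cores. A minimal sketch; treating fps as scaling at most linearly with core count (at equal clocks) is an assumption, since clocks and memory bandwidth also matter.

```python
def core_uplift_percent(cores_small: int, cores_large: int) -> float:
    """Percent more CUDA cores in the larger configuration."""
    return (cores_large / cores_small - 1) * 100


# CUDA core counts for the two 3060 configurations as quoted in the post
uplift = core_uplift_percent(3840, 4864)
print(f"8GB version has {uplift:.1f}% more CUDA cores")
# Assuming at-most-linear scaling with cores at equal clocks, this is a rough
# ceiling on the fps gain from the cores alone, independent of the extra VRAM.
```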

They have to cut the GPU down; otherwise it would be obvious that the extra VRAM has no impact on fps within the playable range.

At 4K, FS2020 will try to eat as much VRAM as possible (>11GB), but that doesn't translate to real performance.
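A toy model of that point: a game may reserve most of whatever VRAM it finds as a streaming cache, so monitoring tools report a bigger number on a bigger card, while the per-frame working set, which is what actually determines performance, stays the same. Everything here (the 90% reserve fraction, the 5000 MB working set, the function names) is a hypothetical illustration, not how FS2020 or any specific engine manages memory.

```python
RESERVE_FRACTION = 0.9  # hypothetical: engine reserves 90% of reported VRAM


def allocated_mb(vram_mb: int) -> int:
    """What a monitoring overlay would report in this toy model."""
    return int(vram_mb * RESERVE_FRACTION)


def fits_in_vram(working_set_mb: int, vram_mb: int) -> bool:
    """Toy rule: performance only suffers when the per-frame working set
    exceeds physical VRAM (ignores driver overhead and other apps)."""
    return working_set_mb <= vram_mb


WORKING_SET_MB = 5000  # hypothetical per-frame working set

for card_mb in (8192, 16384):
    print(f"{card_mb} MB card: allocated {allocated_mb(card_mb)} MB, "
          f"fits={fits_in_vram(WORKING_SET_MB, card_mb)}")
```

Both cards "fit", so in this model they render at the same speed even though the larger card reports roughly twice the allocation.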

RAM allocation is very different from RAM usage.

Video Card Performance: 2GB vs 4GB Memory - Puget Custom Computers

GTX 770 4GB vs 2GB Showdown - Page 3 of 4 - AlienBabelTech

Is 4GB of VRAM enough? AMD’s Fury X faces off with Nvidia’s GTX 980 Ti, Titan X | ExtremeTech

From the 2nd link:

"There is one last thing to note with

Max Payne 3: It would not normally allow one to set 4xAA at 5760×1080 with any 2GB card as it claims to require 2750MB. However, when we replaced the 4GB GTX 770 with the 2GB version, the game allowed the setting. And there were no slowdowns, stuttering, nor any performance differences that we could find between the two GTX 770s. "
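For scale, a rough sketch of how much memory the resolution-dependent render targets at 5760×1080 with 4xAA would take, under assumed formats: 4-byte RGBA8 colour and 4-byte depth/stencil per sample, plus one single-sample resolve target. These formats are assumptions; Max Payne 3's actual buffer layout is not known here. Under them, the render targets come to roughly 214 MiB, so most of the game's 2750MB figure would have to come from textures, geometry and other data rather than from the multisampled buffers themselves.

```python
BYTES_COLOR = 4   # assumption: RGBA8 colour per sample
BYTES_DEPTH = 4   # assumption: 24-bit depth + 8-bit stencil per sample
MiB = 1024 * 1024


def render_target_bytes(width: int, height: int, msaa_samples: int) -> int:
    """Multisampled colour + depth buffers plus one single-sample resolve target."""
    pixels = width * height
    msaa = pixels * msaa_samples * (BYTES_COLOR + BYTES_DEPTH)
    resolve = pixels * BYTES_COLOR
    return msaa + resolve


total = render_target_bytes(5760, 1080, 4)
print(f"5760x1080, 4xAA render targets: ~{total / MiB:.0f} MiB")
```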