And performed way worse at that power draw. Check your own review. 60 vs 52 fps 1% lows in GTA V at 1080p isn't a small difference; it's the gap between frame drops and stable FPS in that game, and overall there is a 15% performance gap.
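To put the 60 vs 52 fps 1% lows in perspective, here is a quick sketch of the arithmetic. It only uses the two numbers quoted above; the function names are made up for illustration and don't label which card is which.

```python
def relative_gap(fast_fps: float, slow_fps: float) -> float:
    """Return how much higher fast_fps is than slow_fps, as a percentage."""
    return (fast_fps / slow_fps - 1.0) * 100.0

def frame_time_ms(fps: float) -> float:
    """Convert frames per second into milliseconds per frame."""
    return 1000.0 / fps

# 1% low figures quoted above for GTA V at 1080p
faster_low, slower_low = 60.0, 52.0

gap = relative_gap(faster_low, slower_low)
extra_ms = frame_time_ms(slower_low) - frame_time_ms(faster_low)
print(f"1% low gap: {gap:.1f}%")               # 1% low gap: 15.4%
print(f"extra frame time: {extra_ms:.2f} ms")  # extra frame time: 2.56 ms
```

So the gap in the lows alone is roughly the same size as the overall gap, which is about 2.5 ms of extra frame time on the worst frames.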

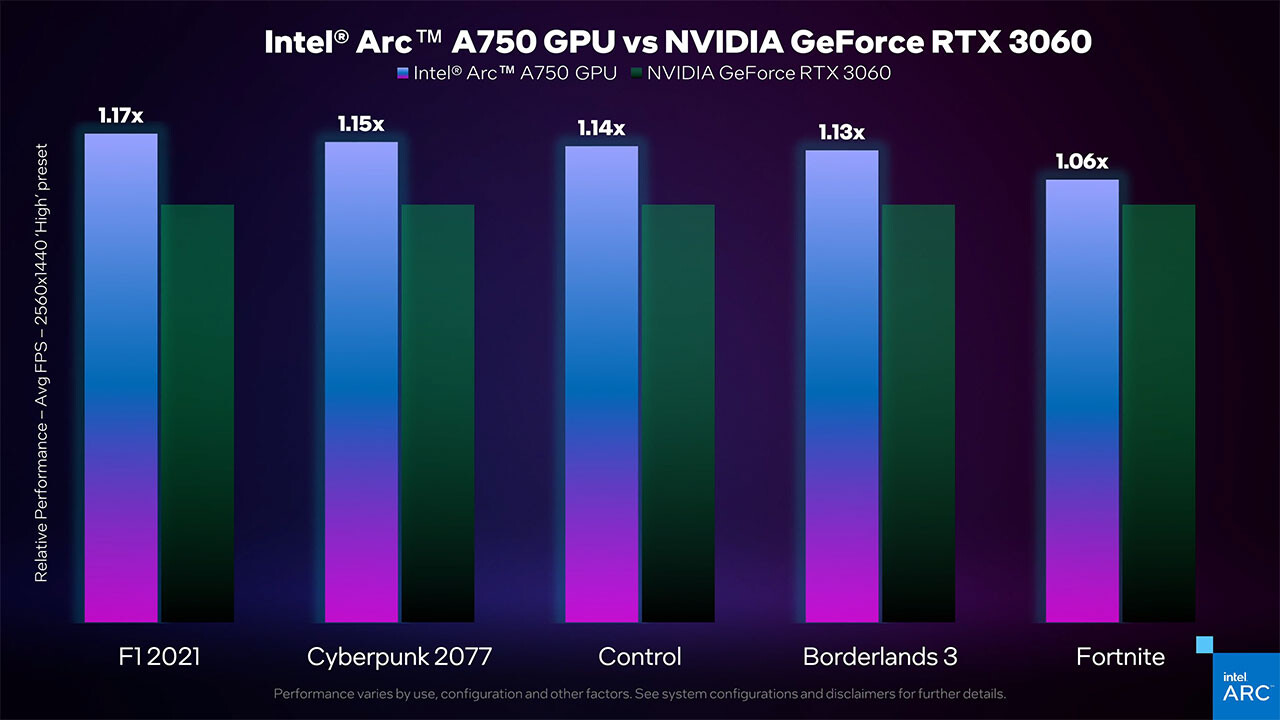

This persists throughout a broad range of games:


So great, it uses the same amount of power. It's also a stuttery GPU. The above differences are night and day. Before we praise Polaris, look at how close it comes to the 390X in the 1% lows. It's just a minor update to GCN, not much else - and a good one, in a relative sense: lows and averages definitely got closer together. But Nvidia was on a completely different level by then, with almost rock-solid frame delivery.

See, this is the real point, and it has emerged time and again over a few decades of watching the Nvidia/AMD battle:

power is everything. And that applies broadly. You need the graphical power, but you also need to run that at the lowest possible TDP. You need a stack that can scale and maintain efficiency.

As the node stalled at 28nm for a very long time, the efficiency battle began in earnest: after all, how do you differentiate when you're both on the same silicon? You can't hide behind a node deficit or lean on a node lead. What we saw from Kepler to Pascal was hot competition, because architecture was everything. AMD had an early success with GCN's 7950 & 7970 and basically rode that train until Hawaii XT, then even rebranded the same chip and kept selling it, because it was actually still competitive and super cheap by then.

AMD stayed on that train for far too long and fell two years behind Nvidia.

It's 2H 2022 and they still haven't caught up. They might have performance parity with Nvidia, but with a weaker feature set. Luckily, it's a feature set that isn't a must-have yet, but we've only had a solid DLSS alternative for a few months, for example.

Now, apply this lens to Team Blue. Arc is launching to compete with the upper-midrange performance we had (more than?) two years ago. And note: Turing was the first generation to take twice as long to release as we were used to, so at the 'normal' cadence of 1 to 1.5 years per generation, the gap would have been bigger. Arc does this with a grossly inefficient architecture, and the node advantage doesn't close the gap either. It uses a good 30-50% more power than a competitor for the same workload. Or it could use equal power, as in your 1060 comparison, and then perform a whole lot worse, dropping even further down the stack. On top of that, there is no history of driver support to rely on, and support isn't complete at launch.
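For what "30-50% more power for the same workload" means in efficiency terms, a rough sketch: at equal performance, relative perf/W is just performance ratio divided by power ratio. The 30% and 50% figures come from the paragraph above; the function name is hypothetical.

```python
def relative_perf_per_watt(perf_ratio: float, power_ratio: float) -> float:
    """Efficiency of card A relative to card B, given A/B performance and A/B power."""
    return perf_ratio / power_ratio

# Same performance (ratio 1.0), but 30% and 50% more power drawn
for extra_power in (0.30, 0.50):
    eff = relative_perf_per_watt(1.0, 1.0 + extra_power)
    print(f"+{extra_power:.0%} power at equal performance -> {eff:.0%} of the competitor's perf/W")
# +30% power at equal performance -> 77% of the competitor's perf/W
# +50% power at equal performance -> 67% of the competitor's perf/W
```

In other words, the competitor gets the same frames for roughly a quarter to a third less energy.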

It's literally repeating the same mistakes we saw not long ago, and we can't laugh in Intel's face because they're such a potential competitor? There is no potential! There never was, and many people called it, simply because they/we know how effin' hard this race really is. We've seen the two giants struggle. We've seen Raja fall into the very same holes he fell into at AMD/RTG.

I can honestly see just one saving grace for Intel: the currently exploding TDP budgets. They mean Intel can ride a solid architecture for longer than one generation just by sizing it up. But... you need a solid architecture first, and Arc is not it by design, because the primary product is Xe. As long as there is no absolute, near-fanatical devotion to a gaming GPU line, you can safely forget it. And this isn't news: Nvidia had already been trimming its feature set toward pure gaming chips for a decade, stacking one successful year upon another.

I pointed out your own review link and UserBenchmark, and now here we have another 10%, and that's not counting the 1% lows.