- Joined: Jun 22, 2014
- Messages: 465 (0.12/day)

| System Name | Desktop / "Console" |
|---|---|
| Processor | Ryzen 9800X3D / Ryzen 5800X |
| Motherboard | Asus Proart X870E / Asus X570-i |
| Cooling | EK AIO Elite 280 / Cryorig C1 |
| Memory | 64GB Corsair Vengeance DDR5-6000 CL30 / 16GB Crucial Ballistix DDR4-3600 CL16 |
| Video Card(s) | RTX 5090 FE / RTX 4090 FE |
| Storage | 1TB Samsung 980 Pro, 1TB Sabrent Rocket 4 Plus NVME / 1TB Sabrent Rocket 4 NVME, 1TB Intel 660P |
| Display(s) | Alienware AW3423DW / LG 65CX Oled |
| Case | Lian Li O11 Mini / Fractal Ridge |
| Audio Device(s) | Sony AVR, SVS speakers & subs / Marantz AVR, SVS speakers & subs |
| Power Supply | ROG Loki 1000 / ROG Loki 1000 |
| VR HMD | Quest 3 |

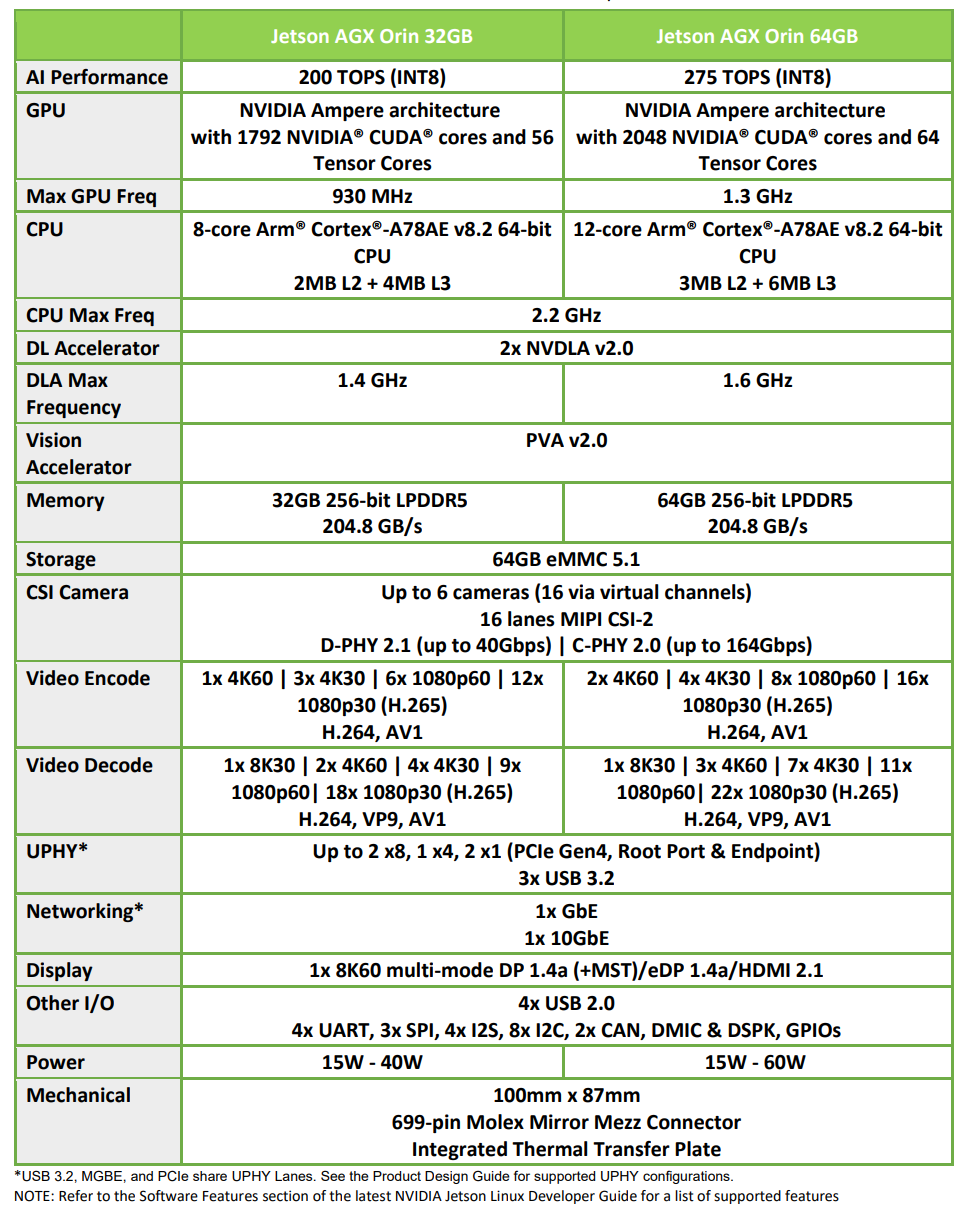

Lots of talk about ray tracing in the comments. RTX GPUs have CUDA cores, RT cores, and Tensor cores: three completely separate core types. RT cores handle ray tracing calculations; Tensor cores handle machine/deep learning, which is what runs DLSS. Orin has CUDA and Tensor cores but no RT cores. This is why the article talks about DLSS and does not mention RT anywhere.

If you are reading something that references NVIDIA TensorRT, the RT there stands for Runtime, not Ray Tracing.

NVIDIA TensorRT is a runtime library and optimizer for deep learning inference that delivers lower latency and higher throughput across NVIDIA GPU products. TensorRT enables customers to parse a trained model and maximize the throughput by quantizing models to INT8, optimizing use of the GPU memory and bandwidth by fusing nodes in a kernel, and selecting the best data layers and algorithms based on the target GPU.
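To give a rough feel for what "quantizing models to INT8" means, here is a toy sketch in plain Python. It is not TensorRT code and does not use any TensorRT API; the function names `quantize_int8` and `dequantize` and the sample weights are made up for illustration. It assumes simple symmetric per-tensor scaling, whereas a real toolchain picks its scales through calibration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization (illustrative assumption, not TensorRT's method).

    Maps each float to an integer in [-127, 127] using one shared scale.
    """
    max_abs = max(abs(v) for v in values)
    # Avoid division by zero when every value is 0.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the INT8 values and the shared scale."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only to make the arithmetic easy to follow.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

for original, approx in zip(weights, restored):
    print(f"{original:+.4f} -> {approx:+.4f}")
```

The point is just that each value ends up stored in 8 bits instead of 32, at the cost of a small rounding error, which is where the memory and bandwidth savings come from.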

And panning the camera looks awful at 30fps, of course. And tbh, the Switch would also be my #1 pick right now. PS5 just for a few games (esp. Gran Turismo). Xbox kinda makes zero sense if you already own a PC.
