T0@st

News Editor


The Barcelona Supercomputing Center (BSC) and the State University of New York (Stony Brook and Buffalo campuses) have pitted NVIDIA's relatively new CG100 "Grace" Superchip against several rival products in a "wide variety of HPC and AI benchmarks." Team Green marketing material has focused mainly on the overall GH200 "Grace Hopper" package—so it is interesting to see technical institutes concentrate on the company's "first true" server processor (ARM-based), rather than the ever popular GPU aspect. The Next Platform's article summarized the chip's internal makeup: "(NVIDIA's) Grace CPU has a relatively high core count and a relatively low thermal footprint, and it has banks of low-power DDR5 (LPDDR5) memory—the kind used in laptops but gussied up with error correction to be server class—of sufficient capacity to be useful for HPC systems, which typically have 256 GB or 512 GB per node these days and sometimes less."

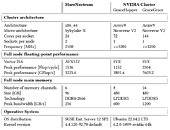

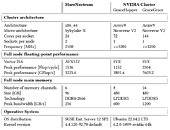

Benchmark results were revealed at last week's HPC Asia 2024 conference (in Nagoya, Japan)—Barcelona Supercomputing Center (BSC) and the State University of New York also uploaded their findings to the ACM Digital Library (link #1 & #2). BSC's MareNostrum 5 system contains an experimental cluster portion—consisting of NVIDIA Grace-Grace and Grace-Hopper superchips. We have heard plenty about the latter (in press releases), but the former is a novel concept—as outlined by The Next Platform: "Put two Grace CPUs together into a Grace-Grace superchip, a tightly coupled package using NVLink chip-to-chip interconnects that provide memory coherence across the LPDDR5 memory banks and that consumes only around 500 watts, and it gets plenty interesting for the HPC crowd. That yields a total of 144 Arm Neoverse "Demeter" V2 cores with the Armv9 architecture, and 1 TB of physical memory with 1.1 TB/sec of peak theoretical bandwidth. For some reason, probably relating to yield on the LPDDR5 memory, only 960 GB of that memory capacity and only 1 TB/sec of that memory bandwidth is actually available."

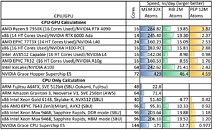

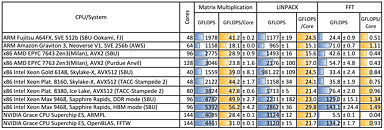

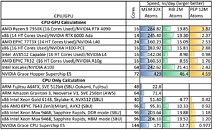

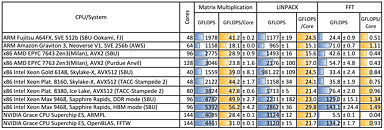

BSC's older MareNostrum 4 supercomputer is based on "nodes comprised of a pair of 24-core Skylake-X Xeon SP-8160 Platinum processors running at 2.1 GHz." The almost seven-year-old Team Blue-based system was bested by the NVIDIA-fortified MareNostrum 5: the latter's worst results were still 67% faster, while its best indicated a 4.49x performance advantage. The State University of New York team fielded a wider range of rival solutions against its own NVIDIA setup, which was tested in "Grace-Grace" (CPU-CPU pair) and "Grace-Hopper" (CPU-GPU pair) configurations. The competition included: Intel Sapphire Rapids and Ice Lake, AMD Milan, plus the ARM-based Amazon Graviton 3 and Fujitsu A64FX processors. Tom's Hardware checked SUNY's comparison data: "The Grace Superchip easily beat the Graviton 3, the A64FX, an 80-core Ice Lake setup, and even a 128-core configuration of Milan in all benchmarks. However, the Sapphire Rapids server with two 48-core Xeon Max 9468s stopped Grace's winning streak."

They continued: "Against Sapphire Rapids in HBM mode, Grace only won in three of the eight tests—though it was able to outperform in five tests when in DDR5 mode. It's a surprisingly mixed bag for Nvidia considering that Grace has 50% more cores and uses TSMC's more advanced 4 nm node instead of Intel's aging Intel 7 (formerly 10 nm) process. It's not entirely out of left field, though: Sapphire Rapids also beat AMD's EPYC Genoa chips for a spot in a MI300X-powered Azure instance, indicating that, despite Sapphire Rapid's shortcomings, it still has plenty of potency for HPC...On the other hand, NVIDIA might have a crushing victory in efficiency. The Grace Superchip is rated for 500 watts, while the Xeon Max 9468 is rated for 350 watts, which means two would have a TDP of 700 watts. The paper doesn't detail power consumption on either chip, but if we assume each chip was running at its TDP, then the comparison becomes very favorable for NVIDIA."
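For readers who want to check the arithmetic behind that quote, here is a rough Python sketch using only the figures cited above (144 Grace cores, 500 W for the Superchip, 48 cores and 350 W per Xeon Max 9468). The function and constant names are illustrative, and the idea that both systems run at TDP while delivering similar performance is an assumption carried over from the quoted reasoning, not a measured result.

```python
# Figures quoted in the article. Treating TDP as actual power draw is an
# assumption; the papers reportedly do not detail power consumption.
GRACE_SUPERCHIP_CORES = 144
GRACE_SUPERCHIP_TDP_W = 500
XEON_MAX_9468_CORES = 48
XEON_MAX_9468_TDP_W = 350
XEON_SOCKETS = 2


def compare():
    """Return core-count and TDP ratios for Grace-Grace vs. a dual Xeon Max 9468 node."""
    xeon_cores = XEON_MAX_9468_CORES * XEON_SOCKETS
    xeon_tdp_w = XEON_MAX_9468_TDP_W * XEON_SOCKETS
    return {
        "xeon_cores": xeon_cores,
        "xeon_tdp_w": xeon_tdp_w,
        # How many Grace cores there are per Xeon core in the comparison
        "core_ratio": GRACE_SUPERCHIP_CORES / xeon_cores,
        # Rated power of the Xeon pair relative to the Grace Superchip
        "tdp_ratio": xeon_tdp_w / GRACE_SUPERCHIP_TDP_W,
    }


if __name__ == "__main__":
    for key, value in compare().items():
        print(f"{key}: {value}")
```

The core ratio works out to 1.5 (the "50% more cores" in the quote), and the Xeon pair is rated at 1.4 times the Grace Superchip's power. If the two systems scored the same in a given test while drawing their rated TDP, that would put Grace at roughly 1.4 times the performance per watt; real power draw could differ considerably.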

The Next Platform believes that Team Green's CG100 server processor is truly bolstered by its onboard neighbor: "any CPU paired with the same Hopper GPU would probably do as well. On the CPU-only Grace-Grace unit, the Gromacs performance is almost as potent as a pair of 'Sapphire Rapids' Xeon Max Series CPUs. It is noteworthy that the HBM memory on this chip doesn't help that much for Gromacs. Hmmmm. Anyway, that is some food for thought about the Grace CPU and HPC workloads."

View at TechPowerUp Main Site | Source
