T0@st

News Editor

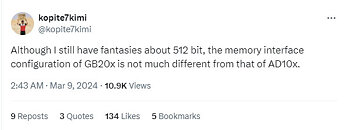

Earlier in the week it was revealed that NVIDIA had distributed next-gen AI GPUs to its most important ecosystem partners and customers; Dell's CEO expressed enthusiasm when discussing "Blackwell" B100 and B200 evaluation samples. Team Green's next-gen family of gaming GPUs has received less media attention in early 2024. A mid-February TPU report pointed to a rumored PCIe 6.0 CEM specification for upcoming RTX 50-series cards, but leaks have become uncommon since late last year. Top technology tipster kopite7kimi has broken the relative silence on Blackwell's gaming configurations. An early-hours tweet posits a slightly underwhelming scenario: "although I still have fantasies about 512 bit, the memory interface configuration of GB20x is not much different from that of AD10x."

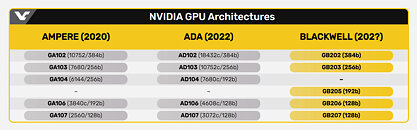

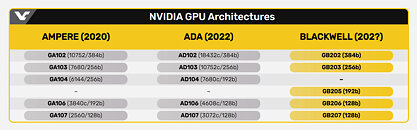

Past disclosures have hinted at next-gen NVIDIA gaming GPUs sporting memory interface configurations comparable to the current crop of "Ada Lovelace" models. The latest batch of insider information suggests that Team Green's next flagship GeForce RTX GPU, GB202, will stick with a 384-bit memory bus. The beefiest current-gen GPU, AD102, as featured in GeForce RTX 4090 graphics cards, is also specced with a 384-bit interface. A significant upgrade for GeForce RTX 50xx cards could arrive with a step up to next-gen GDDR7 memory; kopite7kimi reckons that top GPU designers will stick with 16 Gbit memory chip densities (2 GB per chip). JEDEC officially announced its "GDDR7 Graphics Memory Standard" a couple of days ago. VideoCardz has kindly assembled the latest batch of insider info into a cross-generation comparison table.
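For anyone wondering what a 384-bit bus with 16 Gbit chips works out to, here is a rough back-of-the-envelope sketch. It assumes each GDDR memory chip connects over a 32-bit interface and that one chip sits on each 32-bit slice of the bus (no clamshell layout). Neither assumption comes from the leak, and the function names are made up for illustration. The 21 Gbps per-pin rate in the usage lines is the RTX 4090's GDDR6X speed, used only as a familiar reference point; no GDDR7 speed for RTX 50-series cards is claimed here.

```python
# Rough memory-configuration arithmetic for a GPU memory bus.
# Assumption (not from the leak): each GDDR chip uses a 32-bit interface,
# with one chip per 32-bit slice of the bus (no clamshell mode).
CHIP_INTERFACE_BITS = 32


def memory_capacity_gb(bus_width_bits: int, chip_density_gbit: int) -> float:
    """Total VRAM in GB for a given bus width and per-chip density."""
    if bus_width_bits % CHIP_INTERFACE_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    chip_count = bus_width_bits // CHIP_INTERFACE_BITS
    # Density is given in gigabits, so divide by 8 to get gigabytes.
    return chip_count * chip_density_gbit / 8


def bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s for a given per-pin data rate in Gbps."""
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # Rumored GB202 layout: 384-bit bus, 16 Gbit (2 GB) chips.
    print(memory_capacity_gb(384, 16))    # 12 chips x 2 GB
    # The 512-bit "fantasy" from the tweet, same chip density.
    print(memory_capacity_gb(512, 16))    # 16 chips x 2 GB
    # Reference point only: 384-bit at the RTX 4090's 21 Gbps GDDR6X.
    print(bandwidth_gb_per_s(384, 21.0))
```

With the bus width and chip density unchanged, capacity stays where the RTX 4090 is today under these assumptions, so any uplift from GDDR7 would have to come from a higher per-pin data rate rather than from a wider bus.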

View at TechPowerUp Main Site | Source
