Just a few weeks ago, AMD invited us to Barcelona as part of a roundtable, to share their vision for the future of the company, and to get our feedback. On site were prominent AMD leaders, including Phil Guido, Executive Vice President & Chief Commercial Officer, and Jack Huynh, Senior VP & GM, Computing and Graphics Business Group. AMD is making big changes to how it approaches technology, shifting its focus from hardware development to software, APIs, and AI experiences. Software is no longer just a complement to hardware; it's the core of modern technological ecosystems, and AMD is finally aligning its strategy accordingly.

The major difference between AMD and NVIDIA is that AMD is a hardware company that makes software on the side to support its hardware; while NVIDIA is a software company that designs hardware on the side to accelerate its software. This is about to change, as AMD is making a pivot toward software. They believe that they now have the full stack of computing hardware—all the way from CPUs, to AI accelerators, to GPUs, to FPGAs, to data-processing and even server architecture. The only frontier left for AMD is software.

Fast Forward to Barcelona

We walked into the room in Barcelona expecting the usual fluff talk about how AI PC is the next big thing, and how it's all hands on deck to capture market share; we've heard that before, from pretty much everyone at Computex Taiwan. Well, we did get a substantial talk on how AMD's new Ryzen AI 300 series "Strix Point" processors are the tip of the spear for the company with AI PCs, and how it thinks that it has a winning combination of hardware to see it through. What we didn't expect was a glimpse into how much AMD is changing internally to gain competitiveness in this new world, which led to a stunning disclosure by the company.

AMD has "tripled our software engineering, and are going all-in on the software." This means not only bringing in more people, but also allowing people to change roles: "we moved some of our best people in the organization to support" these teams. When this transformation is complete, the company will more closely resemble industry contemporaries such as Intel and NVIDIA. AMD commented that in the past they were "silicon first, then we thought about SDKs, toolchains and then the ISVs (software development companies)." They continued: "Our shift in strategy is to talk to ISVs first...to understand what the developers want enabled," which is a fundamental change to how new processors are created. I really like this quote: "the old AMD would just chase speeds and feeds. The new AMD is going to be AI software first, we know how to do silicon." I agree that this is the right path forward.

AMD of the Old: Why Hardware-first is Bad for a Hardware Company in IT

AMD's hardware-first approach to tech has met with limited market success. Despite having a CPU microarchitecture that at least matches Intel, the company barely commands a quarter of the market (both server and client processors combined); and despite its gaming GPUs being contemporary, it barely has a sixth of that market. This is not for a lack of performance: AMD makes some very powerful CPUs and GPUs, which are able to keep competitors on their toes. The number-one problem with AMD's technology has been its comparatively weak engagement with the software vendor ecosystem. Making the best use of the hardware's exclusive and unique capabilities takes first-party software technologies: APIs, developer tools, resources, developer networking, and optimization.

For example, Radeon GPUs had tessellation capabilities at least two generations ahead of NVIDIA, but developers only exploited them after Microsoft standardized tessellation in the DirectX 11 API. The same happened with Mantle and DirectX 12. In both cases, the X-factor NVIDIA enjoys is a software-first approach, the way it engages with developers, and more importantly, its install base (over 75% of the discrete GPU market share). There have been several such examples of AMD silicon packing exotic accelerators across its hardware stack that haven't been properly exploited by the software community. The reason is usually the same: AMD has been a hardware-first company.

Why is Tesla a hotter stock than General Motors? Because General Motors is an automobile company that happens to use some technology in its vehicles; whereas Tesla is a tech company that happens to know automobile engineering. Tesla vehicles are software-defined devices that can transport you around. Tesla's approach to transportation has been to understand what consumers want or might need from a technology standpoint, and then build the hardware to achieve it. In the end, you know Tesla for its savvy cars, much in the same way that you know NVIDIA for GPUs that "just work," and like Tesla, NVIDIA's revenues are overwhelmingly made up of hardware sales, despite the company being software-first, or experience-first. Another example of exactly this is Apple, which has built a huge ecosystem of software and services designed to work extremely well together, but which also locks people into its "walled garden," enabling huge profits for the company in the process.

NVIDIA's Weakness

This is not to say that AMD has neglected software; far from it. The company has played nice-guy by keeping much of its software base open-source, through initiatives such as GPUOpen and ROCm, which are great resources for software developers, and we definitely love the support for open source. It's just that AMD has not treated software as its main product, the thing that makes people buy its hardware and brings in the revenue. AMD is aware of this and wants "to create a unified architecture across our CPU and RDNA, which will let us simplify [the] software." This looks like an approach similar to Intel's oneAPI, which makes a lot of sense, but it will be a challenging project. NVIDIA's advantage here is that it has just one kind of accelerator, the GPU, which runs CUDA: a single API for all developers to learn, which enables them to solve a huge range of computing challenges on hardware ranging from $200 to $30,000.

On the other hand, this is also a weakness of NVIDIA, and an advantage for AMD. AMD has a rich IP portfolio of compute solutions, ranging from classic CPUs and GPUs to FPGAs and XDNA AI engines (through the Xilinx acquisition). Now it just needs to bring them together, exposing a unified computing interface that makes it easy to strategically shift workloads between these core types to optimize for performance, cost, efficiency, or a combination of these. Such a capability would let the company sell customers a single combined accelerator system composed of components like a CPU, GPU and specialized FPGA(s), similar to how you buy an iPhone and not a screen, processor, 5G modem and battery to combine on your own.

Enabling Software Developers

If you've sat through NVIDIA's GTC sessions like we have, you'll know that barely 5-10% of the showtime is spent talking about NVIDIA hardware (their latest AI GPUs or accelerators up and down the stack). Most of the talk is about first-party software solutions: problems to solve, solutions, software, APIs, developer tools, collaboration tools, bare-metal system software, and only then the hardware. AMD has started its journey toward exactly this.

They are now talking to the major software companies, like Microsoft, Adobe and OpenAI, to learn what their plans are and what they need from a future hardware generation. AMD's roadmaps now show the company's plans several years into the future, so that partners can learn what AMD is creating and build software products that make better use of these new features.

Market Research

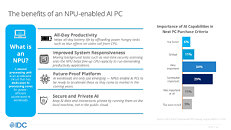

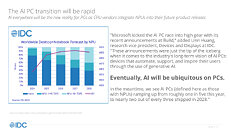

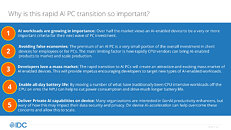

We got a detailed presentation from market research firm IDC, which AMD contracted to study the short- and medium-term future of AI PCs, and the notion that PCs with native AI acceleration will bring a disruptive change to computing. This has happened before: when bricks became iPhones, when networks became the Internet, and when text-based prompts were banished for GUIs. To be honest, generative AI has taken on a life of its own, and is playing a crucial role in the mainstreaming of this new tech, but the current implementation relies on cloud-based acceleration. Running everything in the cloud comes with huge power usage, and expensive NVIDIA GPUs are used in the process. Are people willing to buy a whole new device just to get some of this acceleration onto their devices for privacy and latency? This remains to be seen. Even with 50 TOPS, the NPUs of AMD "Strix" and Intel "Lunar Lake" won't exactly zip through image generation, but they do make text-based LLMs viable, along with certain audiovisual effects such as Teams webcam background replacement, noise suppression, and even live translation.

AMD is aware of the challenges, especially after Intel (Meteor Lake) and Microsoft (Copilot) spammed us with "AI" everywhere, while huge chunks of the userbase have yet to see a convincing argument for it. Privacy and security are on AMD's radar, and you need to "demonstrate that you actually increase productivity per workload. If you're asking people to spend more money [... you need to prove that] you can save hours per week....that could be worth the investment, [but] will require a massive education of the end-users." There is also a goal to "build the most innovative and disruptive form factors" for notebooks, so that people are like "wow, there's something new here." Specifically in the laptop space, they are watching Qualcomm's Windows on Arm initiative very closely and want to make sure to "launch a product only when it's ready," and to also "address price-points below $1000."

Where Does AMD Begin in 2024?

What's the first stop in AMD's journey? It's to grow its market share both on the client side with AI PCs, and on the data-center side with its AI GPUs. For AI PCs, the company believes it has a winning product with the Ryzen AI 300 series "Strix Point" mobile processors, which it thinks are in a good position to ramp through 2024. What definitely helps is that "Strix Point" is based on a relatively mature TSMC 4 nm foundry node, on which AMD can secure volumes, whereas Intel's "Lunar Lake" and upcoming "Arrow Lake" are both expected to use TSMC's 3 nm foundry node. ASUS has already announced a mid-July media event where it plans to launch dozens of AI PCs, all of which are powered by Ryzen AI 300 series chips and meet Microsoft Copilot+ requirements. Over on the data-center side, AMD's MI300X accelerator is receiving spillover demand from competing NVIDIA H100 GPUs, and the company plans to continue investing in the software side of this solution, to bring in large orders from leading AI cloud-compute providers running popular AI applications.

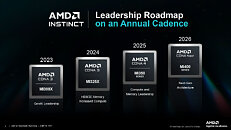

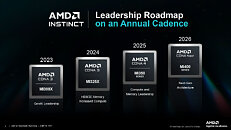

The improvements to the software ecosystem will take some time. AMD is looking at a three-to-five-year timeframe, and to support that, it has greatly increased its software engineering headcount, as mentioned before. It has also accelerated its hardware development: "we are going to launch a new [Radeon] Instinct product every 12 months," which is a difficult task, but one that helps it react more quickly to changes in the software market and its demands. On the CPU side, the company "now has two CPU teams, one does n+1 [next generation] the other n+2 [two generations ahead]," which reminds us a bit of Intel's tick-tock strategy, though that was more focused on silicon manufacturing, of course. When asked about Moore's Law and its demise, the company commented that it is exploring "AI in chip design to go beyond place and route," and that "yesterday's war is more rasterization, more ray tracing, more bandwidth." The challenges of the next generations are not only about hardware; software support and nurturing relations with software developers play a crucial role. AMD even thinks that the eternal tug-of-war between CPU and GPU could shift in the future: "we can't think of AI as a checkbox/gimmick feature like USB; AI could become the hero."

What's heartening though is that AMD has made the bold move of mobilizing resources toward hiring software talent over acquiring another hardware company, like it usually does when its wallet is full. We expect this to pay off in the coming years.

View at TechPowerUp Main Site
