TheLostSwede

News Editor


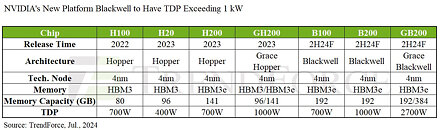

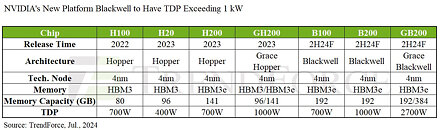

With the growing demand for high-speed computing, more effective cooling solutions for AI servers are gaining significant attention. TrendForce's latest report on AI servers reveals that NVIDIA is set to launch its next-generation Blackwell platform by the end of 2024. Major cloud service providers (CSPs) are expected to start building AI server data centers based on this new platform, potentially driving the penetration rate of liquid cooling solutions to 10%.

Air and liquid cooling systems to meet higher cooling demands

TrendForce reports that the NVIDIA Blackwell platform will officially launch in 2025, replacing the current Hopper platform and becoming the dominant solution for NVIDIA's high-end GPUs, accounting for nearly 83% of all high-end products. High-performance AI server models like the B200 and GB200 are designed for maximum efficiency, with individual GPUs consuming over 1,000 W. HGX models will house 8 GPUs each, while NVL models will support 36 or 72 GPUs per rack, significantly boosting the growth of the liquid cooling supply chain for AI servers.

TrendForce highlights the increasing TDP of server chips, with the B200 chip's TDP reaching 1,000 W, making traditional air cooling solutions inadequate. The TDP of the GB200 NVL36 and NVL72 complete rack systems is projected to reach 70 kW and nearly 140 kW, respectively, necessitating advanced liquid cooling solutions for effective heat management.
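To put the rack figures next to the per-GPU figure, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above and assumes every GPU draws a flat 1,000 W, which the article gives as the B200's TDP and as a floor ("over 1,000 W") for these GPUs. The function names are made up for illustration, and the article does not break down where the rest of the rack power goes.

```python
# Figures quoted by TrendForce in the article; the rack values are projections.
GPU_TDP_W = 1_000                          # assumed flat per-GPU draw (B200 TDP)
RACK_TDP_KW = {"NVL36": 70, "NVL72": 140}  # "nearly 140 kW" rounded up for NVL72
GPUS_PER_RACK = {"NVL36": 36, "NVL72": 72}


def rack_power_per_gpu_w(model: str) -> float:
    """Total rack TDP divided by the number of GPUs in the rack, in watts."""
    return RACK_TDP_KW[model] * 1000 / GPUS_PER_RACK[model]


def gpu_share_of_rack(model: str) -> float:
    """Fraction of the rack TDP covered by the GPUs alone at GPU_TDP_W each."""
    return GPUS_PER_RACK[model] * GPU_TDP_W / (RACK_TDP_KW[model] * 1000)


if __name__ == "__main__":
    for model in RACK_TDP_KW:
        print(
            f"{model}: {rack_power_per_gpu_w(model):.0f} W of rack TDP per GPU, "
            f"GPUs alone cover {gpu_share_of_rack(model):.0%} of the rack TDP"
        )
```

Under these assumptions both rack types come out the same: a bit over 1.9 kW of rack TDP per GPU, with the GPUs themselves accounting for roughly half of it.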

TrendForce observes that the GB200 NVL36 architecture will initially utilize a combination of air and liquid cooling solutions, while the NVL72, due to higher cooling demands, will primarily employ liquid cooling.

TrendForce identifies five major components in the current liquid cooling supply chain for GB200 rack systems: cold plates, coolant distribution units (CDUs), manifolds, quick disconnects (QDs), and rear door heat exchangers (RDHx).

The CDU is the critical system responsible for regulating coolant flow so that rack temperatures stay within the limits implied by the designated TDP, preventing component damage. Vertiv is currently the main CDU supplier for NVIDIA AI solutions, with Chicony, Auras, Delta, and CoolIT undergoing continuous testing.
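For a feel of what "regulating coolant flow" means in numbers, the textbook heat balance P = ṁ · c_p · ΔT gives the mass flow needed to carry away a given heat load. The sketch below is only an illustration: it assumes plain water as the coolant, a 10 K temperature rise across the rack, and that the whole 140 kW ends up in the liquid loop. None of these assumptions come from the TrendForce report, and real systems may use other coolants and design points.

```python
# Approximate specific heat of liquid water; an assumption, since the article
# does not say which coolant the CDUs circulate.
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0


def required_flow_kg_per_s(
    heat_load_w: float,
    delta_t_k: float,
    specific_heat: float = WATER_SPECIFIC_HEAT_J_PER_KG_K,
) -> float:
    """Mass flow needed to absorb heat_load_w with a coolant rise of delta_t_k.

    Rearranged from the steady-state heat balance P = m_dot * c_p * delta_T.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (specific_heat * delta_t_k)


if __name__ == "__main__":
    # 140 kW is the NVL72 rack figure from the article; 10 K is a made-up rise.
    flow = required_flow_kg_per_s(140_000, 10.0)
    print(f"{flow:.2f} kg/s, roughly {flow * 60:.0f} L/min of water")
```

Halving the allowed temperature rise doubles the required flow, which is the kind of trade-off a CDU has to manage.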

GB200 shipments expected to reach 60,000 units in 2025, making Blackwell the mainstream platform and accounting for over 80% of NVIDIA's high-end GPUs

In 2025, NVIDIA will target CSPs and enterprise customers with diverse AI server configurations, including the HGX, GB200 Rack, and MGX, with expected shipment ratios of 5:4:1. The HGX platform will seamlessly transition from the existing Hopper platform, enabling CSPs and large enterprise customers to adopt it quickly. The GB200 rack AI server solution will primarily target the hyperscale CSP market. TrendForce predicts NVIDIA will introduce the NVL36 configuration at the end of 2024 to quickly enter the market, with the more complex NVL72 expected to launch in 2025.

TrendForce forecasts that in 2025, GB200 NVL36 shipments will reach 60,000 racks, with Blackwell GPU usage between 2.1 and 2.2 million units.
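The rack and GPU figures line up if each NVL36 rack is counted at its full 36 GPUs. A quick check in Python (the helper name is made up for illustration; the inputs are the forecast numbers above):

```python
def gpus_for_racks(racks: int, gpus_per_rack: int) -> int:
    """Total GPUs in a number of identically populated racks."""
    return racks * gpus_per_rack


if __name__ == "__main__":
    # 60,000 NVL36 racks at 36 GPUs each, per the forecast above.
    total = gpus_for_racks(60_000, 36)
    print(f"{total:,} GPUs")
    # The forecast range for Blackwell GPU usage is 2.1 to 2.2 million units.
    print("within forecast range:", 2_100_000 <= total <= 2_200_000)
```

The product, 2.16 million, sits inside the quoted 2.1 to 2.2 million range.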

However, there are several variables in the adoption of the GB200 Rack by end customers. TrendForce points out that the NVL72's power consumption of around 140 kW per rack requires sophisticated liquid cooling solutions, which makes deployment challenging. Additionally, liquid-cooled rack designs are more suitable for new CSP data centers but involve complex planning processes. CSPs might also avoid being tied to a single supplier's specifications and opt for HGX or MGX models with x86 CPU architectures, or expand their self-developed ASIC AI server infrastructure for lower costs or specific AI applications.

View at TechPowerUp Main Site | Source
