The MLCommons consortium on Wednesday posted MLPerf Inference v4.1 benchmark results for popular AI inferencing accelerators available in the market, across brands that include NVIDIA, AMD, and Intel. AMD's Instinct MI300X accelerators proved competitive with NVIDIA's "Hopper" H100 series AI GPUs. AMD also used the opportunity to showcase the kind of AI inferencing performance uplifts customers can expect from its next-generation EPYC "Turin" server processors powering these MI300X machines. "Turin" features "Zen 5" CPU cores, sporting a 512-bit FPU datapath, and improved performance in AI-relevant 512-bit SIMD instruction sets, such as AVX-512 and VNNI. The MI300X, on the other hand, banks on the strengths of its memory sub-system, FP8 data format support, and efficient KV cache management.

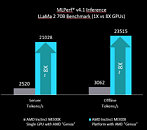

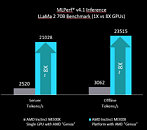

The MLPerf Inference v4.1 benchmark focused on the 70 billion-parameter LLaMA2-70B model. AMD's submissions included machines featuring the Instinct MI300X, powered by the current EPYC "Genoa" (Zen 4), and next-gen EPYC "Turin" (Zen 5). The GPUs are backed by AMD's ROCm open-source software stack. The benchmark evaluated inference performance using 24,576 Q&A samples from the OpenOrca dataset, with each sample containing up to 1024 input and output tokens. Two scenarios were assessed: the offline scenario, focusing on batch processing to maximize throughput in tokens per second, and the server scenario, which simulates real-time queries with strict latency limits (TTFT ≤ 2 seconds, TPOT ≤ 200 ms). Together, the two scenarios test the chip's mettle in both high-throughput and low-latency workloads.
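To make the two server-scenario limits concrete, here is a minimal Python sketch that checks a single query against them. Only the two thresholds come from the text; the `QueryTiming` type, its field names, and the way TPOT is derived from the timings are assumptions made for illustration, and the sketch does not model how the benchmark aggregates results across queries.

```python
from dataclasses import dataclass

# Limits quoted in the text for the server scenario.
TTFT_LIMIT_S = 2.0    # time to first token, in seconds
TPOT_LIMIT_S = 0.200  # time per output token, in seconds


@dataclass
class QueryTiming:
    """Hypothetical per-query timing record (names are illustrative)."""
    first_token_s: float  # seconds from request to the first output token
    total_s: float        # seconds from request to the last output token
    output_tokens: int    # number of tokens generated


def time_per_output_token(q: QueryTiming) -> float:
    """Average time per token after the first one (an assumed definition)."""
    if q.output_tokens <= 1:
        return 0.0
    return (q.total_s - q.first_token_s) / (q.output_tokens - 1)


def meets_server_limits(q: QueryTiming) -> bool:
    """True if this one query stays within both latency limits."""
    return (q.first_token_s <= TTFT_LIMIT_S
            and time_per_output_token(q) <= TPOT_LIMIT_S)


if __name__ == "__main__":
    fast = QueryTiming(first_token_s=1.5, total_s=21.4, output_tokens=200)
    slow = QueryTiming(first_token_s=2.5, total_s=10.0, output_tokens=100)
    print(meets_server_limits(fast), meets_server_limits(slow))
```

The offline scenario has no such per-query check; there, only aggregate tokens per second matters.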

AMD's first submission (4.1-0002) sees a server featuring 2P EPYC 9374F "Genoa" processors and 8x Instinct MI300X accelerators. Here, the machine clocks 21,028 tokens/sec in the server test, compared to 21,605 tokens/sec scored by an NVIDIA machine combining 8x NVIDIA H100 with a Xeon processor. In the offline test, the AMD machine scores 23,514 tokens/sec compared to 24,525 tokens/sec of the NVIDIA+Intel machine. AMD also tested the 8x MI300X with a pair of EPYC "Turin" (Zen 5) processors of comparable core-counts, and gained on NVIDIA, with 22,021 server tokens/sec, and 24,110 offline tokens/sec. AMD claims that it is achieving near-linear scaling in performance between 1x MI300X and 8x MI300X, which speaks for AMD's platform I/O and memory management chops.
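The gaps are easier to judge as percentages. The short Python sketch below normalizes the figures quoted above against the NVIDIA machine; the dictionary keys and the `relative_pct` helper are illustrative names, not anything from MLPerf or AMD.

```python
# Throughput figures (tokens/sec) as quoted in the text.
RESULTS = {
    "nvidia_reference": {"server": 21605, "offline": 24525},
    "mi300x_genoa": {"server": 21028, "offline": 23514},
    "mi300x_turin": {"server": 22021, "offline": 24110},
}


def relative_pct(system: str, baseline: str, scenario: str) -> float:
    """Throughput of `system` as a percentage of `baseline` in one scenario."""
    return 100.0 * RESULTS[system][scenario] / RESULTS[baseline][scenario]


if __name__ == "__main__":
    for system in ("mi300x_genoa", "mi300x_turin"):
        for scenario in ("server", "offline"):
            pct = relative_pct(system, "nvidia_reference", scenario)
            print(f"{system:13s} {scenario:8s} {pct:6.1f}% of NVIDIA")
```

On these numbers the "Genoa" machine lands at roughly 97% (server) and 96% (offline) of the NVIDIA result, while the "Turin" machine reaches about 102% and 98%.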

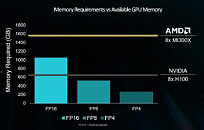

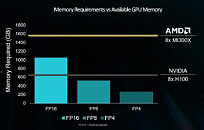

AMD's results bode well for future versions of the model, such as LLaMA 3.1 with its gargantuan 405 billion parameters. Here, each MI300X's 192 GB of HBM3 with 5.3 TB/s of memory bandwidth comes in really handy. This earned AMD a partnership with Meta to power LLaMA 3.1 405B. An 8x MI300X blade packs 1.5 TB of memory with over 42 TB/s of memory bandwidth, with Infinity Fabric handling the interconnectivity. A single server is able to accommodate the entire LLaMA 3.1 405B model using the FP16 data type.
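The single-server claim can be sanity-checked with back-of-the-envelope arithmetic. The Python sketch below assumes 2 bytes per FP16 parameter and decimal gigabytes, and counts model weights only; KV cache, activations, and runtime overhead are deliberately left out, so the headroom figure is an upper bound rather than a measured value.

```python
GPUS_PER_SERVER = 8
HBM_PER_GPU_GB = 192         # per-MI300X capacity quoted in the text
BANDWIDTH_PER_GPU_TBS = 5.3  # per-MI300X bandwidth quoted in the text
PARAMS = 405e9               # LLaMA 3.1 405B parameter count
BYTES_PER_PARAM_FP16 = 2     # FP16 stores each parameter in 2 bytes


def weights_gb(params: float, bytes_per_param: int) -> float:
    """Size of the model weights in decimal gigabytes (weights only)."""
    return params * bytes_per_param / 1e9


def server_totals() -> tuple[int, float]:
    """Aggregate HBM capacity (GB) and bandwidth (TB/s) of one 8-GPU server."""
    return (GPUS_PER_SERVER * HBM_PER_GPU_GB,
            GPUS_PER_SERVER * BANDWIDTH_PER_GPU_TBS)


if __name__ == "__main__":
    capacity_gb, bandwidth_tbs = server_totals()
    model_gb = weights_gb(PARAMS, BYTES_PER_PARAM_FP16)
    print(f"capacity  : {capacity_gb} GB")
    print(f"bandwidth : {bandwidth_tbs:.1f} TB/s")
    print(f"FP16 model: {model_gb:.0f} GB")
    print(f"headroom  : {capacity_gb - model_gb:.0f} GB before KV cache etc.")
```

Under these assumptions the FP16 weights come to 810 GB against 1,536 GB of aggregate HBM3, which is consistent with the claim that one server can hold the whole model.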

View at TechPowerUp Main Site
