
Intel on Tuesday announced the ultra core-count (UCC) variant of its "Granite Rapids" server processor microarchitecture, introducing new Xeon 6 series SKUs with P-core counts as high as 128-core/256-thread per socket. "Granite Rapids" uses "Redwood Cove" performance cores. These are newer than the "Raptor Cove" cores powering "Emerald Rapids," and a client version of these cores powers the Core Ultra 1-series "Meteor Lake" processors. The server version of "Redwood Cove" comes with 112 KB of L1 cache (64 KB L1I and 48 KB L1D) and 2 MB of dedicated L2 cache. It is optimized for the mesh interconnect core layout on the three compute tiles making up "Granite Rapids-UCC," and each core comes with a 3.93 MB segment of the tile's 168 MB shared L3 cache.
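The cache figures above can be cross-checked with a little arithmetic. The sketch below is only illustrative: the variable names are made up for this example, and it assumes the per-core L3 segment is simply the package's total L3 divided by the 128 enabled cores of the top SKU, which is an inference from the article's numbers rather than something Intel states.

```python
# Figures quoted in the article for "Granite Rapids-UCC" / "Redwood Cove".
L1I_KB = 64              # L1 instruction cache per core
L1D_KB = 48              # L1 data cache per core
L3_PER_TILE_MB = 168     # shared L3 per compute tile
COMPUTE_TILES = 3
ENABLED_CORES = 128      # top SKU (Xeon 6980P)

l1_total_kb = L1I_KB + L1D_KB                   # L1 per core
l3_total_mb = L3_PER_TILE_MB * COMPUTE_TILES    # L3 per package

# Assumption: the per-core segment is total L3 divided by enabled cores.
l3_per_core_mb = l3_total_mb / ENABLED_CORES

print(f"L1 per core:     {l1_total_kb} KB")
print(f"L3 per package:  {l3_total_mb} MB")
print(f"L3 per core:     {l3_per_core_mb:.4f} MB")
```

Under that assumption the result is 3.9375 MB per core, which matches the quoted 3.93 MB if the figure is truncated rather than rounded.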

Perhaps the biggest change between the client and server variants of "Redwood Cove" is the server variant's support for the AMX-FP16 and AVX512-FP16 instruction sets. The 128-core Xeon 6 6980P processor is based on the "Granite Rapids-UCC" package, which has three compute tiles, each with 44 cores and a 4-channel memory interface. The three compute tiles are cache-coherent, so a core on any of the three tiles can benefit from the processor's full 12-channel DDR5 memory interface. The package also has two SoC tiles, each with a 48-lane PCIe Gen 5 or CXL 2.0 root complex, making up a total of 96 lanes. The processor has 6 UPI links for multi-socket machines, and supports up to a 2P configuration per system, for a maximum core-count of 256 P-cores. Each of the three compute tiles is built on the Intel 3 foundry node, while the two SoC tiles are built on the Intel 7 node.
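As a quick sanity check, the package totals follow directly from the per-tile figures. This is a minimal sketch with constant names invented for the example. Note that three 44-core tiles add up to 132 cores, so the 128-core 6980P would leave four cores unused; that is arithmetic on the article's numbers, not a statement from Intel.

```python
# Per-tile figures quoted in the article.
COMPUTE_TILES = 3
CORES_PER_TILE = 44
MEM_CHANNELS_PER_TILE = 4
SOC_TILES = 2
PCIE_LANES_PER_SOC_TILE = 48

ENABLED_CORES_6980P = 128
THREADS_PER_CORE = 2
MAX_SOCKETS = 2          # 2P is the largest supported configuration

physical_cores = COMPUTE_TILES * CORES_PER_TILE
memory_channels = COMPUTE_TILES * MEM_CHANNELS_PER_TILE
pcie_lanes = SOC_TILES * PCIE_LANES_PER_SOC_TILE

# Inferred, not stated in the article: cores present on die but not enabled.
unused_cores = physical_cores - ENABLED_CORES_6980P

system_cores = MAX_SOCKETS * ENABLED_CORES_6980P
system_threads = system_cores * THREADS_PER_CORE

print(f"Cores on package:  {physical_cores} ({unused_cores} not enabled on the 6980P)")
print(f"Memory channels:   {memory_channels}")
print(f"PCIe/CXL lanes:    {pcie_lanes}")
print(f"2P system:         {system_cores} cores / {system_threads} threads")
```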

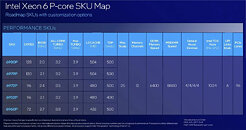

For its server processors, Intel does not use the same power rating scheme as its client processors (base power, turbo power). Since it has to stick to the industry standards that guide data-center architects, it uses a flat TDP rating. The lineup is led by the Xeon 6980P, with its 128-core/256-thread core-count, a 2.00 GHz base frequency, 3.20 GHz all-core boost, 3.90 GHz maximum boost frequency, 504 MB of shared L3 cache, and 500 W TDP. The 6979P is next up, with a 120-core/240-thread configuration, 2.10 GHz base frequency, 3.20 GHz all-core boost, and 3.90 GHz maximum boost frequency. Interestingly, it has the same 504 MB L3 cache size as the 6980P, as well as its 500 W TDP rating.

The Xeon 6972P is an interesting SKU, as its 96-core/192-thread config directly squares off against the top AMD EPYC "Genoa" part. It ticks at 2.40 GHz base, 3.50 GHz all-core boost, and 3.90 GHz maximum boost. The L3 cache gets a slight haircut to a respectable 480 MB, but the TDP remains at 500 W. The 6952P has the same 96-core/192-thread config, but with lower clock speeds and TDP: 2.10 GHz base, 3.20 GHz all-core boost, and 3.90 GHz maximum boost, with the TDP reduced to 400 W.

The Xeon 6960P should appeal to the compute server market, with its balance of core counts and clock speeds. It comes with a 72-core/144-thread configuration, but the highest base and all-core clock speeds in the lineup: a 2.70 GHz base frequency and 3.80 GHz all-core boost, with the same 3.90 GHz maximum boost as the other models. The L3 cache size is 432 MB, while the TDP has been raised to 500 W to support the clock speeds.
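To compare the five SKUs side by side, the quoted specs can be collected into a small table and a few ratios derived from it. The sketch below is illustrative only: the `XeonSku` class and its fields are invented for this example, the 6952P's L3 size is left as `None` because the article gives no figure for it, and "watts per core" is just TDP divided by core count, not a measured per-core power draw.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class XeonSku:
    """Hypothetical record type holding the specs quoted in the article."""
    model: str
    cores: int
    base_ghz: float
    all_core_ghz: float
    max_ghz: float
    l3_mb: Optional[int]   # None where the article gives no figure
    tdp_w: int

    @property
    def threads(self) -> int:
        return self.cores * 2   # two threads per P-core

    @property
    def watts_per_core(self) -> float:
        return self.tdp_w / self.cores

    @property
    def l3_per_core_mb(self) -> Optional[float]:
        return None if self.l3_mb is None else self.l3_mb / self.cores


SKUS = [
    XeonSku("6980P", 128, 2.0, 3.2, 3.9, 504, 500),
    XeonSku("6979P", 120, 2.1, 3.2, 3.9, 504, 500),
    XeonSku("6972P", 96, 2.4, 3.5, 3.9, 480, 500),
    XeonSku("6952P", 96, 2.1, 3.2, 3.9, None, 400),  # L3 not stated
    XeonSku("6960P", 72, 2.7, 3.8, 3.9, 432, 500),
]

for sku in SKUS:
    l3 = "n/a" if sku.l3_per_core_mb is None else f"{sku.l3_per_core_mb:.2f} MB"
    print(f"{sku.model}: {sku.cores}C/{sku.threads}T, "
          f"{sku.watts_per_core:.2f} W/core, L3 per core {l3}")
```

Seen this way, the 6960P has the largest TDP and L3 budget per core of the five, which fits its positioning as the highest-clocked part.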

All five models mentioned above are 2P capable, have 12-channel DDR5 memory interfaces, and support native memory speeds of DDR5-6400 using conventional RDIMMs, or DDR5-8800 using MRDIMMs. All SKUs also have 96 PCIe Gen 5 or CXL 2.0 lanes, and receive the same on-package accelerators (fixed-function hardware to accelerate popular kinds of server applications), which include DSA (Data Streaming Accelerator), IAA (In-Memory Analytics Accelerator), QAT (QuickAssist Technology), and DLB (Dynamic Load Balancer).
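The memory speeds translate into theoretical peak bandwidth per socket as follows. This is a rough sketch, assuming a 64-bit (8-byte) data path per channel with ECC bits excluded; the function name is made up for the example, and real-world throughput will be lower than these peak figures.

```python
# Assumption: 64 data bits (8 bytes) per channel per transfer, ECC excluded.
BYTES_PER_TRANSFER = 8
CHANNELS = 12   # 12-channel DDR5 interface per socket, per the article


def peak_bandwidth_gbs(mega_transfers_per_s: int, channels: int = CHANNELS) -> float:
    """Theoretical peak bandwidth in GB/s (decimal) for a given DDR5 data rate."""
    return mega_transfers_per_s * BYTES_PER_TRANSFER * channels / 1000


rdimm_gbs = peak_bandwidth_gbs(6400)    # DDR5-6400 RDIMM
mrdimm_gbs = peak_bandwidth_gbs(8800)   # DDR5-8800 MRDIMM
uplift_pct = (mrdimm_gbs / rdimm_gbs - 1) * 100

print(f"DDR5-6400 RDIMM:  {rdimm_gbs:.1f} GB/s per socket")
print(f"DDR5-8800 MRDIMM: {mrdimm_gbs:.1f} GB/s per socket")
print(f"MRDIMM uplift:    {uplift_pct:.1f}%")
```

Under these assumptions, the 12-channel interface peaks at 614.4 GB/s with RDIMMs and 844.8 GB/s with MRDIMMs, a 37.5% increase.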

View at TechPowerUp Main Site


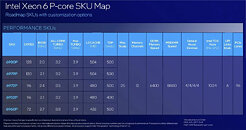
