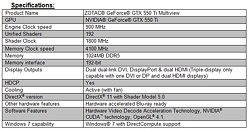

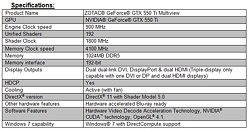

ZOTAC International, a leading innovator and the world's largest channel manufacturer of graphics cards, motherboards and mini-PCs, today expands the GeForce Multiview line-up with the addition of the ZOTAC GeForce GTX 550 Ti Multiview. The ZOTAC GeForce GTX 550 Ti Multiview is the latest mainstream graphics card capable of delivering a seamless triple-display computing experience.

"ZOTAC believes multi-monitor computing is the future of desktop computing. Triple displays are the sweet spot where productivity and the gaming experience drastically improves without overwhelming users with too many monitors," said Carsten Berger, marketing director, ZOTAC International. "With our latest ZOTAC GeForce GTX 550 Ti Multiview, we are able to deliver a quality visual experience that combines Microsoft DirectX 11 compatibility, NVIDIA CUDA technology and triple simultaneous displays at a mainstream price point."

The ZOTAC GeForce GTX 550 Ti Multiview is powered by the latest NVIDIA GeForce GTX 550 Ti graphics processor, with 192 lightning-fast unified shaders paired with 1GB of GDDR5 memory. The combination delivers stunning visual quality while maintaining class-leading performance-per-watt.

It's time to play with triple displays and the ZOTAC GeForce GTX 550 Ti Multiview.

View at TechPowerUp Main Site