Raevenlord

News Editor

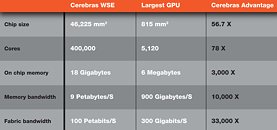

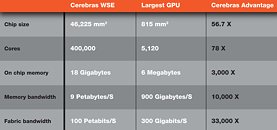

Cerebras has introduced the world to its CS-1 system, which houses the company's (and, at the same time, the industry's) most powerful monolithic accelerator. The CS-1 is an integrated solution the size of 15 industry-standard rack units, and packs everything from the Wafer Scale Engine to its cooling systems. The CS-1 consumes 20 kW of power: 15 kW goes to the chip, a full 4 kW is dedicated solely to the cooling subsystem (fans, pumps, and the heat exchanger), and 1 kW is lost to power supply inefficiencies. Power supply modules and cooling subsystems are redundant and hot-swappable if need be - you can imagine the computational value lost with each millisecond of downtime in such a system.
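For a quick sanity check on those power figures, here is a minimal sketch that only re-does the arithmetic quoted above. The names (`budget_kw`, `shares`, `psu_efficiency`) are made up for illustration, and the "efficiency" value is simply what the quoted 1 kW loss implies, not a figure from Cerebras.

```python
# Power figures as quoted in the article, in kW.
TOTAL_KW = 20
budget_kw = {"chip": 15, "cooling": 4, "psu_losses": 1}


def shares(budget, total):
    """Return each component's share of the total power draw, in percent."""
    return {name: 100 * kw / total for name, kw in budget.items()}


# The three quoted components should account for the full 20 kW.
assert sum(budget_kw.values()) == TOTAL_KW

power_shares = shares(budget_kw, TOTAL_KW)
for name, pct in power_shares.items():
    print(f"{name}: {pct:.0f}% of total draw")

# Implied power supply efficiency (assumption: the 1 kW loss is the only
# conversion loss, so 19 of 20 kW reach the chip and the cooling hardware).
psu_efficiency = (TOTAL_KW - budget_kw["psu_losses"]) / TOTAL_KW
print(f"implied PSU efficiency: {psu_efficiency:.0%}")
```

In other words, three quarters of the draw reaches the chip, a fifth goes to cooling, and the remainder is conversion loss.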

The CS-1 system offers 12x 100 GbE connections for pairing with other, traditional compute systems, and the CS-1 is also scalable - multiple systems can be made to work in tandem, multiplying processing power by as many units as are integrated, with the entire setup being addressable as a single homogeneous system. Power delivery is made directly into core clusters (remember, the chip packs 400,000 cores). Already deployed at the Argonne National Laboratory, crunch time on the CS-1 is being used to power through cancer research (what an amazing addition to WCG this would be, right?) and black hole research.
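To put the networking figure in perspective, a rough sketch of the aggregate line rate follows. It assumes all 12 links run at the full 100 Gb/s and ignores protocol overhead, and the function name `aggregate_gbit` is hypothetical. The multi-system number is a naive upper bound, not a measured result.

```python
# Link count and speed as quoted in the article.
LINKS_PER_SYSTEM = 12
GBIT_PER_LINK = 100  # 100 GbE line rate, in Gb/s


def aggregate_gbit(systems=1):
    """Raw aggregate line rate in Gb/s for a number of CS-1 systems.

    Assumption: every link runs at full line rate, no protocol overhead.
    """
    return systems * LINKS_PER_SYSTEM * GBIT_PER_LINK


one_system = aggregate_gbit()
print(f"one CS-1: {one_system} Gb/s = {one_system / 1000} Tb/s "
      f"= {one_system / 8} GB/s")

# Naive upper bound for four systems working in tandem.
print(f"four CS-1s: {aggregate_gbit(4)} Gb/s")
```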

View at TechPowerUp Main Site

and sucks down power like it's going out of fashion