- Joined: Oct 9, 2007
- Messages: 47,177 (7.56/day)
- Location: Hyderabad, India

| System Name | RBMK-1000 |
|---|---|
| Processor | AMD Ryzen 7 5700G |
| Motherboard | ASUS ROG Strix B450-E Gaming |
| Cooling | DeepCool Gammax L240 V2 |
| Memory | 2x 8GB G.Skill Sniper X |
| Video Card(s) | Palit GeForce RTX 2080 SUPER GameRock |
| Storage | Western Digital Black NVMe 512GB |
| Display(s) | BenQ 1440p 60 Hz 27-inch |
| Case | Corsair Carbide 100R |
| Audio Device(s) | ASUS SupremeFX S1220A |
| Power Supply | Cooler Master MWE Gold 650W |
| Mouse | ASUS ROG Strix Impact |
| Keyboard | Gamdias Hermes E2 |
| Software | Windows 11 Pro |

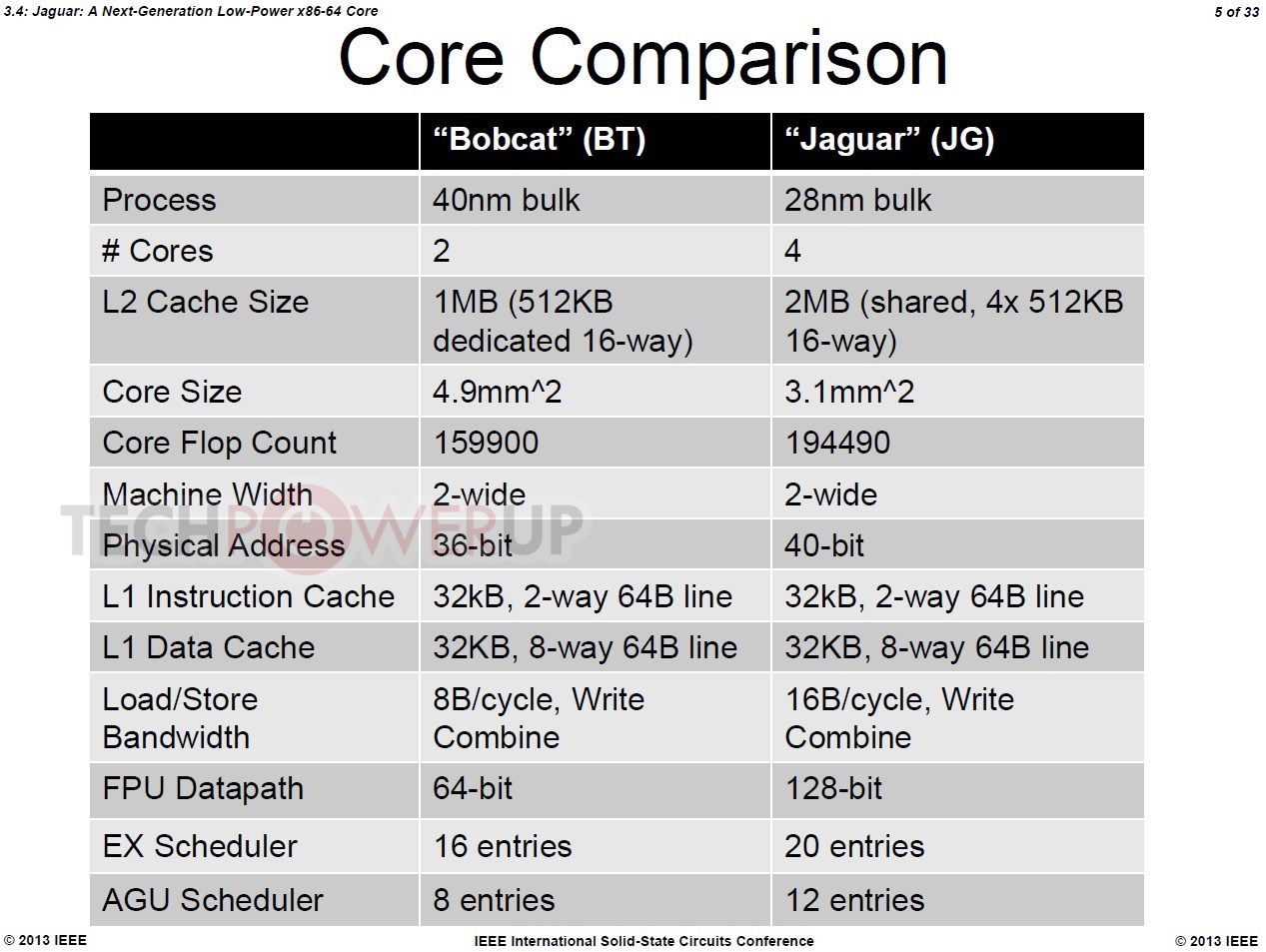

The bulk of AMD's 4th generation Ryzen desktop processors will consist of "Vermeer," a high core-count socket AM4 processor and successor to the current-generation "Matisse." These chips combine up to two "Zen 3" CCDs with a cIOD (client I/O controller die). While the maximum core count of each chiplet isn't known, they will implement the "Zen 3" microarchitecture, which reportedly does away with the CCX so that all cores on the CCD share a single large L3 cache. This is expected to improve inter-core latencies. AMD's generational IPC uplift efforts could also include improving bandwidth between the various on-die components (something we saw signs of in the "Zen 2" based "Renoir"). The company is also expected to leverage a newer 7 nm-class silicon fabrication node at TSMC (either N7P or N7+) to increase clock speeds - or so we thought.

An Igor's Lab report points to the possibility of AMD gunning for efficiency, letting IPC gains rather than clock speeds carry the bulk of Vermeer's competitiveness against Intel's offerings. The report decodes OPNs (ordering part numbers) of two upcoming Vermeer parts, one 8-core and the other 16-core. While the 8-core part has some generational clock speed increases (by around 200 MHz on the base clock), the 16-core part has lower max boost clock speeds than the 3950X. Then again, the OPNs reference the A0 revision, which could mean that these are engineering samples meant to help AMD's ecosystem partners (think motherboard or memory vendors) build their products around these processors, and that the retail product could come with higher clock speeds after all. We'll find out in September, when AMD is expected to debut its 4th generation Ryzen desktop processor family, around the same time NVIDIA launches GeForce "Ampere."

View at TechPowerUp Main Site
