Gigabyte RTX 3060 Ti EAGLE Graphics Cards Put on Display... By Bosnian Retailer

CPU Infotech, a Bosnian retailer of computer hardware, recently posted a photo of its latest inventory entries on Facebook. The photo showcased the newly received Gigabyte RTX 3060 Ti EAGLE graphics cards, one of Gigabyte's designs for this particular SKU. The RTX 3060 Ti EAGLE features a dual-slot, dual-fan cooler design that's the smallest seen on any Ampere graphics card to date. The retailer says the inventory should go on sale soon, and all publicly available information points towards a December 2nd release date for the RTX 3060 Ti.

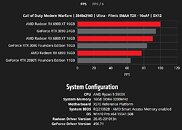

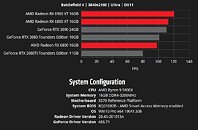

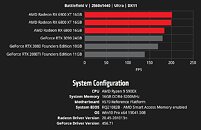

The RTX 3060 Ti is supposed to beat NVIDIA's previous RTX 2080 SUPER graphics card in performance whilst costing $399, a little over half of that card's $699 launch price. This should make it one of the most interesting performance-per-dollar graphics cards in NVIDIA's lineup. The RTX 3060 Ti is reportedly based on the same 8 nm "GA104" silicon as the RTX 3070, with further cuts. It features 38 out of the 48 available streaming multiprocessors on "GA104". This amounts to 4,864 "Ampere" CUDA cores, 152 tensor cores, and 38 "Ampere" RT cores. The memory configuration is unchanged from the RTX 3070, which translates to 8 GB of 14 Gbps GDDR6 memory across a 256-bit wide memory interface, with 448 GB/s of memory bandwidth. This marks the first time in years NVIDIA has launched a Ti model before the regular-numbered SKU in a given series, showcasing just how intense AMD competition is expected to be.
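
For readers who want to check the math, the reported figures follow from the SM count and memory configuration. The short Python sketch below reproduces them; the per-SM unit counts (128 CUDA cores, 4 tensor cores, 1 RT core) are our assumption based on how other Ampere GeForce parts are configured, and the function and constant names are purely illustrative.

```python
# Per-SM unit counts: assumed from other Ampere GeForce parts,
# not stated in the leak itself.
CUDA_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4
RT_CORES_PER_SM = 1


def derive_specs(sm_count, data_rate_gbps, bus_width_bits):
    """Derive headline specs from SM count and memory configuration."""
    return {
        "cuda_cores": sm_count * CUDA_CORES_PER_SM,
        "tensor_cores": sm_count * TENSOR_CORES_PER_SM,
        "rt_cores": sm_count * RT_CORES_PER_SM,
        # Gbps per pin times bus width in bits, divided by 8 bits per byte
        "bandwidth_gb_s": data_rate_gbps * bus_width_bits / 8,
    }


# Reported RTX 3060 Ti configuration: 38 SMs, 14 Gbps GDDR6, 256-bit bus
specs = derive_specs(sm_count=38, data_rate_gbps=14, bus_width_bits=256)
print(specs)
```

Under those assumptions, the output matches the reported 4,864 CUDA cores, 152 tensor cores, 38 RT cores, and 448 GB/s of memory bandwidth.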
