Monday, March 16th 2015

NVIDIA GeForce GTX TITAN-X Specs Revealed

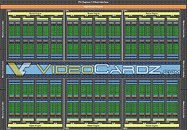

NVIDIA's GeForce GTX TITAN-X, unveiled last week at GDC 2015, is shaping up to be a beast, at least on paper. According to a leaked architecture block diagram of the GM200 silicon, the GTX TITAN-X appears to max out every component available on the 28 nm GM200 chip on which it is based. While keeping the same essential component hierarchy as the GM204, the GM200 (and with it the GTX TITAN-X) features six graphics processing clusters holding a total of 3,072 CUDA cores, based on the "Maxwell" architecture.
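As a rough sketch of how that hierarchy multiplies out, the snippet below assumes the GM204 layout of four Maxwell SMMs per graphics processing cluster and 128 CUDA cores per SMM. Neither figure is stated in the leak, so treat both as assumptions; `shader_config` is a hypothetical helper for illustration.

```python
# Sketch of the Maxwell shader hierarchy (assumed values, not from the leak):
# 4 SMMs per graphics processing cluster (GPC), as on GM204,
# and 128 CUDA cores per SMM.
SMM_PER_GPC = 4
CORES_PER_SMM = 128


def shader_config(gpcs: int) -> tuple[int, int]:
    """Return (SMM count, CUDA-core count) for a given number of GPCs."""
    smms = gpcs * SMM_PER_GPC
    return smms, smms * CORES_PER_SMM


print(shader_config(6))  # GM200 / GTX TITAN-X: (24, 3072)
print(shader_config(4))  # GM204 / GTX 980:     (16, 2048)
```

Under these assumptions, six clusters land exactly on the 3,072 CUDA cores quoted for the GM200.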

With "Maxwell" GPUs, TMU count is derived as CUDA core count / 16, giving us a count of 192 TMUs. Other specs include 96 ROPs and a 384-bit wide GDDR5 memory interface holding 12 GB of memory, using 24x 4 Gb memory chips. The core is reportedly clocked at 1002 MHz, with a GPU Boost frequency of 1089 MHz. The memory is clocked at 7012 MHz (GDDR5-effective), yielding a memory bandwidth of 336 GB/s. NVIDIA will use a lossless texture-compression technology to improve bandwidth utilization. The chip's TDP is rated at 250W. The card draws power from a combination of 6-pin and 8-pin PCIe power connectors. Display outputs include three DisplayPort 1.2, one HDMI 2.0, and one dual-link DVI.
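The derived figures in this paragraph follow from simple arithmetic. The sketch below reproduces them from the quoted inputs only; the variable names are illustrative, and "7012 MHz (GDDR5-effective)" is read as 7012 million transfers per second per pin of the memory bus.

```python
# Back-of-the-envelope check of the derived TITAN-X figures quoted above.
CUDA_CORES = 3072
BUS_WIDTH_BITS = 384
CHIP_COUNT = 24
CHIP_DENSITY_GBIT = 4          # 4 Gb per GDDR5 chip
EFFECTIVE_RATE_MTPS = 7012     # "7012 MHz (GDDR5-effective)", read as MT/s per pin

# Maxwell rule of thumb from the text: one TMU per 16 CUDA cores.
tmus = CUDA_CORES // 16

# Total capacity: chips * gigabits per chip, converted to gigabytes.
capacity_gb = CHIP_COUNT * CHIP_DENSITY_GBIT / 8

# Bandwidth: transfers/s per pin * number of pins / 8 bits per byte, in GB/s.
bandwidth_gbs = EFFECTIVE_RATE_MTPS * 1e6 * BUS_WIDTH_BITS / 8 / 1e9

print(tmus, capacity_gb, round(bandwidth_gbs, 1))  # 192 12.0 336.6
```

The 336 GB/s quoted in the leak is this ~336.6 GB/s result rounded down.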

Source: VideoCardz

55 Comments on NVIDIA GeForce GTX TITAN-X Specs Revealed

And stupidly expensive...

Many older video cards, even from lower performance segments, can run it perfectly fine.

The question is not whether it can run the aging Crysis, but what new titles it will be able to run. :)

We are very soon going to move to newer, more advanced manufacturing processes, so the Titan X will become obsolete sooner rather than later. ;)

But...

Just look at the launch price of the state-of-the-art top dog, the fastest graphics card at the time of its launch, the Radeon HD 5870, and guess what?! Its launch price was set at $379.

For comparison, NVIDIA has always been worse on prices: at the time of the 8800 Ultra launch (when there was no competition at all), its price was set north of $830, while the 8800 GTX was $600-650.

So, if you think that those money-grabbing bastards need to charge you $1000, then you are making a big mistake, and nothing good is going to happen to you, since you really are ready to waste your money, throw it away, or however else you want to describe it.

Sure, it might not come first, or even for a while, but the fact that they are (seemingly) rushing it really puts a clamp on Titan's main function (scaling beyond the limitations of 4GB at 1440p, and 6GB's conceivable inadequacy at 4K). If the mainstream GM200/Fiji parts can overclock well, which, given their memory setups (less memory, and in AMD's case more power-efficient memory), they should, it really takes the wind out of Titan's sails.

When I saw the 'leaked benchmarks' of Titan overclocked (1222 MHz), the first thing I did was subtract the power usage of 6GB of RAM. What you end up with is a part that *should* overclock to ~1400 MHz*. Doing the math, one can assume the 980 Ti (with conceivably three fewer units) ends up a similar part in the end, pretty much separated by stock performance, core power efficiency, and memory buffer. It also just so happens that this should be enough core performance (Titan at stock, 980 Ti overclocked) to max out settings at 1440p, or, when overclocked (in Titan's case because of its buffer), to be strong enough in an SLI configuration to run even demanding games at 4K.

*This fits with NVIDIA's philosophy of raising Maxwell clocks by around 20%. 1 V should be very power efficient (and could yield a clock of around 1222 MHz), while a lesser part could use voltage (in place of more memory) up to the power-leakage curve of ~1.163 V (1.163 × 1.2 ≈ 1.40, i.e. ~1400 MHz).
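The footnote's arithmetic can be reproduced under one reading of it: a baseline of about 1000 MHz at 1.0 V, a ~20% Maxwell clock uplift, and achievable clocks scaling linearly with voltage. That linear model is the commenter's heuristic as interpreted here, not how silicon actually behaves, so the sketch below only restates the estimate; `estimated_clock_mhz` is a hypothetical helper.

```python
# Restates the commenter's overclock estimate under assumed inputs:
#   - ~1000 MHz baseline at 1.0 V (assumption, near the 1002 MHz base clock),
#   - ~20% Maxwell clock uplift,
#   - clocks scaling linearly with core voltage (a heuristic, not physics).
BASELINE_MHZ = 1000
MAXWELL_UPLIFT = 1.2


def estimated_clock_mhz(voltage: float) -> float:
    """Estimated max clock under the linear voltage-scaling assumption."""
    return BASELINE_MHZ * MAXWELL_UPLIFT * voltage


print(round(estimated_clock_mhz(1.0)))    # 1200, near the 1222 MHz overclock
print(round(estimated_clock_mhz(1.163)))  # 1396, the "~1400 MHz" figure
```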

At any rate, the target is well known; what's left to see is how AMD performs. If they can clock their less-optimized, more compute-heavy core to scale games at 4K with 8GB in CrossFire, 1440p *should* be fine. If they can, there is room for AMD to swoop in and grab market share from the 980 (390), 980 8GB (390 8GB), '980 Ti' 6GB (4GB for ~1440p) and Titan (CrossFire 8GB and 4K), all with one chip, and conceivably at much lower prices.

TL;DR: So yeah, I find Titan the least exciting part of the upcoming product launches.

In your hell-market world, the company prices it 'as freely as it likes', the customer says 'I will not buy it', and the company just does nothing or, if you are really lucky, lowers the price to a certain extent.

Or, in other words: the market is not free, because you don't reach a deal acceptable to both sides, only to the corporation.

This is called corporate fascism, and it has nothing to do with anything being free for people.

free mar·ket

Of course, "free market" is far from being ideal and has severe drawbacks.

Still loving those output choices as well!

You should also remember that, ultimately, a GPU is a luxury item bought with disposable income. A gaming GPU is not essential to your health and well-being. You can believe and argue that nVidia is gouging you on prices, but at the end of the day, they are gouging you on the price of a TOY, which is all a gaming GPU really is. I think you may want to remember that before throwing around words like fascism, fascists and discrimination.

videocardz.com/55146/amd-radeon-r9-390x-possible-specifications-and-performance-leaked

HBM 1.5, I guess? Well, that explains that. So, is that 2GB chips with an added set, or 8-Hi stacks with a double-width link? Either way, it sounds like the original implementation couldn't carry that much bandwidth, so they doubled up. The next generation (HBM2) will apparently have a single link rated for 256 GB/s per stack (as expected).
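Per-stack HBM bandwidth is the interface width times the per-pin data rate, divided by 8 bits per byte. The sketch below assumes a 1024-bit interface per stack, roughly 1 Gb/s per pin for first-generation HBM, and 2 Gb/s per pin for HBM2; the 2048-bit case models the "double-width link" speculated about above and is purely hypothetical, as is the helper name.

```python
# Per-stack HBM bandwidth in GB/s = interface width (bits) * per-pin rate (Gb/s) / 8.
# Assumed inputs: 1024-bit interface per stack; ~1 Gb/s per pin (HBM1),
# 2 Gb/s per pin (HBM2).
def stack_bandwidth_gbs(width_bits: int, gbps_per_pin: float) -> float:
    return width_bits * gbps_per_pin / 8


print(stack_bandwidth_gbs(1024, 1.0))  # 128.0, first-generation HBM
print(stack_bandwidth_gbs(1024, 2.0))  # 256.0, HBM2 single link
print(stack_bandwidth_gbs(2048, 1.0))  # 256.0, hypothetical double-width HBM1 link
```

Under these assumptions, doubling the link width at HBM1 speeds reaches the same per-stack figure as a single HBM2 link.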

Also, I bet the difficult decision was knowing they couldn't keep it within 300 W. Personally, I am happy: having 375 W, an extra 75 W that surely won't all be needed for the extra 4GB of RAM, makes this product super competitive (clocks have a better chance of reaching their full potential if the card can be kept cool).
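The 300 W and 375 W figures match in-spec PCIe power budgets: 75 W from the x16 slot, 75 W per 6-pin and 150 W per 8-pin connector. Assuming (this is not stated in the comment) that the rumored card uses two 8-pin connectors, the budgets work out as below; `board_power_limit` is a hypothetical helper.

```python
# In-spec PCIe power limits per source, in watts.
CONNECTOR_WATTS = {"slot": 75, "6-pin": 75, "8-pin": 150}


def board_power_limit(*aux: str) -> int:
    """Slot power plus each auxiliary connector's limit."""
    return CONNECTOR_WATTS["slot"] + sum(CONNECTOR_WATTS[c] for c in aux)


print(board_power_limit("6-pin", "8-pin"))  # 300, the TITAN-X arrangement
print(board_power_limit("8-pin", "8-pin"))  # 375, assumed dual 8-pin card
```

The 75 W gap between the two configurations is the extra headroom the comment refers to.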

Edit: Okay, I think I understand the dual-link interposer now.

Also, it just goes to show how much better a product you can make when you do your homework and double up (okay, vias and interposer links are different, but still... I can never resist a Fermi joke).

I wonder if the 8GB version will compete with the 980 Ti on price.

(Sorry for not posting this in an appropriate thread; it seemed relevant, considering this is the latest 'big chips' post.)