Tuesday, January 31st 2017

Backblaze's 2016 HDD Failure Stats Revealed: HGST the Most Reliable

Backblaze has just published its HDD failure rate statistics, updated with 2016's Q4 and full-year analysis. These 2016 results join the data the company has been collecting and collating since April 2013, shedding some light on the most and least reliable manufacturers. A total of 1,225 drives failed in 2016, which means the drive failure rate for 2016 was just 1.95 percent, an improvement over the 2.47 percent that died in 2015 and miles below the 6.39 percent that hit the garbage bin in 2014.

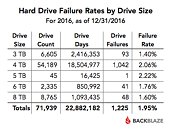

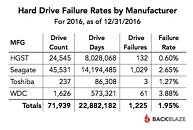

Organizing 2016's failure rates by drive size, independent of manufacturer, we see that 3 TB hard drives are the most reliable (with a 1.40% failure rate), while 5 TB hard drives are the least reliable (at a 2.22% failure rate). Organized by manufacturer, HGST, which accounts for 34% (24,545) of the total drives (71,939), claims the reliability crown with a measly 0.60% failure rate. WDC brings up the rear with an average 3.88% failure rate, while also being one of the least represented manufacturers, with only 1,626 of its HDDs in use.

Source:

Backblaze

42 Comments on Backblaze's 2016 HDD Failure Stats Revealed: HGST the Most Reliable

By size and Brand

After all, it's 2017 and spinning rust is on its way out!!! :)

People just can't get that through their thick skulls for a few years now.

And the short term:

Seagate ST4000DX000 has a scary-high failure rate at 13.57%. Good idea to avoid buying those.

The fact that only 9% of their Seagate ST1500DL003 drives are still operational is also scary. If I had one of those, I'd be getting the data off of it and disposing of it.

That said, the takeaway from Backblaze is pretty simple: of the 85,467 hard drives they purchased, 5,380 have failed. That amounts to 6.3% of drives purchased since April 2013. Failure rates have been declining from 6.39% in 2014, to 2.47% in 2015, to 1.95% in 2016. So not only are hard drives continuing to prove they're generally reliable, they're also getting more reliable with increasing densities. This is very good news! :D

A bit anecdotal, but the HGST in my home server is purring away while my Seagate just passed its warranty date... and bad bits started to appear ;)

Considering that operating temps will vary so massively from drive to drive, it basically invalidates any attempt to log the failure rates: although all the drives are being abused, many are being abused more than others, and there is no common methodology at work at all.

For example, the two drives on the list with the highest failure rates are Seagate DX drives (the newest ones on the list). If they bought a load in bulk and started using them to replace failed drives, they would be putting most of them in the areas where drive failure is most likely to occur, thus hyper-inflating their failure rates.

Another point is that if you have 1000 DriveX and 100 DriveY and break 50 of each, then the failure percentages will be vastly different even though you killed the same number of drives.
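The arithmetic behind that point is easy to check. Below is a minimal sketch using the commenter's made-up DriveX and DriveY fleets; the function name is hypothetical and is not anything Backblaze publishes.

```python
def failure_percentage(failed: int, total: int) -> float:
    """Return failed drives as a percentage of the fleet size."""
    return 100.0 * failed / total


# Hypothetical fleets from the comment: the same 50 failures in each.
drive_x = failure_percentage(50, 1000)
drive_y = failure_percentage(50, 100)

print(f"DriveX: {drive_x:.1f}% failed")
print(f"DriveY: {drive_y:.1f}% failed")
```

The same number of dead drives works out to 5% of one fleet and 50% of the other, so rates for sparsely deployed models swing much harder per failure.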

Also, some of their numbers seem very fishy to me. I didn't realize it until I read Ford's post.

He took the 85,467 drives and the 5,380 failures, and calculated a 6.3% failure rate. But Backblaze only claims a 3.36% failure rate.

So I started looking at some spot data for a few of the drives. Look at the Seagate ST1500DL003. They say they only used 51 drives, but somehow 77 failed? And that amounts to a 90% failure rate? I call BS. Besides the fact that it seems they had more drives fail than they actually had, the failure rate is wrong. And it isn't just that drive. Look at the Hitachi HDS723030ALA. They had 1,027 drives and 69 failed. That is just over a 6.7% failure rate, but they claim 1.92%. Then look at the Seagate ST8000DM002. They have 8,660 drives and 48 failures. That amounts to a failure rate of 0.55%, but they claim 1.63%.

There is something screwy with their numbers.
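The spot checks above can be reproduced with a few lines of Python. This is only a sketch: the drive and failure counts are the ones quoted in the comments, and the function names are hypothetical. The second function rests on an assumption the comments do not discuss, namely that the published figures are annualized rates computed from drive days in service rather than from drive counts. If so, the two kinds of numbers would not be expected to match, and a model could log more failures over time than it has drives in service at the end.

```python
def naive_failure_rate(failures: int, drives: int) -> float:
    """Failures as a percentage of drive count (the commenter's method)."""
    return 100.0 * failures / drives


def annualized_failure_rate(failures: int, drive_days: int) -> float:
    """Failures per drive-year of service, as a percentage.

    Assumption: drive_days is the total number of days all drives of a
    model were in service. No such figures appear in the comments above.
    """
    return 100.0 * failures / (drive_days / 365.0)


# (drives, failures) pairs exactly as quoted in the comments
spot_checks = {
    "Seagate ST1500DL003": (51, 77),
    "Hitachi HDS723030ALA": (1027, 69),
    "Seagate ST8000DM002": (8660, 48),
    "All drives since April 2013": (85467, 5380),
}

for model, (drives, failures) in spot_checks.items():
    rate = naive_failure_rate(failures, drives)
    print(f"{model}: {rate:.2f}% (failures / drives)")
```

Since no drive-day counts are quoted here, `annualized_failure_rate` is left uncalled; it only shows how a time-based rate would differ in form from a simple ratio.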