Tuesday, June 11th 2019

AMD Radeon RX 5700 XT Confirmed to Feature 64 ROPs: Architecture Brief

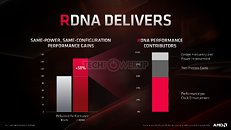

AMD "Navi 10" is a very different GPU from the "Vega 10," or indeed the "Polaris 10." It introduces the new RDNA graphics architecture, the first big graphics architecture change on an AMD GPU in nearly a decade. AMD released its Graphics Core Next (GCN) architecture in 2011, and successive generations of GPUs since then brought generational improvements to GCN, all the way up to "Vega." At the heart of RDNA is its brand-new Compute Unit (CU), which AMD redesigned to increase IPC, or single-thread performance.

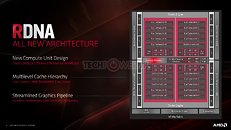

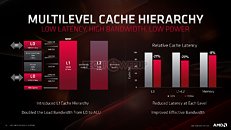

Before diving deeper, it's important to confirm two key specifications of the "Navi 10" GPU. The ROP count of the silicon is 64, double that of the "Polaris 10" silicon and the same as "Vega 10." The silicon has sixteen render backends (RBs); these are quad-pumped, which works out to 64 ROPs. AMD also confirmed that the chip has 160 TMUs. These TMUs are redesigned to feature 64-bit bilinear filtering. The Radeon RX 5700 XT maxes out the silicon, while the RX 5700 disables four RDNA CUs, working out to 144 TMUs. The ROP count on the RX 5700 is unchanged at 64.

The RDNA Compute Unit sees the bulk of AMD's innovation. Groups of two CUs make a "Dual Compute Unit" that shares a scalar data cache, a shader instruction cache, and a local data share. Each CU is now split into two SIMD units, each with 32 stream processors, its own vector register file, and its own scalar unit. This way, AMD doubled the number of scalar units on the silicon to 80, twice the CU count. Each scalar unit is similar in concept to a CPU core and is designed to handle heavy, indivisible scalar workloads. Each SIMD unit has its own scheduler. Four TMUs are part of each CU. This massive redesign of the SIMD and CU hierarchy doubles the scalar and vector instruction rates and pools resources between every two adjacent CUs.

Groups of five RDNA dual-compute units share a primitive unit, a rasterizer, 16 ROPs, and a large L1 cache. Two such groups make a Shader Engine, and the two Shader Engines meet at a centralized Graphics Command Processor that marshals workloads between the various components, a Geometry Processor, and four Asynchronous Compute Engines (ACEs).

The second major redesign "Navi" features over previous generations is the cache hierarchy. Each RDNA dual-CU has a local fast cache AMD refers to as L0 (level zero). Each 16 KB L0 unit is made up of the fastest SRAM and cushions direct transfers between the compute units and the L1 cache, bypassing the compute unit's I-cache and K-cache. The 128 KB L1 cache shared between five dual-CUs is a 16-way block of fast SRAM cushioning transfers between the shader engines and the 4 MB of L2 cache.
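The unit counts above follow from simple multiplication down the hierarchy (Shader Engine, group, dual-CU, CU, SIMD). The sketch below is a hypothetical Python model; the class and field names are ours, not AMD's, and the four-RBs-per-group split is inferred from 16 RBs spread over four groups. It reproduces the article's figures, and the 2,560 stream processors it derives for the full chip match the RX 5700 XT's published spec.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Navi10Layout:
    """Hypothetical model of the block counts described in the article (not an AMD API)."""
    shader_engines: int = 2
    groups_per_engine: int = 2   # each group: 5 dual-CUs, a rasterizer, 16 ROPs, one L1
    dual_cus_per_group: int = 5
    cus_per_dual_cu: int = 2
    simds_per_cu: int = 2
    lanes_per_simd: int = 32     # stream processors per SIMD
    tmus_per_cu: int = 4
    rbs_per_group: int = 4       # inferred: 16 RBs spread over 4 groups
    rops_per_rb: int = 4         # "quad-pumped" render backends
    disabled_cus: int = 0        # the RX 5700 disables four CUs

    @property
    def groups(self) -> int:
        return self.shader_engines * self.groups_per_engine

    @property
    def cus(self) -> int:
        full = self.groups * self.dual_cus_per_group * self.cus_per_dual_cu
        return full - self.disabled_cus

    @property
    def stream_processors(self) -> int:
        return self.cus * self.simds_per_cu * self.lanes_per_simd

    @property
    def scalar_units(self) -> int:
        # One scalar unit per SIMD, i.e. twice the CU count.
        return self.cus * self.simds_per_cu

    @property
    def tmus(self) -> int:
        return self.cus * self.tmus_per_cu

    @property
    def render_backends(self) -> int:
        return self.groups * self.rbs_per_group

    @property
    def rops(self) -> int:
        # ROPs live in the groups, not the CUs, so disabling CUs leaves them unchanged.
        return self.render_backends * self.rops_per_rb


rx_5700_xt = Navi10Layout()
rx_5700 = Navi10Layout(disabled_cus=4)
print(rx_5700_xt.cus, rx_5700_xt.tmus, rx_5700_xt.rops, rx_5700_xt.scalar_units)  # 40 160 64 80
print(rx_5700.cus, rx_5700.tmus, rx_5700.rops)  # 36 144 64
```

Modelling ROPs at the group level rather than per CU is what makes the cut-down RX 5700 keep all 64 ROPs while losing 16 TMUs.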

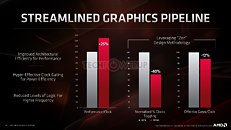

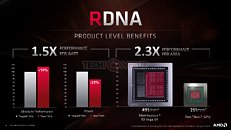
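To make "16-way" concrete, here is a toy model of a set-associative cache sized like the 128 KB L1 described above. The 128-byte line size and LRU replacement are assumptions for illustration, not figures AMD gave in this briefing. With them, 128 KB / (16 ways × 128 B) gives 64 sets, so any 16 lines that map to the same set can coexist, and a 17th evicts one of them.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy set-associative cache with LRU replacement (illustrative, not RDNA's actual policy)."""

    def __init__(self, size_bytes: int, ways: int, line_bytes: int) -> None:
        self.ways = ways
        self.line_bytes = line_bytes
        self.num_sets = size_bytes // (ways * line_bytes)
        # One ordered dict per set: keys are tags, ordered from least to most recently used.
        self.sets = [OrderedDict() for _ in range(self.num_sets)]

    def access(self, address: int) -> bool:
        """Return True on a hit, False on a miss (the line is filled on a miss)."""
        line = address // self.line_bytes
        index = line % self.num_sets
        tag = line // self.num_sets
        entries = self.sets[index]
        if tag in entries:
            entries.move_to_end(tag)       # mark as most recently used
            return True
        if len(entries) >= self.ways:
            entries.popitem(last=False)    # evict the least recently used line
        entries[tag] = None
        return False


L1_BYTES, L1_WAYS, LINE_BYTES = 128 * 1024, 16, 128  # LINE_BYTES is an assumption
l1 = SetAssociativeCache(L1_BYTES, L1_WAYS, LINE_BYTES)
stride = l1.num_sets * LINE_BYTES            # addresses this far apart share a set
same_set = [i * stride for i in range(L1_WAYS + 1)]

first_pass = [l1.access(a) for a in same_set[:L1_WAYS]]    # 16 cold misses
second_pass = [l1.access(a) for a in same_set[:L1_WAYS]]   # all 16 now hit
l1.access(same_set[L1_WAYS])                 # 17th line evicts the LRU entry (address 0)
print(l1.num_sets, all(second_pass), l1.access(0))  # 64 True False
```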

In all, RDNA helps AMD achieve a 2.3x gain in performance per area and a 1.5x gain in performance per Watt. The "Navi 10" silicon measures just 251 mm², compared to the 495 mm² "Vega 10" die. Much of this area saving is also attributable to the switch from the 14 nm silicon fabrication process to the new 7 nm process.

AMD also briefly touched on its vision for real-time ray tracing. To begin with, we can confirm that the "Navi 10" silicon has no fixed-function hardware for ray tracing, such as the RT cores or tensor cores found in NVIDIA "Turing" RTX GPUs. For now, AMD's implementation of DXR (DirectX Raytracing) relies entirely on programmable shaders. At launch, the RX 5700 series won't be advertised as supporting DXR; AMD will instead add support through driver updates. The RDNA 2 architecture, scheduled for 2020-21, will pack some fixed-function hardware for certain real-time ray-tracing effects. AMD sees a future in which real-time ray tracing is handled in the cloud. The next frontier for cloud computing is cloud-assist, where your machine can offload processing workloads to the cloud.
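Without RT cores, the per-ray work (traversing an acceleration structure and testing rays against triangles) has to run as ordinary shader code. As a rough illustration of one piece of that work, here is the widely used Möller-Trumbore ray/triangle intersection test in plain Python. It is a generic textbook algorithm chosen for illustration, not AMD's DXR implementation; a GPU would run this kind of test across many rays in parallel.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle_hit(origin: Vec3, direction: Vec3,
                     v0: Vec3, v1: Vec3, v2: Vec3,
                     eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: return distance t along the ray to the hit, or None."""
    edge1, edge2 = sub(v1, v0), sub(v2, v0)
    pvec = cross(direction, edge2)
    det = dot(edge1, pvec)
    if abs(det) < eps:                  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det       # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, edge1)
    v = dot(direction, qvec) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, qvec) * inv_det
    return t if t > eps else None       # ignore hits behind the ray origin


tri = ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
print(ray_triangle_hit((0.0, 0.0, -1.0), (0.0, 0.0, 1.0), *tri))  # 1.0
print(ray_triangle_hit((5.0, 5.0, -1.0), (0.0, 0.0, 1.0), *tri))  # None
```

Dedicated hardware like Turing's RT cores exists precisely to take tests like this, plus the traversal that decides which triangles to test, off the programmable shaders.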

38 Comments on AMD Radeon RX 5700 XT Confirmed to Feature 64 ROPs: Architecture Brief

I can't say I'm impressed enough with the price-to-performance to drop $450+ to upgrade to something newer. I'm patient (and kind of broke), so I'm in no rush to upgrade. I can wait and see what kind of things we can expect from the next gen of GPUs.

Seems counter-intuitive when the market is increasingly offering lower-latency, high-FPS, high-refresh products and current consumer devices with dedicated hardware are capable of real-time ray tracing.

I am on the ray tracing wagon, and while I do appreciate the slightly cooler shadows and more realistic lighting in the 2 games I play that support it, I really do see it as more of a developer-side feature. I just imagine it's way easier to say "light source here, shiny thing here, go" than do all of the lightmap work and try to fake it. I think the cloud RTRT might be true for consoles...

Meanwhile RTRT over the cloud? Did they write that when they were high or something? The latency will be horrible.

:laugh: (Funny, since the next Xbox and PS will have RT and they are not using Nvidia for their GPUs... well, not that I care more for consoles than I care about RT, though.)

Ah? The RX 5700 XT will be much pricier than the 2070? (Well... for me it will be... after all, my retailers think a 2080 is worth $850 base price and a 2070 about $580 on average.)

Well... I reckon it would be bad if these new Radeon cards were put at the same price level as the stupidly priced RTX line (well, if they aren't priced above it, it's half fine... a RVII is just above a 2070 for me atm, and a better alternative to me, so if the RX 5700 XT is close to that: no issues).

Should be quite correct as a guess (although I find that fashion of thinking, "the cloud is the future and everyone wants it", highly annoying... reflected in Stadia and other cloud-gaming platforms, and now in this kind of idiocy).

Costs of that would be prohibitive.

If you can't spot the difference between RT off and RT on, you should see an oculist. Since both the next Xbox and the PS5 will use Navi, they would use a minimal amount of RT, which couldn't be compared to RTX cards.

Since you don't care about consoles or RT, I don't see the point of your comment.

It's too bad that AMD keeps using smaller nodes as a way to sell us small dies instead of taking full advantage.

I guess this is what happens when the company cares more about designing for consoles than for us. At least "the console" won't be quite so much of a joke, though, once it has Zen cores instead of Jaguar (which shouldn't have made it past the drawing board at Sony or MS).

We waited so long for Polaris and then for Polaris to be replaced by the massively-hyped Vega, which had the same IPC as Fiji. Then, Radeon VII comes out with a small die. Color me underwhelmed.

I've got the impression that the green army is trolling on every blog and forum right at this moment; the war is on!

And the Jaguar core is much more powerful than you guys think; it just doesn't clock that high.

As for Jaguar, my understanding is that it had worse IPC than Piledriver.

It has also been the case that AMD doesn't compete, as with the 3870/3850: the same non-competitive performance as the previous generation. Without adequate competition (e.g. a duopoly), sure, maybe a company thinks it's in its interest to do things this way.

AMD Tech Day coverage

Anti-Lag @ 2:28

Radeon Image Sharpening BFV (DLSS v RIS) @ 4:23

FarCry 5 benchmarked 1440p & 1080p (2060 v 5700) @ 5:54

PCIe 4.0 bandwidth demo 8k video @ 9:54

FidelityFX Sharpening WWZ @ 10:24

DSC @ 11:25

Nothing against PC gaming—it offers more control and customization, but with great power comes more money and more time fiddling. I have the means to buy a gaming rig, but I don’t have the time to mess with it. Consumers are speaking though, and the developers go to where the customers are.