Friday, August 14th 2020

Micron Confirms Next-Gen NVIDIA Ampere Memory Specifications - 12 GB GDDR6X, 1 TB/s Bandwidth

Micron have spilled the beans on at least some specifications for NVIDIA's next-gen Ampere graphics cards. In a new tech brief posted by the company earlier this week, hidden away behind Micron's market outlook, strategy and positioning, lie some secrets NVIDIA might not be too keen to see divulged before their #theultimatecountdown event.

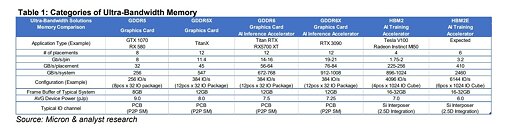

In a comparison of ultra-bandwidth solutions, under the GDDR6X column, Micron lists a next-gen NVIDIA card with the "RTX 3090" product name. According to the spec sheet, this card features a total memory capacity of 12 GB of GDDR6X, achieved through 12 memory chips on a 384-bit wide memory bus. As we saw today, only 11 of these seem to be populated on the RTX 3090. With the GDDR6X memory chips specified as capable of 19-21 Gbps speeds, total memory subsystem bandwidth lands in the 912 - 1008 GB/s range using 12 chips; 11 chips results in a minimum of 836 GB/s. It's possible the RTX 3090 product name isn't an official NVIDIA product name, but rather a Micron-guessed possibility, so don't look at it as a factual representation of an upcoming graphics card. One other interesting aspect of the tech brief is that Micron expects their GDDR6X technology to enable 16 Gb (or 2 GB) density chips with 24 Gbps bandwidth as early as 2021. You can read over the tech brief - which mentions NVIDIA by name as a development partner for GDDR6X - by following the source link and clicking on the "The Demand for Ultra-Bandwidth Solutions" document.
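For readers who want to check the bandwidth figures, here is a minimal sketch of the arithmetic. It assumes each GDDR6X chip contributes a 32-bit slice of the bus (384 bits divided across 12 chips), which follows from the numbers above but is not stated outright in the brief; the function name `memory_bandwidth_gbs` is purely illustrative.

```python
def memory_bandwidth_gbs(chips: int, gbps_per_pin: float, bits_per_chip: int = 32) -> float:
    """Peak memory bandwidth in GB/s.

    Assumption: every chip adds `bits_per_chip` bits to the bus width
    (32 bits here, i.e. 384 bits / 12 chips).
    """
    bus_width_bits = chips * bits_per_chip
    # Per-pin data rate (Gbps) times bus width gives Gbit/s; divide by 8 for GB/s.
    return bus_width_bits * gbps_per_pin / 8


if __name__ == "__main__":
    for chips in (12, 11):
        for speed in (19, 21):
            bandwidth = memory_bandwidth_gbs(chips, speed)
            print(f"{chips} chips @ {speed} Gbps: {bandwidth:.0f} GB/s")
```

With 12 chips this gives the 912 and 1008 GB/s endpoints quoted above, and with 11 chips at 19 Gbps it gives the 836 GB/s minimum.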

Source:

Micron

53 Comments on Micron Confirms Next-Gen NVIDIA Ampere Memory Specifications - 12 GB GDDR6X, 1 TB/s Bandwidth

Death rate after 6 months will say whether I'll buy anything with Micron.

I'm also interested in what Nvidia charges for the 3090, and whether it's the actual flagship or we'll see a 3090 Ti or Super down the line.

But of course people will fool themselves into "futureproofing" argument again, compounded by AMD once again loading cards unsuitable for 4K-60 with VRAM nobody needs. We're doing this thing every launch cycle!

What about VR and huge resolutions like Varjo HMDs ...

Ultra high resolution is eventually what will replace anti-aliasing as the proper way to get rid of rough edges, and it will probably be as demanding as AA or even more so.

So in the sense of moving forward properly... yeah, I think 12 GB is low. VRAM is not just about resolution. Play some GTA5 and see which features ask for more VRAM, then think about how games could push those features much further: way higher resolution textures that can all be loaded in, or loaded in faster, with less pop-in.

The reason that no games on the market benefit from more than 8 - 9 gigs is that there are currently barely any cards that have more than 8 - 9 gigs, the same reason not a single game is built from the ground up around ray tracing.

For games to use it, the hardware needs to exist, because otherwise nobody can play them and thus nobody will purchase said games.

So with an eye on better graphics etc., giving devs more room, I think going for 12 GB now is... very disappointing.

I would have hoped for honestly double that in this day and age.

And because Big N is not giving more, it does not even matter what AMD will do, because again, all Nvidia consumers would not be able to use whatever game companies could have done with all that VRAM, so they just won't, and we will all just have to wait for the next next gen.

24gb is utterly useless and too pricey. By the time more than 12gb can be used at a reasonable resolution (because less than 2% are on 4k... and I can't think of a title that can use 12gb) it might be too slow. Devs have a fair amount of headroom already, really.

Given how much the 11GB cards were/still are, I shudder to think how much they will ask for the new 12/16/24GB models.. :fear:..:respect:..:cry:

A game could be made to use more than 20 GB of VRAM while playing at 1080p; it would just use the memory capacity for other things, like I said, for example higher resolution textures and little to no pop-in.

That is kinda what is being done now with the PS5, although that relies on the SSD for it a bit more closely.

More than 12-16GB is really just wasting cash right now. It's like buying an AMD processor with 12c/24t... if gaming is only using at most 6c/12t (99.9%) more NOW?

Again, devs have headroom to play with already... they aren't even using that.