Wednesday, May 19th 2021

NVIDIA Adds DLSS Support To 9 New Games Including VR Titles

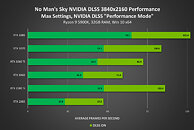

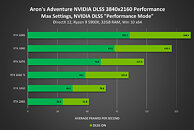

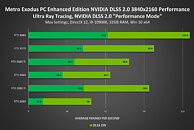

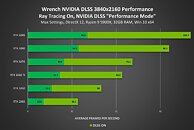

NVIDIA DLSS adoption continues at a rapid pace, with a further 9 titles adding the game-changing, performance-accelerating, AI and Tensor Core-powered GeForce RTX technology. This follows the addition of DLSS to 5 games last month, and the launch of Metro Exodus PC Enhanced Edition a fortnight ago. This month, DLSS comes to No Man's Sky, AMID EVIL, Aron's Adventure, Everspace 2, Redout: Space Assault, Scavengers, and Wrench. And for the first time, DLSS comes to Virtual Reality headsets in No Man's Sky, Into The Radius, and Wrench.

By enabling NVIDIA DLSS in each, frame rates are greatly accelerated, giving you smoother gameplay and the headroom to enable higher-quality effects and rendering resolutions, and ray tracing in AMID EVIL, Redout: Space Assault, and Wrench. For gamers, only GeForce RTX GPUs feature the Tensor Cores that power DLSS, and with DLSS now available in 50 titles and counting, GeForce RTX offers the fastest frame rates in leading triple-A games and indie darlings.

Source: NVIDIA

Complete Games List

- AMID EVIL

- Aron's Adventure

- Everspace 2

- Metro Exodus PC Enhanced Edition

- No Man's Sky

- Redout: Space Assault

- Scavengers

- Wrench

- Into The Radius VR

49 Comments on NVIDIA Adds DLSS Support To 9 New Games Including VR Titles

"By enabling NVIDIA DLSS in each, frame rates are greatly accelerated, giving you smoother gameplay and the headroom to enable higher-quality effects and rendering resolutions, and raytracing"

What they mean is: "By lowering the resolution in each, frame rates are greatly accelerated, giving you smoother gameplay and the headroom to enable higher-quality effects and rendering resolutions, and raytracing, and you won't suffer loss in quality from lowering the resolution because DLSS will make it look as good as if it was actually running at a higher resolution"

So for example, take the lowest tier card that supports DLSS: in ~3 years' time, since that's how long I usually keep my cards, that could mean the difference between playable and unplayable in new games w/o having to destroy in-game settings to potato level.

From what I saw in comparison vids I kinda like DLSS 2.0, in games like Cyberpunk it even looks better to me than native imo. 'more details displayed on vegetation'

Also seems to work pretty well in Metro Exodus Enhanced.

When or if the time finally comes for me to upgrade my GPU this might be the deciding factor unless AMD's version will be good enough and with similar support in games.

Otherwise I might go back to Nvidia just for DLSS.

seeing it in VR titles is awesome

Gracefully missing the entire point of DLSS aren't we

Going forward, when an open alternative arrives, DLSS will be joining PhysX in the realm of dead proprietary tech.

edit: the first game on the list though, kinda suggestive :laugh:

If the marketing was "we have 1080p and it looks like this, and this is what it looks like with DLSS upscaled to 4k, way nicer right? so just run the game at a lower res and use this tech", that would be one thing, but nope, they don't do that....

The point is that the marketing makes it about performance, but it's disingenuous because 4k DLSS isn't 4k.

The marketing should be about visual fidelity at the same resolution because that is what it does, but they don't do that.
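To put rough numbers on the "4k DLSS isn't 4k" point, here is a minimal Python sketch of the internal render resolution per DLSS mode. The per-axis scale factors are commonly cited figures for DLSS 2.x and are an assumption here, not something stated in the article; individual games may use different values, and `DLSS_SCALE`, `internal_resolution`, and `pixel_fraction` are illustrative names only.

```python
# Assumed per-axis render scale for each DLSS 2.x mode.
# These are commonly cited figures, not taken from the article above.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(out_w, out_h, mode):
    """Return the (width, height) the game renders at before upscaling."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(mode):
    """Return the share of output pixels that are actually rendered."""
    return DLSS_SCALE[mode] ** 2


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        w, h = internal_resolution(3840, 2160, mode)
        print(f"{mode}: {w}x{h} ({pixel_fraction(mode):.0%} of output pixels)")
```

Under these assumed factors, a 3840x2160 output in Performance mode is rendered at 1920x1080, a quarter of the output pixels, which is where most of the frame rate gain comes from.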

What I'm curious about is, is the AI hardware acceleration really necessary? It has been pointed out many times: NV claims it is necessary and that it uses the specific hardware, aka tensor cores, and yet, from what I read and watched online, this matter has never been cleared up. AMD on the other hand makes it open and says no AI hardware acceleration is needed (yet we have to see it in action). All of this seems weird.

Is NV covering up the fact that it is not needed and just using this HW AI acceleration to boost sales of the new hardware?

I remember NV saying G-sync module is a must in the monitor for any NV gpu to use it properly and yet now we have FreeSync aka G-sync compatible and NV cards work just fine.

Weird stuff. Really weird stuff.

Native support in the two most used game engines already.

Educate yourself before spreading misinformation. Try some first hand experience before you talk BS. 4K DLSS can look better than 4K native, as in sharper text, sharper textures etc. Oh, and around 75% higher performance.

The tech is great, when implementation is great. Glad to see native support in Unity and Unreal Engine.

AMD and GTX owners hate DLSS but RTX owners love it. Wonder why.

Yeah, and Intel.

You can see in screenies side by side, Quality DLSS actually has slightly sharper vegetation at mid range. But if I need to sit and play spot the difference with stills, then as far as I'm concerned that's a win for DLSS. You can easily tell when using any lower DLSS setting. It's not bad, but I didn't buy a 4K screen to play with a vaseline filter. Still better than the original version of DLSS, which made the game look like Borderlands.

I would like to see DLSS in more titles. Not sure how many it's in now, but I know the last time they announced it was coming to several titles, it basically amounted to a grand total of 1 title per month since its announcement in 2018, which isn't great! Not to mention the X2 version or whatever it was called that was supposed to bump IQ.

Also, the trailer they released for NMS is very misleading, half the trees are missing in the DLSS side!

also, nothing is "dead" about PhysX. it has become open source, it's built into popular game engines, and is being widely used on all platforms.

just search your installed games folders for physx*.dll files. most likely you'll find some.

modern games rarely utilize PhysX GPU acceleration, I'll give you that, however, its CPU performance (and CPU hardware) has come a long way, and is not inferior to competing engines in any way.
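The folder search suggested above can be sketched in a few lines of Python. This is only an illustration: `find_physx_dlls` is a made-up helper name, the match is case-insensitive on the assumption that file names may be capitalized differently from the `physx*.dll` pattern, and the folders to scan are whatever you pass on the command line.

```python
from pathlib import Path
import sys


def find_physx_dlls(root):
    """Return sorted paths under root whose file name matches physx*.dll.

    The comparison is case-insensitive (an assumption about how the
    files are named on disk).
    """
    matches = []
    for path in Path(root).rglob("*"):
        name = path.name.lower()
        if path.is_file() and name.startswith("physx") and name.endswith(".dll"):
            matches.append(path)
    return sorted(matches)


if __name__ == "__main__":
    # Pass one or more game library folders as arguments.
    for root in sys.argv[1:]:
        for dll in find_physx_dlls(root):
            print(dll)
```

Run it with your game library folder as the argument; each hit is printed with its full path, so you can see which games ship the library.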

DO NOT throw insults at each other, as it does not help the discussion.

Thank You and keep it civil.

www.rockpapershotgun.com/outriders-dlss-performance

You should stick to Quality or Balanced, and you should never use motion blur with DLSS enabled.

"DLSS Quality makes the solar panel look ten times sharper, and also makes the grate on the floor much more distinct. Everything looks sharper, too."

Another mad AMD owner :laugh: maybe FSR is out and working in 2025, I highly doubt an RX480 gets support tho :laugh:

In every single thread about DLSS, the only people who talk shit, are AMD and GTX owners. Nothing new here :roll:

I know it's hard to accept new technology when you can't use it :D

The same thing applies to pretty much every technology on RTX cards, mind. RT? Great tech. If it doesn't kill your FPS and Nvidia decides your game is the chosen one to get it.

Other than that, you can epeen all you want about haves or have nots, but it's the epitome of sad and disgusting all at the same time, that. Not the best form. Did it occur to you many potential buyers have been waiting it out because (much like myself tbh) there really wasn't much to be had at all? Turing was utter shite compared to Pascal and Ampere was available for about five minutes. And if you have a life, there's more to it than having the latest greatest, right?

Duh.

We're still talking about the same rasterized graphics, now with a few more post effects on top that require dedicated hardware to work without murdering performance altogether. Let's not fool each other.

That would have been something.