NVIDIA DLSS Arrives in 10 Additional Titles, Including the New Back 4 Blood

NVIDIA's Deep Learning Super Sampling (DLSS) technology has been one of the main selling points of GeForce RTX graphics cards. With the technology broadly adopted across many popular games, players have come to rely on this AI-powered upscaling to raise frame rates and improve overall performance. Today, the company announced that DLSS has arrived in 10 additional games, including today's release of Back 4 Blood, as well as Baldur's Gate 3, Chivalry 2, Crysis Remastered Trilogy, Rise of the Tomb Raider, Shadow of the Tomb Raider, Sword and Fairy 7, and Swords of Legends Online.

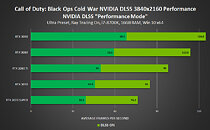

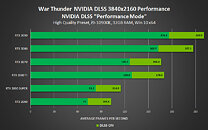

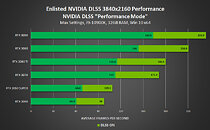

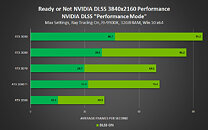

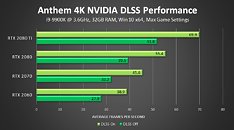

With so many titles receiving the DLSS update, NVIDIA recommends installing the latest GeForce driver to get the best possible performance in the listed games. As for how much DLSS adds, RTX GPUs see a 46% FPS boost in the newly released Back 4 Blood. Other games that received the DLSS patch show similar gains, and in titles like Alan Wake Remastered, the Tomb Raider series, and FIST, you can expect more than double the frame rate. For more information about performance at 4K resolution, please see the slides supplied by NVIDIA.