Monday, July 16th 2018

QA Consultants Determines AMD's Most Stable Graphics Drivers in the Industry

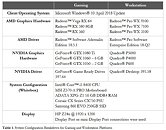

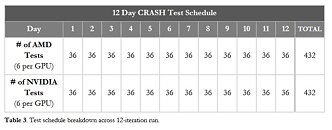

As independent third-party experts in the software quality assurance and testing industry for over 20 years, QA Consultants has conducted more than 5,000 mission-critical projects and has extensive testing experience across various industries. Based in Toronto, Ontario, QA Consultants is the largest on-shore software quality assurance company, with a 30,000 sq. ft., industry-grade facility called The Test Factory.

Commissioned by AMD, QA Consultants used Microsoft's Windows Hardware Lab Kit (HLK) to conduct a 12-day head-to-head test comparing AMD and NVIDIA graphics drivers. The test compared 12 GPUs, six from AMD and six from NVIDIA, running on 12 identical machines, all configured with the Windows 10 April 2018 Update. Gaming and professional GPUs were equally represented. After 12 days of 24-hour stress tests, the AMD products in aggregate passed 93% of the scheduled tests, while the NVIDIA products in aggregate passed 82%. Based on the results of testing the 12 GPUs, QA Consultants concludes that AMD has the most stable graphics driver in the industry.

Sources:

QA Consultants, Graphics Driver Stability Report

About QA Consultants

QA Consultants is the North American leader in software quality assurance and testing services. It has successfully delivered over 5,000 testing and consulting projects to a variety of sectors, including automotive and transportation, advertising and marketing, banking and finance, construction, media and entertainment, US and Canadian federal, state and local government, healthcare, insurance, retail, hospitality, and telecommunications.

The Test Factory is the next generation of software testing, providing a superior quality, cost and service alternative to offshore providers and external contractors. The centre can handle any testing project of any size, with any application and for any industry. With full-time employees in Toronto, Ottawa and Dallas, QA Consultants supports customers by providing testing services such as accessibility testing, agile testing, test automation, data testing, functional testing, integration testing, mobility testing, performance testing, and security testing. Along with engagement models like Managed Consulting Services and On Demand Testing, QA Consultants is equipped to handle any client's request.

124 Comments on QA Consultants Determines AMD's Most Stable Graphics Drivers in the Industry

If you really want to see who's got the better driver, you need to look at way more than 12 cards. These drivers probably support more than 100 cards each. You also (obviously) need to look at more than one driver version.

What they did is like me asking an American and a European how many hours they work a week and, based on that, concluding that either Americans or Europeans work more.

And once more, they didn't say what failed in each case. That is really, really important.

The first major problem is the sample size. In this test, all it takes is a single bad card to tip the conclusion either way. Increase the sample size, and variations between individual cards and any operator-related errors will even out. They tested a sample size of 1 per bin, which is ridiculous; 10 samples should be the minimum for any "semi-scientific" test. When they test cards of the same generation and only one of them consistently has stability issues, that clearly points to a problem with the sample. No competent person would base a conclusion about driver stability on obvious hardware issues.
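To put the "single card can tip it" point in numbers: if every card had the same number of scheduled tests (an assumption; the report's per-card schedules may differ), the aggregate pass rate is just the mean of the six per-card rates, so each card carries a sixth of the result. The rates below are hypothetical and are not the report's per-card results.

```python
def aggregate_pass_rate(per_card_rates):
    # Assumes equal scheduled-test counts per card, so the aggregate
    # is the plain mean of the per-card pass rates.
    return sum(per_card_rates) / len(per_card_rates)


# Hypothetical lineup: six cards that each pass 93% of their tests...
uniform = [0.93] * 6
# ...versus the same lineup where one bad sample only passes 27%.
one_bad = [0.93] * 5 + [0.27]

print(round(aggregate_pass_rate(uniform), 2))  # 0.93
print(round(aggregate_pass_rate(one_bad), 2))  # 0.82
```

Under these made-up numbers, one card alone is enough to move a vendor from 93% to 82%, which is the size of the gap the article reports.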

Second, they didn't provide details about why things failed. Seriously, most of the 125-page report is useless information that could have been summarized, yet it doesn't say why anything failed.

Third, the WHQL certification tests should at most be one part of a larger test suite, especially since their coverage of heavy graphics load and rendering artifacts, both very relevant to a stability test, is too limited.

Hang = The controller didn't get a response from the machine (probably a BSOD).

Fail = The client caught a failure and submitted it to the controller.

The entire test was automated which is why there isn't much in the way of details.
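The Hang/Fail distinction above can be modeled as a tiny classifier on what the controller hears back from each test machine. This is a hypothetical sketch of that logic only; `Outcome` and `classify` are made-up names, not part of the HLK or its API.

```python
from enum import Enum
from typing import Optional


class Outcome(Enum):
    PASS = "pass"
    FAIL = "fail"
    HANG = "hang"


def classify(client_report: Optional[bool]) -> Outcome:
    """Map what the controller received from a test machine to an outcome.

    Hypothetical model: client_report is None when the controller got no
    response at all, False when the client caught and submitted a failure,
    and True when the client reported success.
    """
    if client_report is None:
        return Outcome.HANG  # no response from the machine, e.g. after a BSOD
    return Outcome.PASS if client_report else Outcome.FAIL


print(classify(None).value, classify(False).value, classify(True).value)  # hang fail pass
```

Note that this model takes the client's own report at face value: a machine that keeps answering "all good" is counted as a pass.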

The paper explains the parameters of the test. This has proven to be way too difficult for people to understand. Same as Bug up their

A bunch of "I woulds" and "shoulds".

Failures are explained in the paper, which certain people seem to have a problem understanding. WHQL submissions aren't shared with the public either, yet you want these test logs to be. I'll take a wild guess: it's because you disagree with the conclusion, or you kindly want to debug them.

At this point, I believe a poll would be in order.

Question: Why do we need 5 pages to explain cherry-picking?

Answer 1: Because people on TPU are that dumb.

Answer 2: Because when given a chance to throw dirt at something, arguments take the back seat.

AMD/NVIDIA need to take these results, attach a debugger, and hammer the test on the problematic hardware to find and fix the cause. The specifics are above QA Consultants' pay grade (namely because it requires access to closed-source code).

They took one canned test, ran it on very few cards using just one driver version, and gave us the summary. If you were paid to determine the stability of a driver, is that how you would do it?

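| AMD card | Errors |
|---|---|
| RX 560 | 1 |
| RX 580 | 1 |
| Vega 64 | 2 |
| WX 3100 | 13 |
| WX 7100 | 5 |
| WX 9100 | 9 |
| **Total** | **31** |

| NVIDIA card | Errors |
|---|---|
| GTX 1050 | 3 |
| GTX 1060 | 10 |
| GTX 1080 Ti | 2 |
| P600 | 31 |
| P4000 | 12 |
| P5000 | 18 |
| **Total** | **76** |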

RX 560/RX 580 errored in 4 hours out of 288, or 1%

P600 errored in 124 hours out of 288, or 43%
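The 288 comes from 12 days of 24-hour runs per card, and the percentages are simply errored hours divided by that. A quick check of the arithmetic (the function name is illustrative):

```python
HOURS = 12 * 24  # 12 days of 24-hour stress tests = 288 hours per card


def errored_share(hours_with_errors):
    # Fraction of the card's 288 test hours in which at least one error occurred.
    return hours_with_errors / HOURS


print(f"{errored_share(4):.0%}")    # 1%
print(f"{errored_share(124):.0%}")  # 43%
```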

Every card had at least two full days with no errors, and every card had at least one error. The only card that may be suspect is the P600, but all the rest fall in line with a clear pattern of professional drivers/cards getting less driver love than gaming cards. Even if you completely omit the P600, NVIDIA still had far more errors than AMD did, despite NVIDIA then having a 3-to-2 advantage (AMD's three professional cards against NVIDIA's remaining two); hence, their conclusion didn't mince words: "...AMD has the most stable graphics driver in the industry." That statement is absolutely true given the parameters of the test.
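The "omit the P600" argument can be checked directly against the error tallies posted earlier in the thread (a commenter's counts, not figures taken straight from the report). This sketch sums them per vendor, optionally dropping cards, and also normalizes per card and per professional card, since dropping the P600 leaves the vendors with different numbers of cards. The `summarize` helper is hypothetical.

```python
# Error counts per card, as tallied by a commenter in this thread.
ERRORS = {
    "AMD": {"RX 560": 1, "RX 580": 1, "Vega 64": 2,
            "WX 3100": 13, "WX 7100": 5, "WX 9100": 9},
    "NVIDIA": {"GTX 1050": 3, "GTX 1060": 10, "GTX 1080 Ti": 2,
               "P600": 31, "P4000": 12, "P5000": 18},
}

# Radeon Pro WX and Quadro P-series cards are the professional half of each lineup.
PROFESSIONAL = {"WX 3100", "WX 7100", "WX 9100", "P600", "P4000", "P5000"}


def summarize(vendor, exclude=frozenset()):
    """Total errors, errors per card, and errors per professional card,
    optionally leaving some cards out of the comparison."""
    cards = {c: n for c, n in ERRORS[vendor].items() if c not in exclude}
    pro = [n for c, n in cards.items() if c in PROFESSIONAL]
    return {
        "total": sum(cards.values()),
        "per_card": sum(cards.values()) / len(cards),
        "pro_cards": len(pro),
        "per_pro_card": sum(pro) / len(pro),
    }


amd = summarize("AMD")
nvidia = summarize("NVIDIA", exclude={"P600"})
print(amd["total"], nvidia["total"])  # 31 45
```

With the P600 dropped, NVIDIA's five cards still log 45 errors (9.0 per card) against AMD's 31 over six cards (about 5.2 per card), and NVIDIA's two remaining professional cards average 15.0 errors each versus 9.0 for AMD's three.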

Now, I know better than to judge a company based on disgruntled (former) employees' testimonies. But that's all a Google search brings up.

Now, I'm outta here. You and Ford are either being stubborn or plain dumb. And I don't care to fix either.

I like how you bring up that you're not going to judge them by it, but that's what you just did.

K, but can you leave the ball when you go?

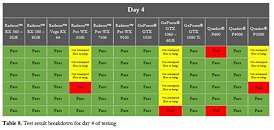

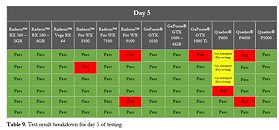

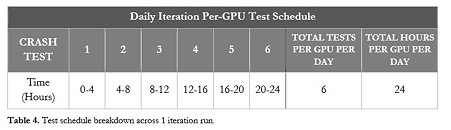

For example, look at this table:

With this methodology, it matters when the driver hangs. It is much worse when it hangs at the beginning of the day (like the GTX 1060 does in this image) and better if it happens later (like the WX 3100 or P5000 did that day).
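Why the timing of a hang matters can be shown with a simplified model of one day's schedule. The assumptions here are mine, not the report's: tests run in a fixed order, everything before the hang passes, and once the driver hangs nothing else runs that day. The 10-test day is a made-up figure.

```python
from typing import Optional, Tuple


def day_outcome(scheduled: int, hang_at: Optional[int] = None) -> Tuple[int, int, int]:
    """Return (passed, hung, not_run) for one day's test schedule.

    Simplified model: tests run in order, every test before the hang passes,
    the test at position hang_at (1-based) is recorded as a hang, and nothing
    else runs that day.
    """
    if hang_at is None:
        return scheduled, 0, 0
    if not 1 <= hang_at <= scheduled:
        raise ValueError("hang_at must fall within the day's schedule")
    return hang_at - 1, 1, scheduled - hang_at


# Hypothetical 10-test day: the same single hang costs very different amounts.
print(day_outcome(10, hang_at=2))   # (1, 1, 8)
print(day_outcome(10, hang_at=10))  # (9, 1, 0)
```

In both cases the driver hung exactly once, but an early hang leaves eight tests unrun while a late one costs nothing extra, which is the luck factor described above.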

Going over all the tables, there does not seem to be a pattern for the hangs, just pure luck.

Judging from the result tables:

AMD: 4 hangs in the middle of the day, resulting in 10 tests not run + 5 fails at the end of the day (that were potentially hangs)

Nvidia: 11 hangs in the middle of the day, resulting in 40 tests not run + 6 fails at the end of the day (that were potentially hangs)

The number of yellow boxes is what irks me about their methodology.

My experience so far (on Windows 7 and 10) has been that automatic driver restart is far from reliable. Also, Nvidia drivers seem to be far more responsive to a manually forced driver restart than AMD drivers.

Edit:

I mean, theoretically they could also have scheduled the entire 12-day run in one go and seen how far each card got. This would have eliminated the following cards long before the end. For curiosity's sake, I went over the results again; specifically, these cards would have dropped out after the following number of successful test runs:

P5000 - 2

P600 - 6

WX 9100 - 9

GTX 1060 - 17

WX 3100 - 19

P4000 - 36

:laugh:
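The "run it all in one go" idea boils down to counting consecutive passes from the start until the first fail or hang. A minimal sketch, assuming each card's results are available as an ordered list of outcome strings; the function name and the sample sequence are hypothetical, not data from the report.

```python
from itertools import takewhile


def runs_before_first_failure(outcomes):
    """Count consecutive passes from the start of the run; the first
    fail or hang would end a single uninterrupted 12-day schedule."""
    return sum(1 for _ in takewhile(lambda o: o == "pass", outcomes))


# Hypothetical outcome sequence for one card.
print(runs_before_first_failure(["pass", "pass", "hang", "pass"]))  # 2
```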

You got it backwards: the days run top to bottom, not bottom to top. Hang -> hang -> hang -> fail. The first hang should have been a fail, and there should have been no subsequent fails after that; but no, the driver kept reporting that all was well when it wasn't:

Thanks for correcting me.