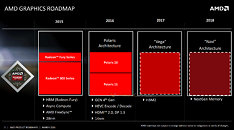

AMD has finalized its GPU architecture roadmap for 2016 through 2018, which the company first detailed at its Capsaicin event in mid-March 2016. According to the roadmap, the upcoming "Polaris" architecture makes major leaps over the current generation, such as a 2.5-times performance/Watt uplift, and drives the company's first 14 nanometer GPUs, but its presence in the high-end graphics space will be limited. Polaris is rumored to drive graphics for Sony's upcoming 4K Ultra HD PlayStation, and as discrete GPUs, it will feature in only two chips: Polaris 10 "Ellesmere" and Polaris 11 "Baffin."

"Polaris" introduces several new features, such as hardware-accelerated HEVC (H.265) decode and encode, and new display output standards such as DisplayPort 1.3 and HDMI 2.0. However, since neither Polaris 10 nor Polaris 11 is really a "big" enthusiast chip that succeeds the current "Fiji" silicon, both will likely make do with current GDDR5/GDDR5X memory standards. That's not to say that Polaris 10 won't disrupt current performance-through-enthusiast lineups, or even have the chops to take on NVIDIA's GP104. First-generation HBM limits the total memory amount to 4 GB over a 4096-bit path. Enthusiasts will have to wait until early 2017 for the big chip that succeeds "Fiji," which will not only leverage HBM2 to serve up vast amounts of super-fast memory, but also feature a slight architectural uplift. 2018 will see the introduction of its successor, codenamed "Navi," which features an even faster memory interface.
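For readers wondering where the 4 GB and 4096-bit figures for first-generation HBM come from, here is a rough back-of-the-envelope sketch. The per-stack numbers (four stacks, 1 GB and a 1024-bit interface each, roughly 1 Gbps per pin) are assumptions based on the "Fiji" configuration and are not stated in the post; the function name `hbm_totals` is made up for illustration.

```python
# Back-of-the-envelope totals for a first-generation HBM setup.
# All defaults are ASSUMED "Fiji"-style figures, not taken from the post.
STACKS = 4                 # HBM stacks on the package (assumed)
GB_PER_STACK = 1           # capacity per stack in GB (assumed)
BUS_BITS_PER_STACK = 1024  # interface width per stack in bits (assumed)
GBPS_PER_PIN = 1.0         # data rate per pin in Gbit/s (assumed)


def hbm_totals(stacks=STACKS, gb_per_stack=GB_PER_STACK,
               bus_bits=BUS_BITS_PER_STACK, gbps_per_pin=GBPS_PER_PIN):
    """Return (capacity in GB, bus width in bits, bandwidth in GB/s)."""
    capacity_gb = stacks * gb_per_stack
    bus_width_bits = stacks * bus_bits
    # bits per second across the whole bus, divided by 8 to get bytes
    bandwidth_gb_s = bus_width_bits * gbps_per_pin / 8
    return capacity_gb, bus_width_bits, bandwidth_gb_s


capacity, width, bandwidth = hbm_totals()
print(f"{capacity} GB over a {width}-bit path, about {bandwidth:.0f} GB/s")
```

Under these assumptions the capacity ceiling comes from the stack count and per-stack density rather than from the bus width, which is why the big chip has to wait for HBM2's denser stacks.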

View at TechPowerUp Main Site
