
Deleted member 185088

Guest

> Yeah but the difference is slight in consoles, but here we are talking of more than 40% increase on the official MSRP.

Console MSRPs are not quite the same across regions either. For example, the recently announced price of the Xbox Series X is $499 / €499 / £449 / ¥49,980. Compared to the US price, the Japanese price is reduced because Japan is a difficult market for Xbox, while the EU/GB prices reflect included taxes.

There are a number of reasons to vary MSRP across regions or countries: taxes are a big one, and market positioning is another.
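To compare a tax-inclusive European shelf price with a US MSRP (which is quoted before sales tax), the VAT has to be backed out first. A minimal sketch of that arithmetic, assuming the UK standard VAT rate of 20% (EU VAT rates vary by member state); the function names and the 1,400 vs 1,000 figures in the second example are hypothetical, and any currency conversion is left to the caller:

```python
def price_ex_vat(gross: float, vat_rate: float) -> float:
    """Back out VAT from a VAT-inclusive shelf price (vat_rate as a fraction, e.g. 0.20)."""
    return gross / (1.0 + vat_rate)


def percent_over(price: float, reference: float) -> float:
    """Percentage by which `price` exceeds `reference`; both must be in the same currency."""
    return (price - reference) / reference * 100.0


# UK Xbox Series X price from the post, assuming the 20% UK standard VAT rate.
uk_net = price_ex_vat(449.0, 0.20)
print(round(uk_net, 2))  # → 374.17

# Hypothetical street price of 1,400 against a 1,000 MSRP, same currency.
print(round(percent_over(1400.0, 1000.0), 1))  # → 40.0
```

Only after both prices are tax-exclusive and in one currency does a "40% over MSRP" comparison say anything about the retailer rather than the tax regime.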

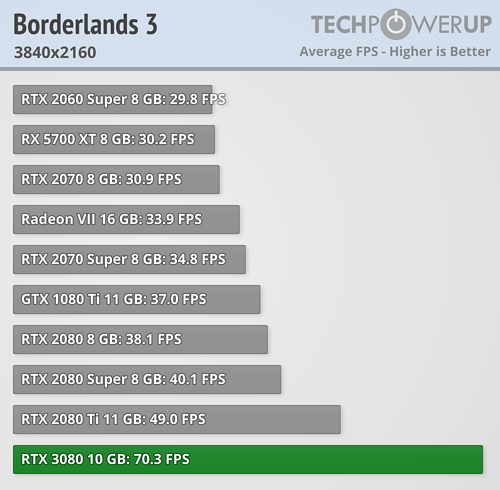

15-31%. The RTX 3080 is considerably more CPU-limited at 1440p.