Comparing consoles to PCs is like comparing apples and pears. For one: consoles use software totally differently than PCs, and they often come with locked resolutions and frame rates, etc. For two: no console's performance has ever risen above that of a mid-range PC from the same era, and I don't think it's ever gonna change (mostly due to price and size/cooling restrictions). Devs tweak game settings to accommodate the hardware specifications of consoles, whereas on PC you have the freedom to use whatever settings you like.

Example: playing The Witcher 3 on an Xbox One with washed out textures at 900p is not the same as playing it on a similarly aged high-end PC with ultra settings at 1080p or 1440p.
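For a rough sense of scale, here's a small Python sketch comparing per-frame pixel counts. It assumes the labels mean the usual 16:9 sizes (1600x900, 1920x1080, 2560x1440), and the helper names are just for illustration:

```python
# Assumed 16:9 frame sizes for the resolution labels used above.
RESOLUTIONS = {
    "900p": (1600, 900),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
}


def pixel_count(name: str) -> int:
    """Number of pixels rendered per frame at the given resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def relative_load(name: str, baseline: str = "900p") -> float:
    """How many times more pixels `name` renders than `baseline`."""
    return pixel_count(name) / pixel_count(baseline)


if __name__ == "__main__":
    for res in RESOLUTIONS:
        print(f"{res}: {pixel_count(res):,} pixels, {relative_load(res):.2f}x 900p")
```

Under those assumptions, 1080p pushes about 1.44x and 1440p about 2.56x the pixels of 900p, and that's before texture quality even enters the picture.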

I wasn't the one who started the console-to-PC comparisons here; I was just responding. Besides, comparing the upcoming consoles to previous generations is ... tricky. Not only are they architecturally more similar to PCs than ever (true, the current generation is also x86 + AMD GPU, but nobody ever gamed on a Jaguar CPU), but unlike last time, both console vendors are investing significantly in hardware: the PS4 at launch performed about the same as a lower midrange dGPU, at the time a ~$150 product, while the upcoming consoles will likely perform similarly to the 2070S (and probably the 3060), a $500 product. No previous console's performance has risen above a mid-range PC from the same era, but the upcoming ones are definitely trying to change that. Besides that, did I ever say that playing a game on a console at one resolution was the same as playing the same game on a PC at a different one? Nice straw man you've got there. Stop putting words in my mouth. The point of making console comparisons this time around is that there has never been a closer match between consoles and PCs in terms of actual performance than what we will have this November.

There you have it. Who decided PC dGPUs are developed for a 3-year life cycle? It certainly wasn't us. They last double that time without any hiccups whatsoever, and even then hold resale value, especially the high end. I'll take 4-5 years if you don't mind. The 1080 I'm running now makes it to 4-5 just fine, and then some. The 780 Ti I had before did similarly, and both still had life left in them.

Two GPU upgrades per console gen is utterly ridiculous and unnecessary, since we all know the real jumps happen with real console gen updates.

Well, don't blame me, I didn't make GPU vendors think like this. Heck, I'm still happily using my Fury X (it's starting to struggle enough at 1440p that it's time to retire it, but I'm very happy with its five-year lifespan). I would say this stems from being in a highly competitive market that has historically seen dramatic shifts in performance over relatively short time spans, combined with relatively open software ecosystems (the latter of which consoles have avoided, thus sidestepping the short-term one-upmanship of the GPU market). That makes software a constantly moving target, and thus we get the chicken-and-egg race of more demanding games requiring faster GPUs, which allow for even more demanding games requiring even faster GPUs, and so on. Of course, as the industry matures those time spans are growing, and given slowdowns in both architectural improvements and manufacturing nodes, any high-end GPU from today is likely to remain relevant for at least five years, though likely longer, and at a far higher quality of life for users than previous ones.

That being said, future-proofing by adding more VRAM is a poor solution. Sure, the GPU needs to have

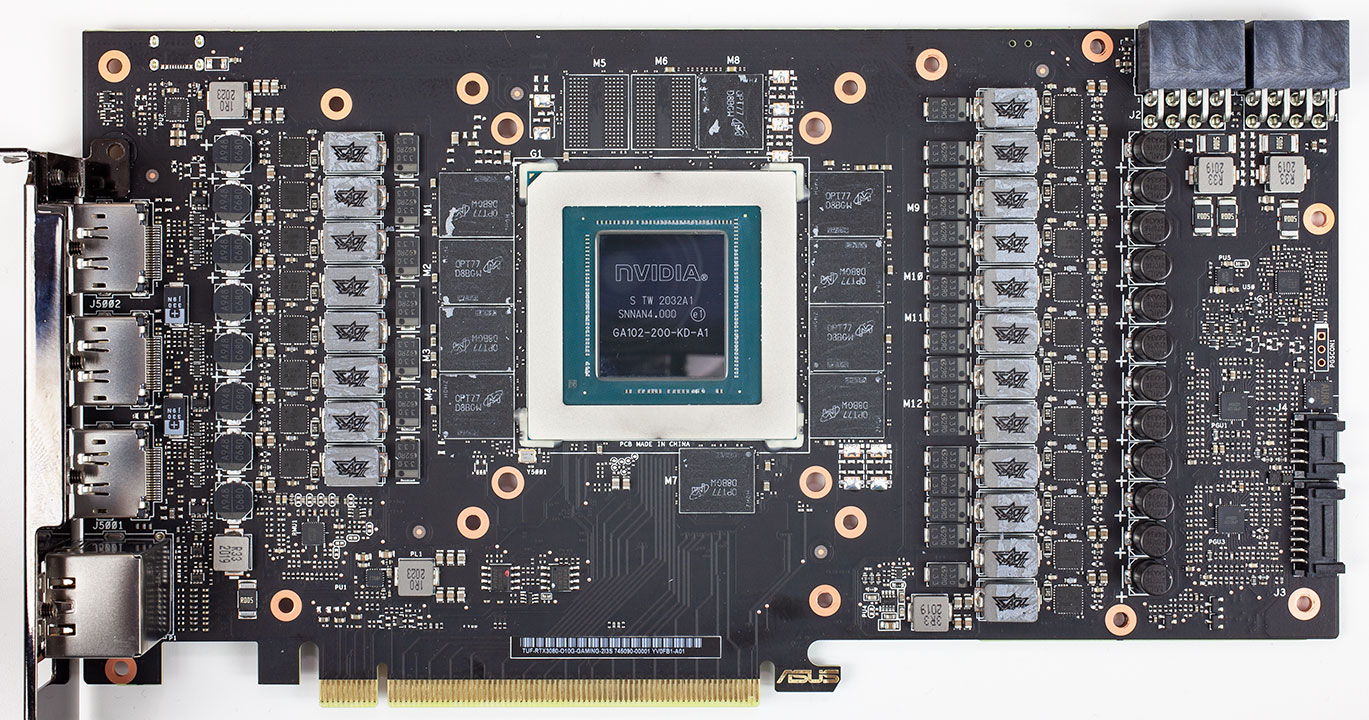

enough VRAM, there is no question about that. It needs an amount of VRAM and a bandwidth that both complement the computational power of the GPU, otherwise it will quickly become bottlenecked. The issue is that VRAM density and bandwidth both scale (very!) poorly with time - heck, base DRAM die clocks haven't really moved for a decade or more, with only more advanced ways of packing more data into the same signal increasing transfer speeds. But adding more RAM is very expensive too - heck, the huge framebuffers of current GPUs are a lot of the reason for high end GPUs today being $700-1500 rather than the $200-400 of a decade ago - die density has just barely moved, bandwidth is still an issue, requiring more complex and expensive PCBs, etc. I would be quite surprised if the BOM cost of the VRAM on the 3080 is below $200. Which then begs the question: would it really be worth it to pay $1000 for a 3080 20GB rather than $700 for a 3080 10GB, when there would be no perceptible performance difference for the vast majority of its useful lifetime?
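As for why the step is 10GB straight to 20GB and nothing in between: GDDR capacity is tied to bus width. Here's a simplified Python sketch, assuming each memory chip sits on its own 32-bit slice of the bus and that clamshell mode can put two chips on each slice. `capacity_options` is a hypothetical helper, and it ignores mixed-density layouts:

```python
from itertools import product


def capacity_options(bus_width_bits: int, densities_gbit: list[int]) -> list[float]:
    """Possible VRAM capacities (GB) for a GPU with a fixed memory bus.

    Simplified model: one GDDR chip per 32-bit slice of the bus, or two per
    slice in clamshell mode. Mixed-density layouts are ignored.
    """
    if bus_width_bits <= 0 or bus_width_bits % 32:
        raise ValueError("bus width must be a positive multiple of 32 bits")
    slices = bus_width_bits // 32
    options = set()
    for density, chips_per_slice in product(densities_gbit, (1, 2)):
        # density is per chip in gigabits; divide by 8 for gigabytes
        options.add(slices * chips_per_slice * density / 8)
    return sorted(options)


if __name__ == "__main__":
    print(capacity_options(320, [8]))     # 320-bit bus, 8Gb chips only
    print(capacity_options(192, [4, 8]))  # 192-bit bus, 4Gb or 8Gb chips
```

Assuming only 8Gb (1GB) chips are on the table, `capacity_options(320, [8])` returns `[10.0, 20.0]` for a 320-bit card like the 3080, and `capacity_options(192, [4, 8])` returns `[3.0, 6.0, 12.0]`, which covers the 1060's 3GB/6GB split on its 192-bit bus.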

The last question is particularly relevant when you start to look at VRAM usage scaling and compare the memory sizes in question to previous generations where buying the highest-VRAM SKU turned out to be smart. Remember, capacity scaling on the same bus width is either 1x or 2x (or potentially 4x, I guess), so, as we're discussing here, the only possible step up is to 20GB - which brings with it a very significant cost increase. The base SKU has 10GB, which is among the highest memory counts of any consumer-facing GPU to date. Even if it's comparable to the likely GPU-allocated amount of RAM on upcoming consoles, it's still a very decent chunk. On the other hand, previous GPUs sold in multiple memory capacities started out much lower - 3/6GB for the 1060, 4/8GB for a whole host of others, and 2/4GB for quite a few if you look far enough back. The thing here is: while the percentage increases are always the same, the absolute amount of VRAM now is massively higher than in those cases - the baseline we're currently talking about is higher than the high-end option in those earlier comparisons.

What does that mean? For one, you're already operating at such a high level of performance that there's a lot of leeway for tuning and optimization. If a game requires 1GB more VRAM than what's available, lowering settings to fit within a 10GB framebuffer will be trivial. Doing the same on a 3GB card? Pretty much impossible. A 2GB reduction in VRAM needs is likely more easily achieved on a 10GB framebuffer than a 0.5GB reduction on a 3GB framebuffer. After all, there is a baseline requirement that is necessary for the game to run, onto which additional quality options add more. Raising the ceiling for maximum VRAM doesn't so much shift the baseline requirement upwards (though that too creeps upwards over time) as expand the range of possible working configurations. Sure, 2GB is largely insufficient for 1080p today, but 3GB is still fine, and 4GB is plenty (at settings where GPUs with these amounts of VRAM would actually be able to deliver playable framerates). So you previously had a scale from, say, 0.5-4GB, then 2-8GB, and in the future maybe 4-12GB. Again, looking at percentages is misleading, as it takes a lot of work to fill those last few GB. And the higher you go, the easier it is to ease off on a setting or two without perceptibly losing quality. In other words, your experience won't change at all, except that the game will (likely automatically) lower a couple of settings a single notch.
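The baseline-plus-extras argument can be put into numbers. This is a simplified model, not how any particular engine budgets memory: total use is a baseline needed to run at all plus extras from quality settings, and only the extras can be trimmed. The baselines below are made-up illustrative values loosely picked from the ranges above:

```python
def share_of_extras_to_cut(
    required_gb: float, capacity_gb: float, baseline_gb: float
) -> float:
    """Fraction of the optional (above-baseline) VRAM a game must give up.

    Simplified model: required = baseline (needed to run at all)
    + extras (added by quality settings). Only extras can be trimmed.
    """
    if baseline_gb > capacity_gb:
        raise ValueError("card can't even fit the baseline requirement")
    overflow = max(0.0, required_gb - capacity_gb)  # how much must go
    extras = required_gb - baseline_gb  # how much is optional
    return overflow / extras if extras > 0 else 0.0


if __name__ == "__main__":
    # Hypothetical baselines: ~4GB for a future game on a 10GB card,
    # ~2.5GB for a current 1080p game on a 3GB card.
    print(share_of_extras_to_cut(required_gb=12, capacity_gb=10, baseline_gb=4))    # 0.25
    print(share_of_extras_to_cut(required_gb=3.5, capacity_gb=3, baseline_gb=2.5))  # 0.5
```

With those hypothetical baselines, trimming 2GB on the 10GB card costs a quarter of the optional budget, while trimming 0.5GB on the 3GB card costs half of it.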

Of course, in the time it will take for 10GB to become a real limitation at 4K - I would say at minimum three years - the 3080 will likely not have the shader performance to keep up anyhow, making the entire question moot. Lowering settings will thus become a necessity no matter the VRAM amount.
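Here's a rough idea of what "lowering a couple of settings a single notch" could look like when a game does it automatically. This is a hypothetical greedy approach, not any real game's logic: start at max, then repeatedly drop whichever setting's next notch down frees the most VRAM until everything fits. The setting names, per-notch costs and baseline are all invented:

```python
from typing import Optional

# Hypothetical per-setting VRAM costs in GB, ordered lowest -> highest notch.
SETTINGS = {
    "textures": [1.0, 2.5, 3.0, 4.0],
    "shadows": [0.3, 0.6, 1.2],
    "volumetrics": [0.2, 0.6, 1.4],
}
BASELINE_GB = 4.0  # hypothetical VRAM needed just to run the game


def fit_to_budget(
    settings: dict[str, list[float]], baseline_gb: float, budget_gb: float
) -> Optional[dict[str, int]]:
    """Greedily lower settings one notch at a time until VRAM use fits.

    Returns the chosen notch index per setting, or None if even the
    lowest settings exceed the budget.
    """
    levels = {name: len(costs) - 1 for name, costs in settings.items()}

    def total() -> float:
        return baseline_gb + sum(settings[n][lvl] for n, lvl in levels.items())

    while total() > budget_gb:
        # VRAM freed by dropping each setting one notch (if it can drop).
        savings = [
            (settings[n][lvl] - settings[n][lvl - 1], n)
            for n, lvl in levels.items()
            if lvl > 0
        ]
        if not savings:
            return None  # everything already at minimum, still over budget
        _, name = max(savings)
        levels[name] -= 1
    return levels


if __name__ == "__main__":
    print(fit_to_budget(SETTINGS, BASELINE_GB, 9.5))
```

With the invented numbers and a 9.5GB budget, the loop drops textures one notch, finds it's still over budget, then drops volumetrics one notch and stops, leaving shadows at max.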

So, what will you then be paying for with a 3080 20GB? Likely 8GB of VRAM that will never see practical use (again, it will more than likely have stuff allocated to it, but it won't be used in gameplay), and the luxury of keeping a couple of settings pegged to the max rather than lowering them imperceptibly. That might be worth it to you, but it certainly isn't for me. In fact, I'd say it's a complete waste of money.