
Welcome to TechPowerUp Forums, Guest! Please check out our forum guidelines for info related to our community.


ASUS GeForce RTX 3090 STRIX OC

- Thread starter W1zzard


That's the problem. In modern games, especially BRs with large open environments and a lot of structures, the FPS drops (fluctuates) well below 240fps even at minimum graphics settings at 1080p. Forget 360fps and fully utilizing 360Hz, even on a 3090.

If you trick out RTX at native 1080p with shadows, reflections, and global illumination, I wouldn't be surprised if this card could only handle it at 60fps. Mind you, that's still just 1 bounce per pixel, with denoising to smooth out light gaps. DLSS isn't free either: at the very least it costs time for training to ensure no artifacts for a given game, and I'm sure NVIDIA charges for this training. Full-fidelity path-traced graphics aren't as cheap as they've been made out to be.
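For a rough sense of scale of that ray-tracing workload, here is a small back-of-the-envelope sketch that counts rays per second. It assumes, hypothetically, exactly one ray per pixel for each of the three effects mentioned and ignores denoising, BVH traversal cost and DLSS entirely; it is plain arithmetic, not a model of how RTX actually schedules work.

```python
def rays_per_second(width: int, height: int, fps: int, rays_per_pixel: int) -> int:
    """Rays that must be traced every second for a given resolution and frame rate."""
    return width * height * fps * rays_per_pixel


if __name__ == "__main__":
    # Assumption: one ray per pixel for each effect (shadows, reflections, GI).
    effects = ["shadows", "reflections", "global illumination"]
    total = rays_per_second(1920, 1080, 60, rays_per_pixel=len(effects))
    print(f"1080p @ 60 fps, {len(effects)} rays/pixel: {total:,} rays/s")
```

That works out to roughly 373 million rays per second for those three effects alone, before any extra bounces or denoising work.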

For those who want 1080p at 360Hz, isn't it better to just lower the details on a mid-range, high-frequency GPU, since they only care about the competitive advantage? Having RTX and all sorts of other details in the scene just makes it hard to discern the opponent anyway.

However, 360Hz obviously still reduces input lag even at lower FPS thanks to its faster scanout, which is why I'm still buying one.
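The refresh-rate argument comes down to simple frame-time arithmetic. This sketch converts refresh rates and frame rates into milliseconds. Treating one refresh interval as the scanout time is an approximation (it ignores blanking intervals), and the 200 fps value is just an example figure.

```python
def interval_ms(rate_hz: float) -> float:
    """Time between refreshes (or frames) in milliseconds for a given rate."""
    return 1000.0 / rate_hz


if __name__ == "__main__":
    for hz in (144, 240, 360):
        # Approximation: one full scanout takes about one refresh interval.
        print(f"{hz} Hz: new refresh every {interval_ms(hz):.2f} ms")
    # Example: a game averaging 200 fps delivers a frame every 5 ms,
    # i.e. on a 360 Hz panel a new frame arrives only every ~1.8 refreshes.
    print(f"200 fps: new frame every {interval_ms(200):.2f} ms")
    print(f"refreshes per frame at 200 fps on 360 Hz: {360 / 200:.1f}")
```

The numbers show how far a ~200 fps game is from feeding every 360 Hz refresh, even though each refresh on the faster panel still completes sooner.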


- Joined

- Oct 19, 2007

- Messages

- 8,269 (1.31/day)

| Processor | Intel i9 9900K @5GHz w/ Corsair H150i Pro CPU AiO w/Corsair HD120 RGB fan |
|---|---|
| Motherboard | Asus Z390 Maximus XI Code |
| Cooling | 6x120mm Corsair HD120 RGB fans |
| Memory | Corsair Vengeance RGB 2x8GB 3600MHz |
| Video Card(s) | Asus RTX 3080Ti STRIX OC |
| Storage | Samsung 970 EVO Plus 500GB, 970 EVO 1TB, Samsung 850 EVO 1TB SSD, 10TB Synology DS1621+ RAID5 |
| Display(s) | Corsair Xeneon 32" 32UHD144 4K |
| Case | Corsair 570x RGB Tempered Glass |
| Audio Device(s) | Onboard / Corsair Virtuoso XT Wireless RGB |
| Power Supply | Corsair HX850w Platinum Series |
| Mouse | Logitech G604s |
| Keyboard | Corsair K70 Rapidfire |
| Software | Windows 11 x64 Professional |
| Benchmark Scores | Firestrike - 23520 Heaven - 3670 |

I would absolutely love to get my hands on this card, but with it being $1800, it's going to be really hard for me to nab it up. Might have to wait for the possible 3080 20GB (Ti?) to come out and see the performance.


> We also did a test run with the power limit maximized to the 480 W setting that ASUS provides. 480 W is much higher than anything else availalable on any other RTX 3090, so I wondered how much more performance can we get. At 4K resolution it's another 2%, which isn't that much, but it depends on the game, too. Only games that hit the power limit very early due to their rendering design can benefit from the addded power headroom.

Got a typo there in the conclusion.

Also, I'd love to see WoW added back into reviews.

- Joined

- Aug 9, 2006

- Messages

- 1,065 (0.16/day)

| System Name | [Primary Workstation] |
|---|---|
| Processor | Intel Core i7-920 Bloomfield @ 3.8GHz/4.55GHz [24-7/Bench] |
| Motherboard | EVGA X58 E758-A1 [Tweaked right!] |
| Cooling | Cooler Master V8 [stock fan + two 133CFM ULTRA KAZE fans] |
| Memory | 12GB [Kingston HyperX] |
| Video Card(s) | constantly upgrading/downgrading [prefer nVidia] |
| Storage | constantly upgrading/downgrading [prefer Hitachi/Samsung] |
| Display(s) | Triple LCD [40 inch primary + 32 & 28 inch auxiliary displays] |
| Case | Cooler Master Cosmos 1000 [Mesh Mod, CFM Overload] |
| Audio Device(s) | ASUS Xonar D1 + onboard Realtek ALC889A [Logitech Z-5300 Spk., Niko 650-HP 5.1 Hp., X-Bass Hp.] |
| Power Supply | Corsair TX950W [aka Reactor] |
| Software | This and that... [All software 100% legit and paid for, 0% pirated] |
| Benchmark Scores | Ridiculously good scores!!! |

Ouuufffff! Might be worth getting just to see what it does to legacy benchmarks like 3DMark 01 and 03, combined with, say, a 10900K in the 5.3-5.4GHz range on an AIO. A non-exotic way to grab some HWbot records.

Oh yeah, new games, and all that other stuff in the review. Awesome tech! I guess... Not gonna lie, I skipped all those game tests since I literally do NOT own any of the new stuff. (Does No Man's Sky count, circa 2016?)

But yeah, crazy fillrate! A definitive HWbot record taker as far as the benchmarking and overclocking community is concerned. If you are buying this to play Fortnite/PUBG or something, a $60 eBay RX580 8GB can do that, and has been able to for about 2-3 years now.


- Joined

- Dec 31, 2009

- Messages

- 19,375 (3.52/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |
|---|---|

> I doubt Apex Legends is the only MP game that has large FPS fluctuations @1080p even at medium to low settings, and upcoming games will generally be more demanding.
>
> Not to mention 360Hz will only become more common.

All games have significant fluctuations. MP can be worse... but that isn't really dependent on the GPU. That's the nature of MP gaming, man.

360Hz will become more common, indeed. By that time, the next gen cards will be out. Like 4K, any decent 360 Hz monitor is quite pricey and overkill for anyone who doesn't have F U money or plays competitively where that stuff matters.

You're fighting for the <1%.

- Joined

- Mar 10, 2010

- Messages

- 11,880 (2.19/day)

- Location

- Manchester uk

| System Name | RyzenGtEvo/ Asus strix scar II |
|---|---|
| Processor | Amd R5 5900X/ Intel 8750H |
| Motherboard | Crosshair hero8 impact/Asus |
| Cooling | 360EK extreme rad+ 360 EK slim all push, cpu ek suprim Gpu full cover all EK |
| Memory | Gskill Trident Z 3900cas18 32Gb in four sticks./16Gb/16GB |
| Video Card(s) | Asus tuf RX7900XT /Rtx 2060 |
| Storage | Silicon power 2TB nvme/8Tb external/1Tb samsung Evo nvme 2Tb sata ssd/1Tb nvme |
| Display(s) | Samsung UAE28"850R 4k freesync.dell shiter |
| Case | Lianli 011 dynamic/strix scar2 |
| Audio Device(s) | Xfi creative 7.1 on board ,Yamaha dts av setup, corsair void pro headset |
| Power Supply | corsair 1200Hxi/Asus stock |
| Mouse | Roccat Kova/ Logitech G wireless |
| Keyboard | Roccat Aimo 120 |
| VR HMD | Oculus rift |
| Software | Win 10 Pro |
| Benchmark Scores | laptop Timespy 6506 |

It really depends on the use case where a Titan would benefit.

Everyone, Nvidia included, is saying it's an 8K gaming card. I agree it will be useful to some, but it isn't a Titan.

Surely you agree with the below. I don't mind greedy cards, but that's a lot of heat thrown into any room.

480W, it is insane.

I can get close to that with effort for benching (not doing this anymore, though), but I think it's a tad high for 24/7.
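To put the heat concern into numbers: essentially all of the electrical power a graphics card draws ends up as heat in the room. This sketch converts watts to BTU/h (1 W ≈ 3.412 BTU/h) and to energy used over a session. The 480 W figure is the maximum power-limit setting ASUS provides, not a measured average draw, and the 4-hour session is a made-up example.

```python
WATTS_TO_BTU_PER_HOUR = 3.412141633  # 1 W of continuous power ≈ 3.412 BTU/h of heat


def heat_btu_per_hour(watts: float) -> float:
    """Heat released into the room, assuming all electrical power becomes heat."""
    return watts * WATTS_TO_BTU_PER_HOUR


def energy_kwh(watts: float, hours: float) -> float:
    """Energy consumed at a constant draw over the given number of hours."""
    return watts * hours / 1000.0


if __name__ == "__main__":
    gpu_watts = 480.0     # power-limit ceiling, not a typical draw
    session_hours = 4.0   # hypothetical gaming session
    print(f"{heat_btu_per_hour(gpu_watts):.0f} BTU/h")
    print(f"{energy_kwh(gpu_watts, session_hours):.2f} kWh per session")
```

At the full 480 W that is about 1,640 BTU/h from the card alone, before counting the CPU and the rest of the system.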

- Joined

- Nov 23, 2010

- Messages

- 317 (0.06/day)

I like that Nvidia built this BFGPU and put it out there. It just showcases their top tier product and the potential of their technology.

Kind of like cars, M3 vs. 3-series. You pay for those infinitesimal improvements.


- Joined

- Mar 7, 2007

- Messages

- 1,429 (0.22/day)

| Processor | E5-1680 V2 |
|---|---|
| Motherboard | Rampage IV black |
| Video Card(s) | Asrock 7900 xtx |
| Storage | 500 gb sd |
| Software | windows 10 64 bit |
| Benchmark Scores | 29,433 3dmark06 score |

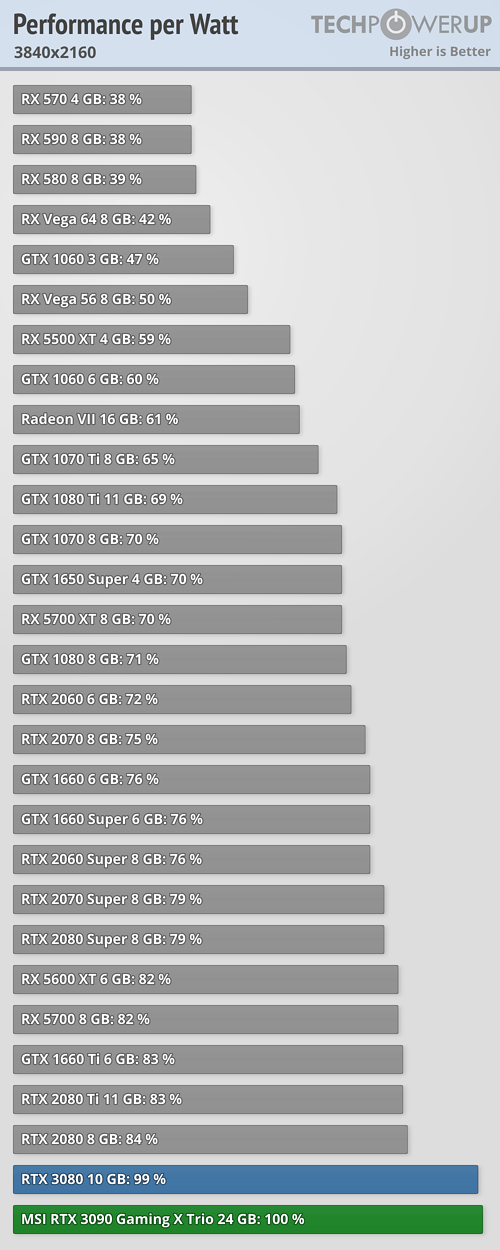

This card (just like I predicted) is a lot more power efficient that the RTX 3080:

So, your criticism is not totally sincere as the RTX 3080 must be an even worse card in your opinion. Also, NVIDIA has a higher performance per watt ratio for this gen vs the previous gen cards, so your comparison to Intel isn't valid.

As for me personally, I never buy GPUs with a TDP above 150W (or CPUs above 95W) because I simply don't want that much heat under my desk, so I guess I will skip the entire GeForce 30 series, as the RTX 3060 is rumored to have a TDP around 180-200W.

"Good lord" is misplaced. Again, this is a Titan class card. Either use it appropriately or forget about it - it does not justify its cost purely from a gaming perspective.

Are you kidding me? It's a "whole" 7% more efficient than the 3080, which is VERY little. Even if it were 20% more efficient, with the ridiculous price difference it'd take a lifetime to make back the initial price difference between these cards, which is huge no matter how you slice it. Even if it is a Titan, I'd be interested in seeing how much better it performs in business applications etc.; I doubt it's even worth it in that scenario.
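The "lifetime to make back the price difference" point can be checked with a quick break-even sketch. Every input here is hypothetical: the 350 W board power, the $800 price gap and the $0.15/kWh electricity price are placeholders, not figures from the review. Note that a 7% perf/W advantage means the same work takes 1/1.07 of the energy, i.e. about 6.5% less, not 7% less.

```python
def watts_saved_for_same_work(board_power_w: float, perf_per_watt_gain: float) -> float:
    """Power saved doing identical work on a card with better perf/W.

    A card that is (1 + gain) times as efficient needs 1 / (1 + gain) of the energy.
    """
    return board_power_w * (1.0 - 1.0 / (1.0 + perf_per_watt_gain))


def break_even_hours(price_difference: float, watts_saved: float, price_per_kwh: float) -> float:
    """Hours of use before energy savings repay a purchase-price premium."""
    savings_per_hour = watts_saved / 1000.0 * price_per_kwh
    return price_difference / savings_per_hour


if __name__ == "__main__":
    # All three inputs below are hypothetical placeholders.
    saved = watts_saved_for_same_work(board_power_w=350.0, perf_per_watt_gain=0.07)
    hours = break_even_hours(price_difference=800.0, watts_saved=saved, price_per_kwh=0.15)
    print(f"~{saved:.1f} W saved -> {hours:,.0f} h (~{hours / 8760:.1f} years at 24/7)")
```

With these placeholder inputs the saving is only about 23 W and break-even lands above 200,000 hours (over 25 years of 24/7 use); even a 20% perf/W advantage would still need roughly 91,000 hours.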

> All games have significant fluctuations. MP can be worse... but that isn't really dependent on the GPU. That's the nature of MP gaming, man.
>
> 360Hz will become more common, indeed. By that time, the next gen cards will be out. Like 4K, any decent 360 Hz monitor is quite pricey and overkill for anyone who doesn't have F U money or plays competitively where that stuff matters.
>
> You're fighting for the <1%.

Nah, I'm just saying people shouldn't make blanket statements without acknowledging the benefits, which most people don't realize can actually be very noticeable. We all acknowledge the price/performance value of a 3080 over a 3090. However, I do not agree with anyone saying the 3090 makes no noticeable difference at 1080p.

360Hz is supposed to be available starting at the end of this month. Any serious competitive gamer will want one.


- Joined

- Feb 11, 2009

- Messages

- 5,628 (0.97/day)

| System Name | Cyberline |
|---|---|
| Processor | Intel Core i7 2600k -> 12600k |
| Motherboard | Asus P8P67 LE Rev 3.0 -> Gigabyte Z690 Aorus Elite DDR4 |
| Cooling | Tuniq Tower 120 -> Custom watercooling loop |
| Memory | Corsair (4x2) 8gb 1600mhz -> Crucial (8x2) 16gb 3600mhz |
| Video Card(s) | AMD RX480 -> RX7800XT |
| Storage | Samsung 750 Evo 250gb SSD + WD 1tb x 2 + WD 2tb -> 2tb NVMe SSD |
| Display(s) | Philips 32inch LPF5605H (television) -> Dell S3220DGF |
| Case | antec 600 -> Thermaltake Tenor HTCP case |
| Audio Device(s) | Focusrite 2i4 (USB) |
| Power Supply | Seasonic 620watt 80+ Platinum |
| Mouse | Elecom EX-G |
| Keyboard | Rapoo V700 |
| Software | Windows 10 Pro 64bit |

Sorry, this is not a 1080p card, not by a long shot. If you're here to argue about this card's merits at this low resolution, I don't know what else to say, because I don't have any polite words in my lexicon.

What's next, you're going to argue that an excavator is not a good way of planting seeds? Or maybe you need to play your favourite game at 600fps? Why?

Well, no need to get upset, it's just a video card. Let's look at the facts and not made-up stats, shall we?

First, Nvidia themselves pushed for the new 360Hz screens, so high-refresh-rate/high-framerate gaming is definitely something they are going for. Now let's look at this review, shall we?

Average FPS at 1080p with this card is about 200fps... so yeah, we are not even close to touching the newly pushed 360Hz standard, and not even remotely close to your made-up 600fps...

sooo yeah.

- Joined

- Jan 11, 2005

- Messages

- 1,491 (0.20/day)

- Location

- 66 feet from the ground

| System Name | 2nd AMD puppy |
|---|---|
| Processor | FX-8350 vishera |
| Motherboard | Gigabyte GA-970A-UD3 |
| Cooling | Cooler Master Hyper TX2 |
| Memory | 16 Gb DDR3:8GB Kingston HyperX Beast + 8Gb G.Skill Sniper(by courtesy of tabascosauz &TPU) |
| Video Card(s) | Sapphire RX 580 Nitro+;1450/2000 Mhz |
| Storage | SSD :840 pro 128 Gb;Iridium pro 240Gb ; HDD 2xWD-1Tb |
| Display(s) | Benq XL2730Z 144 Hz freesync |
| Case | NZXT 820 PHANTOM |
| Audio Device(s) | Audigy SE with Logitech Z-5500 |
| Power Supply | Riotoro Enigma G2 850W |
| Mouse | Razer copperhead / Gamdias zeus (by courtesy of sneekypeet & TPU) |
| Keyboard | MS Sidewinder x4 |
| Software | win10 64bit ltsc |
| Benchmark Scores | irrelevant for me |

Solid card; too bad it is also a "scalper" edition.

- Joined

- Apr 18, 2013

- Messages

- 1,260 (0.29/day)

- Location

- Artem S. Tashkinov

> Well, no need to get upset, it's just a video card. Let's look at the facts and not made-up stats, shall we?
>
> First, Nvidia themselves pushed for the new 360Hz screens, so high-refresh-rate/high-framerate gaming is definitely something they are going for. Now let's look at this review, shall we?
>
> Average FPS at 1080p with this card is about 200fps... so yeah, we are not even close to touching the newly pushed 360Hz standard, and not even remotely close to your made-up 600fps...
>
> sooo yeah.

So, NVIDIA is to be blamed for extremely poorly optimized games which are often limited by the CPU performance. AMD fans never fail to disappoint with the utmost disrespect towards intelligence and logic.

And according to you games under RDNA 2.0 will magically scale better. LMAO.

sooo yeah.

Here's some harsh reality for you:

Tell me exactly how NVIDIA is supposed to fix this suckfest.


- Joined

- Nov 3, 2013

- Messages

- 2,141 (0.52/day)

- Location

- Serbia

| Processor | Ryzen 5600 |
|---|---|
| Motherboard | X570 I Aorus Pro |
| Cooling | Deepcool AG400 |
| Memory | HyperX Fury 2 x 8GB 3200 CL16 |
| Video Card(s) | RX 6700 10GB SWFT 309 |
| Storage | SX8200 Pro 512 / NV2 512 |
| Display(s) | 24G2U |
| Case | NR200P |
| Power Supply | Ion SFX 650 |
| Mouse | G703 (TTC Gold 60M) |
| Keyboard | Keychron V1 (Akko Matcha Green) / Apex m500 (Gateron milky yellow) |
| Software | W10 |

I've ordered one and am hopefully going to receive it before the Cyberpunk 2077 release (I am from Italy).

What to say, it will probably end up being the fastest 3090 together with the Aorus Xtreme and Zotac AMP Extreme. In $/perf the 3080 is for sure better, but you're paying for the fact that this card is the fastest GPU on Earth.


- Joined

- Dec 31, 2009

- Messages

- 19,375 (3.52/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |
|---|---|

> Nah, I'm just saying people shouldn't make blanket statements without acknowledging the benefits, which most people don't realize can actually be very noticeable. We all acknowledge the price/performance value of a 3080 over a 3090. However, I do not agree with anyone saying the 3090 makes no noticeable difference at 1080p.

I get you... I don't like blanket statements either, but when everything is covered except for the toes....

Think of it this way too... you're going to be heavily CPU limited at low res trying to feed all of those frames in. I think in TPU reviews we see much larger, more significant gains at higher resolutions. To use this on anything less than 4K does it an injustice, IMO. You're so CPU limited, especially at 1080p; the bottleneck shifts significantly to the CPU with this monster (and the 3080).

Watch the FPS go up with CPU clock increases at that low of a res. Look how poorly AMD does with its lack of clock speed. I'd start off at a static 4.5, then go 4.7, 4.9, 5.1, 5.3, etc... hell, I'd love to see some single-stage results at 5.5+ just to see if it keeps going, so we know how high it scales. The lows aren't due to the GPU at that point (1080p).
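The CPU-limited argument can be illustrated with a deliberately simplified model: if the CPU and GPU work on consecutive frames in a pipeline, throughput is set by whichever stage takes longer per frame. The millisecond costs below are invented example values, not measurements, and real games vary from scene to scene, which is exactly why different scenes can have different bottlenecks.

```python
from dataclasses import dataclass


@dataclass
class FrameCost:
    cpu_ms: float  # CPU time to prepare one frame (game logic, draw-call submission)
    gpu_ms: float  # GPU time to render that frame

    def fps(self) -> float:
        # Pipelined model: the slower stage limits the frame rate.
        return 1000.0 / max(self.cpu_ms, self.gpu_ms)

    def bottleneck(self) -> str:
        return "CPU" if self.cpu_ms >= self.gpu_ms else "GPU"


if __name__ == "__main__":
    cpu_ms = 5.0  # hypothetical; roughly independent of resolution
    # Hypothetical GPU costs; 4K has 4x the pixels of 1080p.
    scenes = {"1080p": 3.0, "4K": 12.0}
    for res, gpu_ms in scenes.items():
        for label, speedup in (("current GPU", 1.0), ("2x faster GPU", 2.0)):
            frame = FrameCost(cpu_ms, gpu_ms / speedup)
            print(f"{res}, {label}: {frame.fps():.0f} fps ({frame.bottleneck()}-bound)")
```

In this toy model, doubling GPU speed changes nothing at 1080p (200 fps either way, CPU-bound), while at 4K it takes the frame rate from about 83 to about 167 fps.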

These overclocked versions like the Strix are awesome, but, unless you are at 4K+, the 'value' (and I use that term loosely, like hot dog down a hallway loosely) of this card for gaming is garbage. Similar to the Titan it 'replaces'. You bought the Titan because it was a bridge between gaming and prosumer offering perks for the latter. This is the same thing going on here, sans the Titan name (so far). Everything about this card screams Titan........except for the Geforce naming.......thanks Nvidia.

> Average FPS at 1080p with this card is about 200fps... so yeah, we are not even close to touching the newly pushed 360Hz standard, and not even remotely close to your made-up 600fps...

CPU limited at such a low resolution, man. It may be the game devs, or it may be CPUs that are too slow for this beast of a GPU at 1080p. Look at how little the card gains over a 3080 at 1080p compared to 4K.

> Only 5 years ago, flagship graphics cards were only 500 dollars; today you need 2000 dollars. What a rip-off.

The flagship is the 3080. This is a Titan replacement.

Also, the first Titan was released in 2013... IIRC, it was $1000. So, seven years later, it costs 50% more (referring to the FE). And the 780 Ti was $700... the 780 was $500.
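The price comparison works out as follows. The $1500 figure is derived from the "50% more" in the post (roughly the Founders Edition price), and neither figure is adjusted for inflation, so treat this as nominal arithmetic only.

```python
def percent_increase(old: float, new: float) -> float:
    """Total percentage change from old to new."""
    return (new - old) / old * 100.0


def annualized_growth(old: float, new: float, years: float) -> float:
    """Compound annual growth rate, in percent (nominal, not inflation-adjusted)."""
    return ((new / old) ** (1.0 / years) - 1.0) * 100.0


if __name__ == "__main__":
    titan_2013 = 1000.0  # first Titan launch price cited in the post
    fe_2020 = 1500.0     # derived from "it costs 50% more"
    print(f"total: {percent_increase(titan_2013, fe_2020):.0f}%")
    print(f"per year over 7 years: {annualized_growth(titan_2013, fe_2020, 7):.1f}%")
```

A 50% increase over seven years corresponds to roughly 6% per year compounded.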


- Joined

- Sep 23, 2020

- Messages

- 6 (0.00/day)

| System Name | Potato |
|---|---|
| Processor | Intel core i5 9600 KF |
| Motherboard | Asus ROG Strix Gaming F |
| Cooling | Corsair H80I Rgb |
| Memory | Corsair Vengeance 32 Gb 4x8GB 3600 Mhz |
| Video Card(s) | EVGA Geforce GTX 1080 FTW |
| Storage | Samsung 500 Gb m2 nvme |
| Display(s) | Asus ROG QHD |
| Case | Nzxt h440 |
| Audio Device(s) | Creative blaster Z |
| Power Supply | Fractal design Tesla 1000W |
| Mouse | MSI DS 200 RGB |
| Keyboard | SteelSeries RGB m800 |
| Software | Windows X |
| Benchmark Scores | 12000 ish |

Only 5 years ago, flagship graphics cards were only 500 dollars; today you need 2000 dollars. What a rip-off.

- Joined

- Nov 23, 2010

- Messages

- 317 (0.06/day)

> Only 5 years ago, flagship graphics cards were only 500 dollars; today you need 2000 dollars. What a rip-off.

The BOM has steadily increased too.

- Joined

- Mar 10, 2010

- Messages

- 11,880 (2.19/day)

- Location

- Manchester uk

| System Name | RyzenGtEvo/ Asus strix scar II |
|---|---|
| Processor | Amd R5 5900X/ Intel 8750H |
| Motherboard | Crosshair hero8 impact/Asus |
| Cooling | 360EK extreme rad+ 360 EK slim all push, cpu ek suprim Gpu full cover all EK |
| Memory | Gskill Trident Z 3900cas18 32Gb in four sticks./16Gb/16GB |
| Video Card(s) | Asus tuf RX7900XT /Rtx 2060 |
| Storage | Silicon power 2TB nvme/8Tb external/1Tb samsung Evo nvme 2Tb sata ssd/1Tb nvme |
| Display(s) | Samsung UAE28"850R 4k freesync.dell shiter |
| Case | Lianli 011 dynamic/strix scar2 |
| Audio Device(s) | Xfi creative 7.1 on board ,Yamaha dts av setup, corsair void pro headset |
| Power Supply | corsair 1200Hxi/Asus stock |
| Mouse | Roccat Kova/ Logitech G wireless |
| Keyboard | Roccat Aimo 120 |
| VR HMD | Oculus rift |
| Software | Win 10 Pro |
| Benchmark Scores | laptop Timespy 6506 |

> The BOM has steadily increased too.

According to one guy on here, these coolers cost £20 (can't recall who, luckily for him, or his name would be here). The silicon cost has gone up, so has Nvidia's die size, and so has the cost of all the other parts, which makes the 3080 and 3070 good buys. Personally, I think the markup on the 3090 is a bit much: it's worth more than a 3080, but not this much.

@EarthDog, I think they (Nvidia) gimped the pro use of the 3090 specifically because pros were just buying Titans. Their 6000 Quadros are due, and I think they want their money for them.

> I get you... I don't like blanket statements either, but when everything is covered except for the toes....
>
> Think of it this way too... you're going to be heavily CPU limited at low res trying to feed all of those frames in. I think in TPU reviews we see much larger, more significant gains at higher resolutions. To use this on anything less than 4K does it an injustice, IMO. You're so CPU limited, especially at 1080p; the bottleneck shifts significantly to the CPU with this monster (and the 3080).
>
> Watch the FPS go up with CPU clock increases at that low of a res. Look how poorly AMD does with its lack of clock speed. I'd start off at a static 4.5, then go 4.7, 4.9, 5.1, 5.3, etc... hell, I'd love to see some single-stage results at 5.5+ just to see if it keeps going, so we know how high it scales. The lows aren't due to the GPU at that point....
>
> These overclocked versions like the Strix are awesome, but, unless you are at 4K+, the 'value' (and I use that term loosely, like hot dog down a hallway loosely) of this card for gaming is garbage. Similar to the Titan it 'replaces'.

Yet the 3090 is geared primarily towards the "toes", or the 1%.

Here are my PC's main specs and real-world results:

- 8700k 5.03GHz, 0 AVX offset
- 2080 Ti - Asus Strix OC - Constant 1890core, 1900mem
- 32GB DDR4 3216, 13-14-14-28-350
- 240Hz - PG258Q

When FPS drops to ~125fps in heavy scenes in Apex Legends at 1680x1050 (even lower than 1080p), GPU load reads 80%+ in GPU-Z. As most of us should know, 80% GPU load does not mean the GPU isn't occasionally hitting full or near-full capacity. It's just a rough guideline, but it's quite high.

When FPS jumps to around 187fps in typical scenes, GPU load is lower, around 50%.

CPU usage stays relatively constant: total/average CPU usage is ~25%, and the highest usage on any physical core is ~40%.

Clearly the CPU is not the bottleneck in this case, whereas the GPU is.

Of course we'll see better gains for high-end GPUs at higher resolutions on average, because the GPU will be the bottleneck more often throughout the scenes, for obvious reasons. But that does not mean you can't be GPU-bound at lower resolutions, as scenes vary drastically. It simply depends on the complexity/load of the scene vs. the capacity of the graphics card.

Again, you keep arguing value while I am not. I'm pointing out the performance differences for those that want it and are willing to pay for it.

> I've ordered one and am hopefully going to receive it before the Cyberpunk 2077 release (I am from Italy).
>
> What to say, it will probably end up being the fastest 3090 together with the Aorus Xtreme and Zotac AMP Extreme. In $/perf the 3080 is for sure better, but you're paying for the fact that this card is the fastest GPU on Earth.

Lucky bastard. I'm assuming you're saying you were able to order the Asus 3090 Strix O24G.

Where did you find it?

I've only seen it listed as unavailable on BestBuy and Newegg.


- Joined

- Dec 31, 2009

- Messages

- 19,375 (3.52/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |
|---|---|

> Snip

80% GPU load is a problem. They should be at 99% when they aren't held back.

Shame on NV for marketing it as an 8K gaming GPU. From that perspective, it is a pathetically performing product. How can it even be positive that it is "19% faster" than the 3080? That figure is against the reference 3080. When you compare the AIB 3090 to an AIB 3080, the difference is more like 15% or below, and comparing the same AIB models, the difference is closer to 10%. This is a shame. A big shame. For efficiency, so far both the 3080 and the 3090 sit in a not-much-better position than the 2080 and 2080 Ti. The only positive thing so far in the 3000 series is the performance upgrade of the 3080, but it's still short of the 1080's performance gain, let alone a third of its efficiency gain. And from what we have seen of the 3070 so far (Galax slides showing it well below the 2080 Ti, while NV slides claimed it was faster than the 2080 Ti), it isn't supposed to be a bomb either. From NV, my last hope is the 3060.


- Joined

- Feb 11, 2009

- Messages

- 5,628 (0.97/day)

| System Name | Cyberline |
|---|---|
| Processor | Intel Core i7 2600k -> 12600k |
| Motherboard | Asus P8P67 LE Rev 3.0 -> Gigabyte Z690 Aorus Elite DDR4 |
| Cooling | Tuniq Tower 120 -> Custom watercooling loop |
| Memory | Corsair (4x2) 8gb 1600mhz -> Crucial (8x2) 16gb 3600mhz |
| Video Card(s) | AMD RX480 -> RX7800XT |
| Storage | Samsung 750 Evo 250gb SSD + WD 1tb x 2 + WD 2tb -> 2tb NVMe SSD |
| Display(s) | Philips 32inch LPF5605H (television) -> Dell S3220DGF |
| Case | antec 600 -> Thermaltake Tenor HTCP case |
| Audio Device(s) | Focusrite 2i4 (USB) |
| Power Supply | Seasonic 620watt 80+ Platinum |
| Mouse | Elecom EX-G |
| Keyboard | Rapoo V700 |
| Software | Windows 10 Pro 64bit |

> So, NVIDIA is to be blamed for extremely poorly optimized games which are often limited by the CPU performance. AMD fans never fail to disappoint with the utmost disrespect towards intelligence and logic.
>
> And according to you games under RDNA 2.0 will magically scale better. LMAO.
>
> sooo yeah.
>
> Here's some harsh reality for you:
>
> Tell me exactly how NVIDIA is supposed to fix this suckfest.

I literally did not mention a thing about AMD... The fact that you felt the need to bring that company up says a lot about your mindset in this little discussion, I'm afraid.

Let's also not forget the Nvidia users who did not jump from the 10 series to the 20 series, and that the presentation was very much about convincing them "that it's time to upgrade"...

And are you really saying the stack of games TPU uses for its reviews are bad choices? That they somehow don't represent reality out there? I'm honestly kind of taken aback by your viewpoint here.

As for intelligence and logic, I already said it, man: 50% more performance for 50% more power consumption is not some impressive leap in tech. 50% more performance while using no more power would be. 50% more performance for 20% less power... now that would be something to cheer for.
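The three scenarios in that last paragraph translate directly into performance-per-watt changes. This sketch expresses performance and power as ratios relative to the previous card (1.0 = unchanged); the scenarios are the ones from the post, not measured data.

```python
def perf_per_watt_change(perf_ratio: float, power_ratio: float) -> float:
    """Percent change in performance per watt relative to a baseline card."""
    return (perf_ratio / power_ratio - 1.0) * 100.0


if __name__ == "__main__":
    scenarios = {
        "+50% perf, +50% power": (1.5, 1.5),
        "+50% perf, same power": (1.5, 1.0),
        "+50% perf, -20% power": (1.5, 0.8),
    }
    for name, (perf, power) in scenarios.items():
        print(f"{name}: {perf_per_watt_change(perf, power):+.1f}% perf/W")
```

The first scenario, the one being criticized, leaves perf/W unchanged; the other two correspond to +50% and +87.5% efficiency gains.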

> 80% GPU load is a problem. They should be at 99% when they aren't held back.

That's a common misconception. GPU load, or utilization, is only a particular kind of metric measured over a sample period of time. Specifically:

| Field | Description |
|---|---|
| utilization.gpu | Percent of time over the past sample period during which one or more kernels was executing on the GPU. The sample period may be between 1 second and 1/6 second depending on the product. |

It does not indicate exactly the "capacity" used. There are many other parts of the entire graphics system/pipeline that can be a bottleneck yet result in <99% "utilization".
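To make that definition concrete, here is a small sketch that computes utilization the way the table describes it: the share of a sample window during which at least one kernel was executing. The kernel timings are invented. The point is that a single small kernel occupying only part of the GPU for the whole window would still count as 100%. (On NVIDIA systems the real counter can be read with `nvidia-smi --query-gpu=utilization.gpu --format=csv`; the function below only simulates the definition.)

```python
from typing import List, Tuple


def utilization_percent(busy: List[Tuple[float, float]], window_start: float, window_end: float) -> float:
    """Percent of the window during which one or more kernels were executing.

    busy: (start, end) times of kernel executions. Intervals may overlap, since
    concurrent kernels still count as a single "busy" period.
    """
    # Clip intervals to the window and drop ones entirely outside it.
    clipped = sorted(
        (max(s, window_start), min(e, window_end))
        for s, e in busy
        if e > window_start and s < window_end
    )
    total = 0.0
    cur_start = cur_end = None
    for s, e in clipped:
        if cur_end is None or s > cur_end:
            # Gap found: close out the previous merged interval.
            if cur_end is not None:
                total += cur_end - cur_start
            cur_start, cur_end = s, e
        else:
            # Overlap: extend the current merged interval.
            cur_end = max(cur_end, e)
    if cur_end is not None:
        total += cur_end - cur_start
    return total / (window_end - window_start) * 100.0


if __name__ == "__main__":
    # Overlapping kernels from 0-60 ms, idle, then 80-90 ms: 70% "utilization".
    print(utilization_percent([(0, 40), (30, 60), (80, 90)], 0, 100))
    # One tiny kernel running the whole window reports 100%, however little of the GPU it uses.
    print(utilization_percent([(0, 100)], 0, 100))
```

Nothing in this metric says how many of the GPU's execution units were busy, which is why a sub-99% reading by itself does not prove the GPU has headroom.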

> I like that Nvidia built this BFGPU and put it out there. It just showcases their top tier product and the potential of their technology.
>
> Kind of like cars, M3 vs. 3-series. You pay for those infinitesimal improvements.

You are funny, really.

> This card (just like I predicted) is a lot more power efficient than the RTX 3080:
>
> So, your criticism is not totally sincere as the RTX 3080 must be an even worse card in your opinion.

LOL, a lot more power efficient than the 3080? For one, this chart compares an AIB 3090 to a reference 3080. If you take the ASUS TUF 3080, for example, it's 2% better in perf/W than the reference 3080. And if you check back-to-back generations, the 2080 Ti was 22% more efficient than the 1080 Ti (which is not a big leap at all), while the 3080 Ti is also around that 22% uplift from the 2080 Ti (assuming a 96-97% reference in the chart). When you check the 980 Ti to 1080 Ti switch, there is a whopping 65% efficiency increase: roughly 3 times more than the 1080 Ti to 2080 Ti or the 2080 Ti to 3080 Ti switch.

However, if you check the results of the other three 3090s, all are much worse in terms of efficiency than the ASUS Strix, as they all consume more power and perform worse. I have a problem with that.

If you check the power consumption charts, the ASUS card consumes 20W less in games while performing 9% better. However, in Furmark, the ASUS card consumes 50W more. To me, this points to some kind of measurement anomaly in the gaming section.

> So, NVIDIA is to be blamed for extremely poorly optimized games which are often limited by the CPU performance.

Please don't cry.


- Joined

- Feb 14, 2012

- Messages

- 2,358 (0.50/day)

| System Name | msdos |
|---|---|
| Processor | 8086 |
| Motherboard | mainboard |
| Cooling | passive |
| Memory | 640KB + 384KB extended |
| Video Card(s) | EGA |
| Storage | 5.25" |
| Display(s) | 80x25 |
| Case | plastic |
| Audio Device(s) | modchip |
| Power Supply | 45 watts |
| Mouse | serial |
| Keyboard | yes |
| Software | disk commander |
| Benchmark Scores | still running |

> no-holes-barred

no holds barred
