Right, good story, and then you get to the situations reviewers cannot cover in full length, and you still notice the occasional stutter, inconsistency, a hang here or there, and you're just not quite as smooth on frametimes as you'd love to be.

Or you start modding and DO require that VRAM allocated because many more assets are pushed through than developers intended. I've seen it too often. Allocation is relevant to performance and frame times, even if it's not in active usage. You are pushing harder on your VRAM bandwidth with more swaps required, and this will cause hiccups.

Reading charts != gaming

That would be a good point - if I had been arguing the opposite. Yes, the lack of frametime data and/or .1%/1% lows in TPU's charts is a weakness, and it is entirely possible that some of those average FPS numbers are misleading. But in general, they won't be. The problem with only looking at averages is that you're unable to spot the outliers, not that the overall image is wrong.
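To put the averages-versus-outliers point in concrete terms, here is a minimal Python sketch. The frame time numbers are made up for illustration, and "1% low" is computed as the average FPS over the slowest 1% of frames, which is only one of several definitions in use (capture tools differ). The function name `fps_summary` is my own.

```python
def fps_summary(frametimes_ms):
    """Return (average FPS, 1% low FPS) for a list of frame times in milliseconds."""
    if not frametimes_ms:
        raise ValueError("need at least one frame time")
    # Average FPS = frames rendered / total time in seconds.
    total_s = sum(frametimes_ms) / 1000.0
    avg_fps = len(frametimes_ms) / total_s
    # Assumed definition: 1% low = FPS equivalent of the mean of the slowest 1% of frames.
    worst_first = sorted(frametimes_ms, reverse=True)
    n = max(1, len(worst_first) // 100)
    slowest = worst_first[:n]
    low_fps = 1000.0 / (sum(slowest) / n)
    return avg_fps, low_fps


# Two invented runs with the same total render time for 1000 frames.
smooth = [16.7] * 1000                   # perfectly even pacing
stutter = [16.0] * 990 + [86.0] * 10     # mostly faster, with ten long hitches

for name, run in (("smooth", smooth), ("stutter", stutter)):
    avg, low = fps_summary(run)
    print(f"{name}: avg {avg:.1f} FPS, 1% low {low:.1f} FPS")
```

Both runs average about 59.9 FPS, but the 1% low drops from about 59.9 to about 11.6 in the run with hitches. The average is not wrong, it just cannot show you the ten frames that you would actually feel.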

But... I'll leave everyone to their own illusion. It's very hard to get the full insight on this apart from long term experience. However, if you intend to use your card for longer than 2-3 years, better have 'too much' VRAM or you'll find yourself upgrading soon. One thing though... putting your eggs in the basket of 'future technologies' is the worst possible outlook IMHO. Remember DX12 and its mGPU? Hmhm developers definitely jumped on that. I can name you another few handfuls of such 'developments' that fell off the dev budget train.

Except that mGPU has been a shitshow since the first implementation of SLI, and DX12 putting the onus for making it work entirely on developers was exactly what made it problematic previously (the few games that had official profiles worked okay-ish, everything else was crap, and developers are always pressed on time). DS is supposedly easily implemented, is standard on the Xbox consoles (which is a huge push for adoption by itself), and ultimately does the same that already happens, just faster and more efficiently. So while I agree that betting on future tech to save the day is generally a bad idea, DS seems like one of those (relatively few) cases where it's likely to work out decently. As advertised? Unlikely. But as an improvement over the current "okay, on the current trajectory in 10 seconds the player might enter areas A, B or C, each of which need 500MB of new textures loaded, and we can't expect more than 200MB/s, so let's start caching!"? That's a given. And, as I said above, that doesn't even need DirectStorage, it just requires games to be developed with the expectation of SSD storage.
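The caching arithmetic in that example can be sketched in a few lines of Python. The 500MB per area, three candidate areas, 200MB/s and 10 second lookahead come from the example above; the 2000MB/s figure is purely my assumption for a generic SSD, and the function name `prefetch_cost` is made up for illustration.

```python
def prefetch_cost(candidate_areas, area_size_mb, throughput_mb_s, lookahead_s):
    """Rough cost of speculatively caching every area the player might enter."""
    load_one_s = area_size_mb / throughput_mb_s   # time to stream a single area
    load_all_s = candidate_areas * load_one_s     # time to stream every candidate
    # Only one area is actually entered, so the rest is cached for nothing.
    speculative_mb = (candidate_areas - 1) * area_size_mb
    return {
        "load_one_s": load_one_s,
        "load_all_s": load_all_s,
        "fits_in_lookahead": load_all_s <= lookahead_s,
        "speculative_mb": speculative_mb,
    }


# Numbers from the example: areas A, B or C, 500MB each, 200MB/s, 10 s ahead.
slow_storage = prefetch_cost(3, 500, 200, 10)
# Assumption, not from the post: storage that sustains 2000MB/s.
fast_storage = prefetch_cost(3, 500, 2000, 10)

print(slow_storage)
print(fast_storage)
```

At 200MB/s a single area takes 2.5 seconds and all three take 7.5 seconds, so the game has to start loading well before it knows where the player is going, and up to 1000MB of what it loads is never used. At the assumed 2000MB/s a single area takes 0.25 seconds, so the game can wait much longer before committing, which is the whole point of developing with SSD storage in mind.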

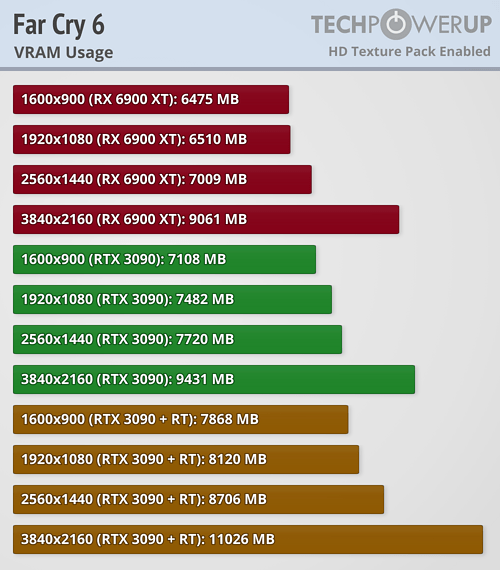

BTW... 6GB cards are definitely VRAM limited in those Far Cry charts. So there you have it. 1060 and 1660 Ti equal perf? Ouch. That's 26% performance lost... almost a perfect match for the relative loss of having 6GB instead of 8GB (25% less). That's your window looking at the future of cards 4-6 years of age. The rebuttal 'but 20 FPS' does not matter. They would have had a playable near 30 with more VRAM. In a relative sense, with higher perf cards that's 40 being an actual 60 if you had sufficient memory. And now note the correlation with Far Cry's VRAM allocations being all well over 6GB.

It seems like you're trying to make some "gotcha" point here, but ... *ahem*

There are three GPUs that show uncharacteristic performance regressions compared to previously tested games, and all at 2160p: the 4GB 5500 XT, the 6GB 1660 Ti, and the 6GB 5600 XT. The 6GB cards show much smaller drops than the 4GB card, but still clearly noticeable. So, for Far Cry 6, while reported VRAM usage at 2160p is in the 9-10GB range, actual VRAM usage is in the >6GB <8GB range.

So ... yes?

My whole point was: "you can't trust VRAM readouts from drivers or software, as they are not representative of actual VRAM usage" - as a counter to your "I've seen games come close to 7GB, so 8GB is going to be too little soon" argument. The point of my argument isn't specifically whether 8GB is sufficient or not, but more broadly that you need to look at actual performance data and not VRAM usage. Your initial statement was made on a deeply flawed basis - much more flawed than the absence of .1% data in TPU's reviews.

To make this extra clear: Your argument that I responded to was "I'm seeing >7GB, so we might soon be hitting 8GB and be bottlenecked." My response was "here's an example of a game that shows 9GB of VRAM usage, yet is only clearly bottlenecked on 6GB or lower."

As for whether the lower amount of VRAM will bring you from "a playable near 30" to something lower: at that point you need to lower your damn settings. Seriously. This is at Ultra. Playing at Ultra is always dumb and wasteful, even on a flagship GPU. And yes, lowering texture quality is often a lot more noticeable than other settings with a similar performance gain. But when you're at the "can I hit 30 or not" point in performance, well, either you're playing a game where smoothness doesn't matter, or you'll have a better play experience lowering your settings.

As I apparently have to repeat myself:

This could of course be interpreted as 8GB of VRAM becoming too little in the near future, but, a) we don't know where in the 6-8GB range that usage sits; b) there are technologies incoming that will alleviate this; c) this is only at 2160p, which these GPUs can barely handle at these settings levels even today. Thus, it's far more likely for compute to be a bottleneck in the future than VRAM, outside of a handful of poorly balanced SKUs - and there is no indication of the 3060 Ti being one of those.

I mean ... this should be pretty clear. The important thing is a GPU with a good balance of compute and VRAM. In current games, and as VRAM usage has developed in the past years, 8GB is unlikely to be a significant bottleneck for anything but the most powerful GPUs at resolutions they are actually capable of rendering at half-decent framerates. If you're buying a 3060 Ti to play at 2160p Ultra, then either you are making some particularly poor choices or you are well aware that this will not result in a smooth experience (which, depending on the game, can be perfectly fine). If you are buying a 3060 Ti, have a 2160p monitor, and refuse to lower your resolution or settings? Then you are letting stubbornness get in the way of enjoying your games, and the bottleneck is your attitude, not the GPU. Either way, even the 3070 Ti (with its 26% additional compute resources) will most likely do just fine with its 8GB for the vast majority of titles at the settings it can otherwise handle. That card has a higher chance of being bottlenecked by VRAM in some titles, and no doubt will, but enough for it to really matter? Not likely. And certainly not to a degree that can't be overcome by adjusting a few settings.

It even has nasty leaks with the Nvidia grass feature, to the point that it will consume tens of gigs of VRAM if it's available.
