Researchers from Università di Bologna and CINECA, the largest supercomputing center in Italy, have been exploring the concept of a RISC-V supercomputer. The team has laid the groundwork with the first-ever implementation that demonstrates the capability of the relatively novel ISA to run high-performance computing workloads. To create a supercomputer, you need pieces of hardware that act like Lego building blocks. Those blocks are nodes, each made from a motherboard, processor, memory, and storage, and a group of interconnected nodes forms a cluster. The Italian researchers decided to try something other than the usual Intel/AMD solutions and use a processor based on the RISC-V ISA. Using SiFive's Freedom U740 SoC as the base, the researchers named their RISC-V cluster "Monte Cimone."

Monte Cimone features four dual-board servers, each in a 1U form factor. Each board carries a SiFive Freedom U740 SoC with four U74 cores running at up to 1.4 GHz and one S7 management core. The eight nodes combine for a total of 32 U74 cores. Each node pairs the SoC with 16 GB of 64-bit DDR4 memory operating at 1866 MT/s, a PCIe Gen 3 x8 bus running at 7.8 GB/s, one gigabit Ethernet port, and USB 3.2 Gen 1 interfaces. The system is powered by two 250 Watt PSUs to support future expansion and the addition of accelerator cards.
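As a rough sanity check on the figures above, the core count and the two bandwidth numbers can be derived from the listed specs. This is only a sketch: it assumes a single 64-bit DDR4 channel per node and the standard PCIe Gen 3 parameters (8 GT/s per lane, 128b/130b encoding), and the function names are made up for illustration.

```python
# Back-of-the-envelope check of the Monte Cimone spec sheet.
# Assumptions (not stated in the post): one 64-bit DDR4 channel per node,
# PCIe Gen 3 at 8 GT/s per lane with 128b/130b line encoding.

NODES = 8            # four dual-board servers
CORES_PER_NODE = 4   # U74 cores per Freedom U740 SoC


def total_cores(nodes=NODES, cores_per_node=CORES_PER_NODE):
    """Number of U74 cores in the whole cluster."""
    return nodes * cores_per_node


def ddr_peak_gb_s(mega_transfers=1866, bus_bits=64):
    """Theoretical DRAM bandwidth in GB/s: transfers/s times bytes per transfer."""
    return mega_transfers * 1e6 * (bus_bits / 8) / 1e9


def pcie_gen3_gb_s(lanes=8, giga_transfers=8.0):
    """Usable PCIe Gen 3 bandwidth in GB/s, one direction, after 128b/130b encoding."""
    return giga_transfers * lanes * (128 / 130) / 8


print(total_cores())                 # 32
print(f"{ddr_peak_gb_s():.3f}")      # 14.928
print(f"{pcie_gen3_gb_s():.2f}")     # 7.88
```

The PCIe result of about 7.88 GB/s lines up with the 7.8 GB/s quoted in the post, and the DDR4 figure is the theoretical peak that measured memory bandwidth is normally compared against.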

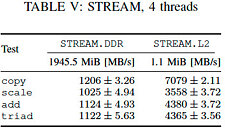

The team over in Italy benchmarked the system using HPL and Stream to determine the machine's floating-point computation capability and memory bandwidth. While the results are not very impressive, they are a beginning for RISC-V. Each node produced a sustained 1.86 GFLOPS in HPL, which would give the eight-node cluster 14.88 GFLOPS under perfect linear scaling. However, the measured efficiency for the entire cluster was 85%, resulting in 12.65 GFLOPS. For memory, each node has a theoretical peak bandwidth of 14.928 GB/s; however, Stream measured only 7760 MB/s, roughly 52% of that peak.
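The arithmetic behind those benchmark numbers is short enough to write out. The sketch below uses only the values quoted in the post; the helper names are hypothetical, and "efficiency" is taken here to mean achieved throughput divided by the ideal linearly scaled throughput.

```python
# Reproduce the HPL and Stream figures quoted above.
# Helper names are illustrative; input values come straight from the post.

def ideal_cluster_gflops(per_node_gflops, nodes):
    """Cluster throughput if every node contributed its full single-node result."""
    return per_node_gflops * nodes


def achieved_cluster_gflops(ideal_gflops, efficiency):
    """Cluster throughput after applying the measured scaling efficiency."""
    return ideal_gflops * efficiency


def bandwidth_efficiency(measured_mb_s, peak_gb_s):
    """Fraction of theoretical memory bandwidth actually reached."""
    return (measured_mb_s / 1000) / peak_gb_s


ideal = ideal_cluster_gflops(1.86, 8)
achieved = achieved_cluster_gflops(ideal, 0.85)
mem_eff = bandwidth_efficiency(7760, 14.928)

print(f"{ideal:.2f} GFLOPS ideal")        # 14.88 GFLOPS ideal
print(f"{achieved:.2f} GFLOPS achieved")  # 12.65 GFLOPS achieved
print(f"{mem_eff:.0%} of peak bandwidth") # 52% of peak bandwidth
```

In other words, the cluster loses about 15% of its ideal HPL throughput to scaling overhead, while each node reaches only about half of its theoretical memory bandwidth.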

These results show two things. First, the RISC-V HPC software stack is mature enough to run standard benchmarks, but it needs further optimization and faster silicon before it can take on anything monumental like weather simulation. Second, scaling in the HPC world is tricky and requires careful tuning of hardware and software together so that performance keeps growing as nodes are added. To approach the scaling and performance of supercomputers like Frontier, RISC-V needs a lot more tuning. Researchers and engineers are working hard to bring that idea to life, and it is a matter of time before we see more robust designs appear.

View at TechPowerUp Main Site | Source
