| System Name | Computer of Theseus |
|---|---|
| Processor | Intel i9-12900KS: 50x P-core multi @ 1.18 Vcore (target 1.275 V, -100 mV offset) |
| Motherboard | EVGA Z690 Classified |
| Cooling | Noctua NH-D15S, 2x ThermalRight TY-143, 4x Noctua NF-A12x25, 3x NF-A12x15, 2x Aquacomputer Splitty9Active |
| Memory | G-Skill Trident Z5 (32GB) DDR5-6000 C36 F5-6000J3636F16GX2-TZ5RK |
| Video Card(s) | ASUS PROART RTX 4070 Ti-Super OC 16GB, 2670MHz, 0.93V |
| Storage | 1x Samsung 990 Pro 1TB NVMe (OS), 2x Samsung 970 Evo Plus 2TB (data), ASUS BW-16D1HT (BluRay) |
| Display(s) | Dell S3220DGF 32" 2560x1440 165Hz Primary, Dell P2017H 19.5" 1600x900 Secondary, Ergotron LX arms |
| Case | Lian Li O11 Air Mini |
| Audio Device(s) | Audiotechnica ATR2100X-USB, El Gato Wave XLR Mic Preamp, ATH M50X Headphones, Behringer 302USB Mixer |
| Power Supply | Super Flower Leadex Platinum SE 1000W 80+ Platinum White, MODDIY 12VHPWR Cable |
| Mouse | Zowie EC3-C |
| Keyboard | Vortex Multix 87 Winter TKL (Gateron G Pro Yellow) |
| Software | Win 10 LTSC 21H2 |

I have been running this board since 2017.

Since December 2021, I have run it as follows with no issues:

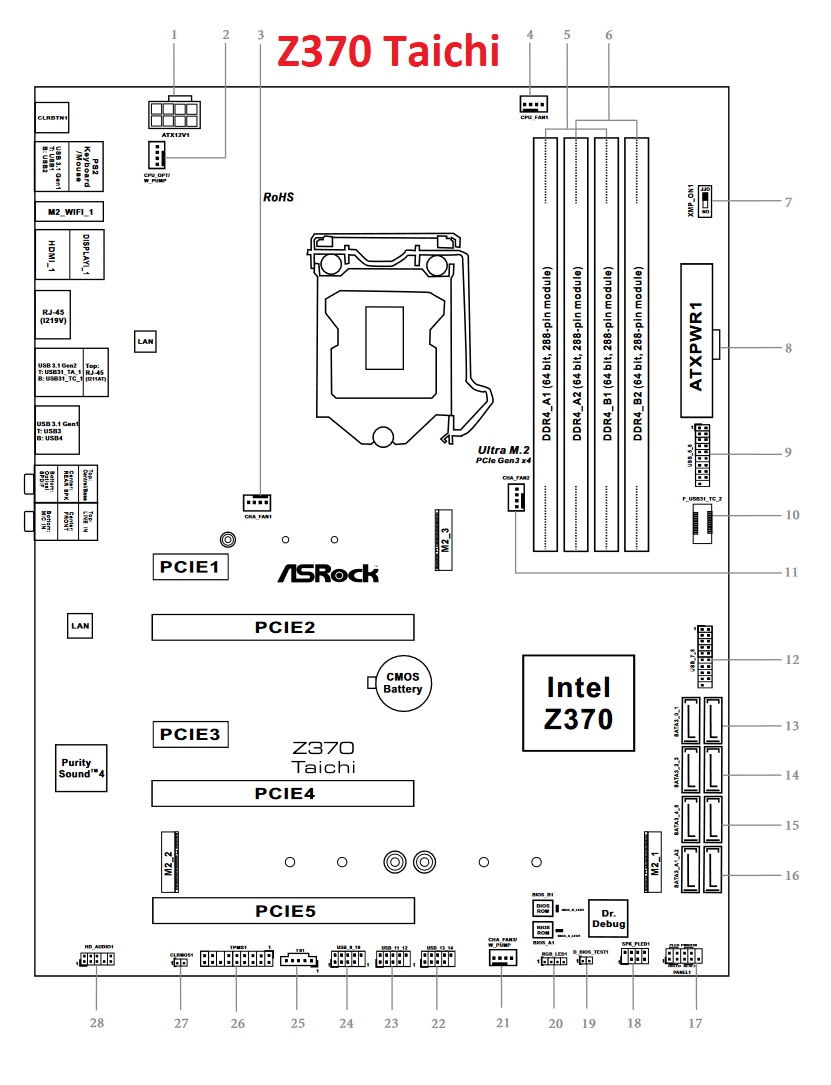

- PCIE2: Nvidia RTX 3060 12GB
- M.2_3: Samsung 970 Pro 512GB
- M.2_2: Old Samsung 970 Evo Plus 2TB
- M.2_1: Inland Premium NVMe 1TB

In June 2022, I swapped the Inland for a second Samsung 970 Evo Plus 2TB to increase my storage. Since then, I have had stability issues whenever three NVMe drives are installed. I sent the newer Evo Plus to Samsung for RMA, and no issues were found. I then ran the newer Evo Plus standalone in two different systems for several weeks with no issues. I also reinstalled the Inland and ran the system with only the Inland and the newer Evo Plus for two weeks without any problems. What I have found is that the system quickly throws I/O errors and crashes whenever a drive is installed in M.2_1, but if that slot is left empty, I can run any two of the four NVMe drives without trouble.

The configuration after the swap, which is unstable:

- PCIE2: Nvidia RTX 3060 12GB
- M.2_3: Samsung 970 Pro 512GB
- M.2_2: Old Samsung 970 Evo Plus 2TB
- M.2_1: New Samsung 970 Evo Plus 2TB

This week, I bought a PCIe 3.0 x4 to M.2 NVMe adapter card, put my old 970 Evo Plus 2TB in it, and installed it in PCIE4, below the graphics card. With that setup, the system fails to boot. Removing the graphics card lets the system boot. Reinstalling the graphics card and removing the adapter card also lets it boot, as does keeping the 3060 installed with the adapter card in place but empty. I have a second M.2 adapter card, and the same problem occurs with it.

The configuration at that point was:

- PCIE2: Nvidia RTX 3060 12GB
- M.2_3: Samsung 970 Pro 512GB
- M.2_2: New Samsung 970 Evo Plus 2TB
- PCIE4: Old Samsung 970 Evo Plus 2TB

I then moved the adapter card to PCIE5, the lowest full-size PCIe slot, which brings us to the latest configuration:

- PCIE2: Nvidia RTX 3060 12GB
- M.2_3: Samsung 970 Pro 512GB
- M.2_2: New Samsung 970 Evo Plus 2TB
- PCIE5: Old Samsung 970 Evo Plus 2TB

The system appears to be working for now. It actually boots, which is an improvement, but I am not sure it is stable: it has already rebooted once without warning in this configuration. I suspect an issue with the board's PCI Express. Does anyone have any tips? I am on the latest motherboard BIOS. I am thinking remounting the CPU might be the next step.
