T0@st

News Editor


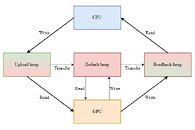

Microsoft has added two new features to its DirectX 12 API: GPU Upload Heaps and Non-Normalized Sampling, both delivered via the latest Agility SDK 1.710.0 preview. The former looks to be the more intriguing of the pair. The SDK preview, officially introduced on Friday 31 March, is currently accessible only to developers. Support has also been enabled in the latest graphics drivers issued by NVIDIA, Intel, and AMD. The Microsoft team has this to say about the preview version of the GPU upload heaps feature in DirectX 12: "Historically a GPU's VRAM was inaccessible to the CPU, forcing programs to have to copy large amounts of data to the GPU via the PCI bus. Most modern GPUs have introduced VRAM resizable base address register (BAR) enabling Windows to manage the GPU VRAM in WDDM 2.0 or later."

They go on to describe how the update gives the CPU access to the pool of VRAM on the connected graphics card: "With the VRAM being managed by Windows, D3D now exposes the heap memory access directly to the CPU! This allows both the CPU and GPU to directly access the memory simultaneously, removing the need to copy data from the CPU to the GPU increasing performance in certain scenarios." This optimization could offer many benefits for computer games, since memory requirements continue to grow in line with increasing visual sophistication and complexity.
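To make the difference concrete, here is a minimal toy model of the two upload paths. It is only a sketch: it does not call Direct3D, and the names `TransferStats`, `staged_upload`, and `direct_upload` are hypothetical, made up for illustration. The "VRAM" is just a Python `bytearray`. The point is to show what the quoted description removes: the CPU-side staging buffer and the separate copy step.

```python
from dataclasses import dataclass


@dataclass
class TransferStats:
    """Bookkeeping for a toy upload path (not real driver behaviour)."""
    system_memory_bytes: int = 0  # bytes held in a CPU-side staging buffer
    vram_bytes: int = 0           # bytes resident in the simulated VRAM
    copy_commands: int = 0        # explicit staging-to-VRAM copies issued


def staged_upload(payload: bytes) -> tuple[bytearray, TransferStats]:
    """Classic path: fill a staging buffer, then copy it into VRAM."""
    stats = TransferStats()
    staging = bytearray(payload)      # CPU writes into system memory first
    stats.system_memory_bytes = len(staging)
    vram = bytearray(len(payload))
    vram[:] = staging                 # separate copy step into VRAM
    stats.copy_commands = 1
    stats.vram_bytes = len(vram)
    return vram, stats


def direct_upload(payload: bytes) -> tuple[bytearray, TransferStats]:
    """Upload-heap-style path: CPU writes straight into CPU-visible VRAM."""
    stats = TransferStats()
    vram = bytearray(len(payload))
    vram[:] = payload                 # single write, no staging buffer
    stats.vram_bytes = len(vram)
    return vram, stats


if __name__ == "__main__":
    data = bytes(range(256)) * 4      # 1 KiB of example data
    _, staged = staged_upload(data)
    _, direct = direct_upload(data)
    print("staged:", staged)
    print("direct:", direct)
```

Both paths end with the same bytes in the simulated VRAM. The direct path simply gets there without the intermediate buffer or the extra copy, which is the saving Microsoft describes. Real-world gains would depend on the workload, as the "certain scenarios" wording in the quote suggests.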

A shared pool of memory between the CPU and GPU could remove the need to keep duplicates of game data in both system memory and graphics card VRAM, reducing the amount of data moved between the two. Modern graphics cards tend to feature very fast on-board memory (GDDR6) compared with main system memory (DDR5 at best). In theory, the CPU could benefit greatly from direct access to a pool of ultra-quick VRAM, perhaps offering an early preview of a time when DDR6 becomes the everyday standard for main system memory.

View at TechPowerUp Main Site | Source
