TheLostSwede

News Editor


Strong growth in AI server shipments has driven demand for high bandwidth memory (HBM). TrendForce reports that the top three HBM suppliers in 2022 were SK hynix, Samsung, and Micron, with 50%, 40%, and 10% market share, respectively. Furthermore, the specifications of high-end AI GPUs designed for deep learning have driven HBM product iteration. To prepare for the launch of NVIDIA H100 and AMD MI300 in 2H23, all three major suppliers are planning mass production of HBM3 products. At present, SK hynix is the only supplier mass-producing HBM3, and as a result it is projected to increase its market share to 53% as more customers adopt HBM3. Samsung and Micron are expected to start mass production towards the end of this year or in early 2024, with projected HBM market shares of 38% and 9%, respectively.

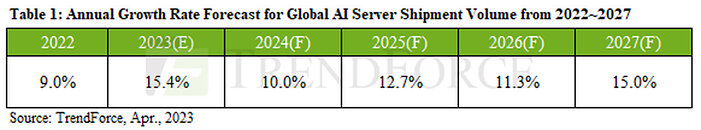

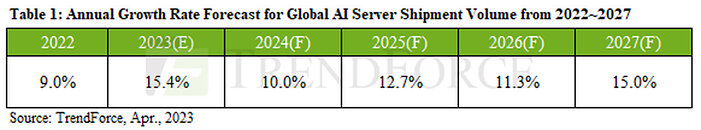

AI server shipment volume expected to increase by 15.4% in 2023

NVIDIA's DL/ML AI servers are equipped with an average of four or eight high-end graphics cards and two mainstream x86 server CPUs. These servers are primarily used by top US cloud service providers such as Google, AWS, Meta, and Microsoft. TrendForce analysis indicates that the shipment volume of servers with high-end GPGPUs is expected to increase by around 9% in 2022, with approximately 80% of these shipments concentrated among eight major cloud service providers in China and the US. Looking ahead to 2023, Microsoft, Meta, Baidu, and ByteDance will launch generative AI products and services, further boosting AI server shipments. AI server shipments are estimated to increase by 15.4% this year, and a 12.2% CAGR is projected for AI server shipments from 2023 to 2027.
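To get a feel for what a 12.2% CAGR from 2023 to 2027 compounds to, here is a rough Python sketch. Only the 12.2% rate and the 2023 to 2027 window come from the TrendForce figures; the function name `project_index`, the baseline index of 1.0 for 2023, and the reading of the window as four year-over-year steps are my own assumptions, since no absolute shipment volume is given.

```python
def project_index(base_year, end_year, cagr, base_value=1.0):
    """Compound base_value at a constant annual rate from base_year to end_year."""
    return {
        year: base_value * (1 + cagr) ** (year - base_year)
        for year in range(base_year, end_year + 1)
    }


# 12.2% CAGR for 2023-2027 is the TrendForce projection quoted above.
# The 2023 baseline of 1.0 is a placeholder index, not a real shipment count.
shipment_index = project_index(2023, 2027, 0.122)

for year, value in shipment_index.items():
    print(f"{year}: {value:.3f}x the 2023 volume")
```

Under those assumptions, 2027 shipments would land at roughly 1.58 times the 2023 level.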

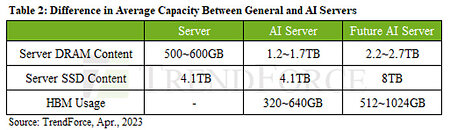

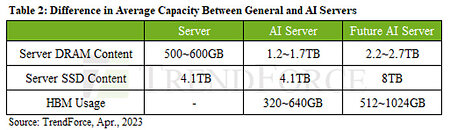

AI servers stimulate a simultaneous increase in demand for server DRAM, SSD, and HBM

TrendForce points out that the rise of AI servers is likely to increase demand for memory. While general servers have 500-600 GB of server DRAM, AI servers require significantly more, averaging 1.2-1.7 TB with 64-128 GB per module. Because AI servers have high-speed requirements, DRAM and HBM take priority over enterprise SSDs, and there has yet to be a noticeable push to expand SSD capacity. In terms of interface, however, PCIe 5.0 is favored for addressing high-speed computing needs. Additionally, AI servers tend to use GPGPUs, and with four or eight NVIDIA A100 80 GB cards per server, HBM usage would be around 320-640 GB. As AI models grow increasingly complex, demand for server DRAM, SSDs, and HBM will grow simultaneously.
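The 320-640 GB HBM figure is simply the per-card capacity multiplied by the card count. A minimal Python sketch of that arithmetic follows; the helper name `hbm_per_server_gb` is hypothetical, and the 80 GB per card is taken from the A100 80 GB model named in the article.

```python
A100_HBM_GB = 80  # HBM capacity per card for the A100 80 GB named in the article


def hbm_per_server_gb(gpu_count, hbm_per_gpu_gb=A100_HBM_GB):
    """Total HBM in one server: number of GPUs times HBM capacity per GPU."""
    return gpu_count * hbm_per_gpu_gb


# The article's two typical configurations: four or eight GPUs per server.
hbm_range = [hbm_per_server_gb(n) for n in (4, 8)]
print(hbm_range)  # [320, 640]
```

Swapping in a different per-card capacity via `hbm_per_gpu_gb` shows how the per-server total scales with newer GPUs.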

View at TechPowerUp Main Site | Source
