I agree with this, from what I guess was a short-lived RT experience. I think we've got a long way to go in overall graphics development to give RT a real shot at ultra realism... although I admit that in some scenes, viewed objects, or reflective surfaces RT looks great. Personally I don't hang about in games to observe the lighting effects in still or slow-paced visuals, I'm too busy kicking A-S-S in FPS MP titles. But even if game developers sharpened up their act with realistic textures and other aspects of the game with RT enabled... it would still suck, considering the best GPUs capable of running this sort of stuff seamlessly will probably cost around $2000-$3000... courtesy of the green monster.

And historically speaking, in every technical field, whenever you had to choose between two technologies, the older one was always the safe bet. I'm talking telephone vs Discord/Skype, email vs websites, land lines vs wifi... This is not due to poor design of the new tech but to the fact that the older tech had more experience and more backbone to it, and that the older tech was always built on lesser means and needed to stand on a simpler toolchain.

I trust 100% that if we have to choose between better textures and raster or better raytracing in the coming... 10 years, honestly, raster and higher quality textures will always be the safer bet. It will appear in more games, it will look better in more games, it will put less of a damper on performance in more games, etc.

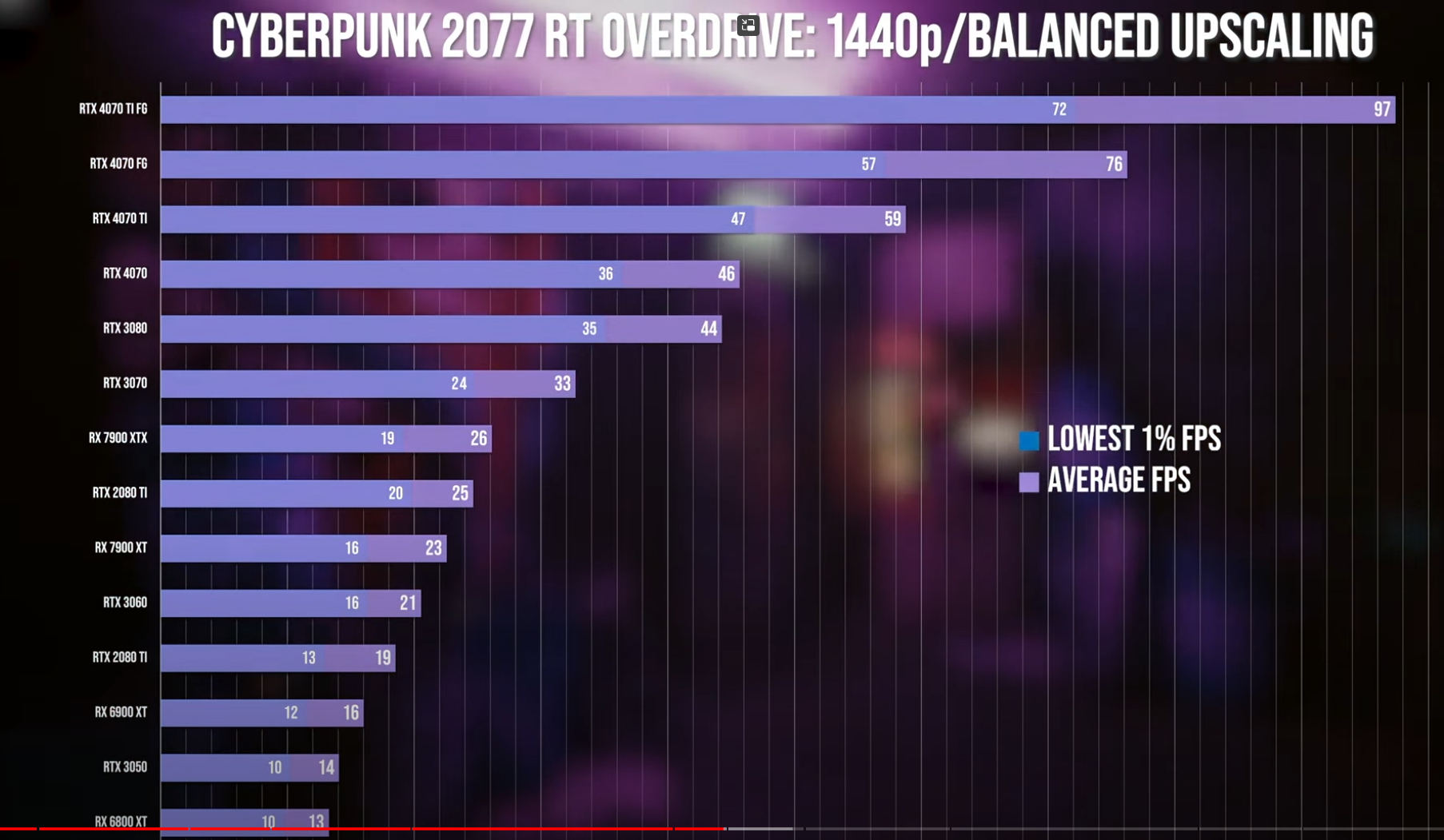

I believe I've said this elsewhere (probably Reddit actually), but with Cyberpunk's Path Tracing, we've had an indisputable technological statement from Nvidia:

"If you want Path Tracing, you need a team of Nvidia engineers dedicated to helping your team achieve it, months and years of slow progress with partial raytracing first, and for your users to own a $1600 or above GPU, so they can get 17 FPS, and then use supersampling and frame generation to get to above 60 FPS."

For PT to exist properly in the gaming world, you'll need to:

A) Knock down "a team of dedicated Nvidia engineers" to "an API and documentation that is easy enough to use for most render specialists"

B) Cut "months and years" down to "weeks to months" (so get PT running as easily as any other feature, rather than spending a year optimising it enough to be functional)

C) Get that GPU cost to below $700

D) Get the frame rate to a bare minimum of 30 FPS on that GPU, and use supersampling (and in time, get it to 60, then to 144, and all that)

To me, getting A should already take a solid year, and getting those render specialists competent with full PT will easily take years. It takes so long for common knowledge and docs to grow to a truly usable degree. Let's say 5 years or so for A to fully materialise.

Getting B is easy once A is completely done; let's say it'll slowly happen as A gets done.

Getting C will also easily take at least 2, if not 3, new generations of GPUs, so 4 to 6 years, not before.

Getting D is the same as getting C, but you can probably add another gen or two of GPUs for it.
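For anyone who wants the back-of-the-envelope maths for A to D spelled out, here is a rough Python sketch. It only uses the guesses from this post. The 2 years per GPU generation is an assumption read off the "2 to 3 generations, so 4 to 6 years" estimate, and the names `gens_to_years`, `milestones` and `all_done` are made up for illustration.

```python
# Rough timeline arithmetic for milestones A-D, using only the guesses in the post.
# Assumption: one GPU generation is about 2 years (implied by "2-3 gens = 4-6 years").
YEARS_PER_GPU_GEN = 2


def gens_to_years(min_gens, max_gens, years_per_gen=YEARS_PER_GPU_GEN):
    """Convert a (min, max) range of GPU generations into a (min, max) range of years."""
    return (min_gens * years_per_gen, max_gens * years_per_gen)


milestones = {
    "A (usable API and docs)": (5, 5),                # "5 years or so"
    "C (GPU under $700)": gens_to_years(2, 3),        # 2 to 3 generations
    "D (30+ FPS on that GPU)": gens_to_years(3, 5),   # C plus another gen or two
}
# B is assumed to land alongside A ("it'll slowly get done as A is done").
milestones["B (weeks to months of dev time)"] = milestones["A (usable API and docs)"]


def all_done(ms):
    """Years until every milestone is reached: the slowest one sets the pace."""
    return (max(lo for lo, _ in ms.values()), max(hi for _, hi in ms.values()))


for name, (lo, hi) in milestones.items():
    print(f"{name}: {lo}-{hi} years")
print("All four done in:", all_done(milestones), "years")
```

With those guesses, D is the bottleneck at 6 to 10 years, so everything hinges on how fast the hardware gets cheaper and faster, not on the tooling.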

I'm betting that Path Tracing will actually be present in quite a few AAA games in 5 years' time, and will still be only an option because it'll be an elitist thing. Then you'll get it to slide down from elitist to commonplace over the following 5 years. Until that whole 10 years is up, I highly doubt that you'll be better off seeking raytracing than seeking good raster/textures/classic game tech, which, by the way, will still keep growing, albeit at an ever slower pace in the fields where raytracing can replace it.

Also nice icon/name lol